This paper presents a brief overview of the progress that has been made in autonomous robots during the past few years. It presents the fundamental problems that have been addressed to enable the successful deployment of robotic automation in industrial environments. It also describes some of the challenges facing future autonomous applications in more complex scenarios, such as urban vehicle automation.

Initial implementations of robotic manipulators began in the late 1950s, with applications in automotive manufacturing. Hydraulic systems were later replaced by electric motors, making the robots more agile and controllable. The robots were initially used for very constrained and repetitive tasks, such as welding. They were controlled based on their internal kinematics alone, with no sensing information about the current state of the environment. The first innovation in this area came in the early 1980s, with the introduction of visual feedback provided by cameras. Several other sensor modalities, such as lasers and force sensors, were also added to monitor and interact with the environment. Nevertheless, most of the work with manipulators was performed within a fixed area of operation. In such cases, there was almost no uncertainty regarding the location of the robot, and the external environment was very well modeled and understood.

A very different scenario arises when a robot is required to move around within its environment. Two new capabilities then become essential: positioning and perception. A robot moving within a working area needs to localize, that is, to know its position and orientation with respect to a navigation frame. In addition, it needs a very good representation of its immediate surroundings in order to move safely without colliding with other objects.
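To make "position and orientation with respect to a navigation frame" concrete, here is a minimal planar sketch, assuming the robot pose is (x, y, theta) in the navigation frame; the function name `robot_to_nav` is hypothetical. It maps a point detected by an onboard sensor (expressed in the robot's own frame) into the navigation frame, which is what allows nearby obstacles to be placed on a common map.

```python
import math


def robot_to_nav(pose, point_robot):
    """Transform a 2D point from the robot frame into the navigation frame.

    pose: (x, y, theta) of the robot in the navigation frame, theta in radians.
    point_robot: (px, py) of a point measured in the robot's own frame.
    """
    x, y, theta = pose
    px, py = point_robot
    c, s = math.cos(theta), math.sin(theta)
    # Rotate by the robot heading, then translate by the robot position.
    return (x + c * px - s * py, y + s * px + c * py)


# Robot at (2, 1) facing +90 degrees; an obstacle 3 m straight ahead of it.
obstacle = robot_to_nav((2.0, 1.0, math.pi / 2), (3.0, 0.0))
print(f"({obstacle[0]:.2f}, {obstacle[1]:.2f})")  # (2.00, 4.00)
```

An error in the heading estimate rotates every transformed point about the robot, so small orientation errors produce position errors that grow with range; this is one reason localization accuracy matters for perception.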

The first successful demonstrations of mapping and localization were implemented in indoor environments and mostly used ultrasonic sensor information to obtain high-definition maps [1,2]. This process consists of first building a navigation map by moving the robot through the environment under manual operation, and then using this map to localize the robot when it works autonomously. The next breakthrough demonstrated that these two processes could be performed simultaneously, thereby initiating a very active area of research known as simultaneous localization and mapping (SLAM) [3,4]. These new algorithms enabled a robot to build a map and localize itself within it concurrently while exploring a new area, and facilitated the deployment of large indoor autonomous applications.
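The map-then-localize workflow can be illustrated with a one-dimensional histogram (Markov) localization sketch. This is not the sonar-based method of Refs. [1,2]; it assumes a cyclic corridor discretized into cells, a prebuilt map marking which cells contain a door, exact one-cell motion, and hypothetical sensor probabilities `p_hit` and `p_miss`.

```python
def normalize(belief):
    total = sum(belief)
    return [b / total for b in belief]


def sense(belief, world_map, measurement, p_hit=0.8, p_miss=0.2):
    # Weight each cell by how well the map agrees with the measurement.
    return normalize([
        b * (p_hit if cell == measurement else p_miss)
        for b, cell in zip(belief, world_map)
    ])


def move(belief, steps):
    # Exact motion on a cyclic corridor (no motion noise, for brevity).
    n = len(belief)
    return [belief[(i - steps) % n] for i in range(n)]


# Prebuilt map: 1 = door, 0 = wall (assumed built earlier under manual operation).
world_map = [1, 0, 0, 1, 0]
belief = [1 / len(world_map)] * len(world_map)  # start fully uncertain

for measurement in [1, 0, 0]:   # observe door, then wall, then wall,
    belief = sense(belief, world_map, measurement)
    belief = move(belief, 1)    # moving one cell right after each reading

best = max(range(len(belief)), key=belief.__getitem__)
print(best)  # 3
```

The belief concentrates on cell 3 because only a start at cell 0 explains the door, wall, wall sequence. SLAM removes the need for the prebuilt `world_map` by estimating the map and the pose jointly.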

The first major impact of autonomous technology in outdoor environments was in field robotics, which involves the automation of large machines in areas such as stevedoring (Fig. 1), mining, and defense [5].

《Fig. 1》

Fig. 1. Fully autonomous straddle carriers operating in the Port of Brisbane, Australia.

The successful deployment of this technology in field robotics required the assurance that a machine would always be under control, even if some of its components failed. This required the development of new sensing technology based on a variety of sensor modalities, such as radar and laser. These concepts were essential for the development of high-integrity navigation systems [6,7]. As discussed in Ref. [5], such systems include sensors based on different physical principles in order to minimize the chance that two sensor modalities fail at the same time. Similar principles were implemented in other areas, such as mining, using the concept of an "island of automation", that is, an area where only autonomous systems are allowed to operate. This fundamental constraint was essential for the successful development and deployment of autonomous systems in many industrial operations.
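One simple way to exploit sensor diversity for integrity is to cross-check independent measurements of the same quantity before trusting them. The sketch below is an illustrative assumption, not the method of Refs. [5-7]: it compares radar and laser range estimates to the same object with a normalized-innovation (chi-square, 1 degree of freedom) test; all readings and variances are hypothetical.

```python
def consistent(z_a, var_a, z_b, var_b, threshold=3.841):
    """Return True if two independent measurements of one quantity agree.

    When both sensors are healthy and unbiased, the squared difference
    normalized by its variance (var_a + var_b) follows a chi-square
    distribution with 1 degree of freedom; 3.841 is its 95% quantile.
    """
    d2 = (z_a - z_b) ** 2 / (var_a + var_b)
    return d2 <= threshold


# Hypothetical range readings (m) and variances (m^2) to the same obstacle.
radar, radar_var = 25.3, 0.5 ** 2
laser_ok, laser_bad = 25.1, 12.0   # laser_bad: e.g. a spurious return off dust
laser_var = 0.05 ** 2

print(consistent(radar, radar_var, laser_ok, laser_var))   # True
print(consistent(radar, radar_var, laser_bad, laser_var))  # False
```

A failed check only shows that at least one of the two sensors is faulty, not which one; with three or more physically diverse sensors, the inconsistent one can be isolated and excluded.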

Machine learning techniques have started to play a significant role in field robotic automation. During the last five years, we have seen many very successful demonstrations using a variety of supervised and unsupervised machine learning algorithms. Some of the more impressive applications are in agriculture (Fig. 2). It is now common to train a vision-based system to distinguish crops from weeds, monitor the health of a crop, and monitor soil conditions automatically and remotely.
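As a toy illustration of supervised crop/weed classification, the sketch below trains a nearest-centroid classifier on hand-labeled feature vectors. The two features (mean greenness and leaf elongation) and all sample values are hypothetical; practical systems typically learn features directly from images, for example with convolutional networks.

```python
def train_centroids(samples):
    """samples: list of (features, label). Returns {label: mean feature vector}."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, f in enumerate(features):
            acc[i] += f
        counts[label] = counts.get(label, 0) + 1
    return {lbl: [s / counts[lbl] for s in acc] for lbl, acc in sums.items()}


def classify(centroids, features):
    # Assign the label whose centroid is closest in squared Euclidean distance.
    def dist2(c):
        return sum((a - b) ** 2 for a, b in zip(c, features))
    return min(centroids, key=lambda lbl: dist2(centroids[lbl]))


# Hypothetical labeled data: (greenness, elongation) -> label
training = [
    ((0.80, 0.90), "crop"), ((0.75, 0.85), "crop"), ((0.82, 0.95), "crop"),
    ((0.60, 0.20), "weed"), ((0.55, 0.30), "weed"), ((0.65, 0.25), "weed"),
]
centroids = train_centroids(training)
print(classify(centroids, (0.78, 0.88)))  # crop
print(classify(centroids, (0.58, 0.22)))  # weed
```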

《Fig. 2》

Fig. 2. Applications of intelligent robots in agriculture.

The interaction of autonomous robots with people and with manually operated machines is a much more complex problem. One of the most active areas in R&D is the operation of autonomous vehicles (AVs) in urban environments (Fig. 3). An AV must be able to interact with a dynamically changing world in a very predictable and safe manner. Its perception system is responsible for providing complete situational awareness around the vehicle under all possible environmental conditions, including the position of all fixed and mobile objects in proximity to the vehicle. Furthermore, safe AV operation requires estimating the intentions of other drivers and of pedestrians in order to negotiate future maneuvers and plan accordingly [8,9].
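Intention estimation can be framed as recursive Bayesian inference over a small set of hypotheses. The sketch below is a hypothetical example, not a method from Refs. [8,9]: it updates the probability that a pedestrian intends to cross from a sequence of discretized motion observations, using an assumed likelihood table.

```python
# Assumed likelihoods P(observation | intention); each row sums to 1.
LIKELIHOOD = {
    "cross":    {"toward_road": 0.6, "along_road": 0.1, "stationary": 0.3},
    "no_cross": {"toward_road": 0.1, "along_road": 0.6, "stationary": 0.3},
}


def update(prior, observation):
    """One Bayes update: posterior is proportional to likelihood times prior."""
    unnorm = {h: LIKELIHOOD[h][observation] * p for h, p in prior.items()}
    total = sum(unnorm.values())
    return {h: v / total for h, v in unnorm.items()}


belief = {"cross": 0.5, "no_cross": 0.5}
for obs in ["stationary", "toward_road", "toward_road"]:
    belief = update(belief, obs)

print(round(belief["cross"], 3))  # 0.973
```

The "stationary" observation is equally likely under both hypotheses and leaves the belief unchanged, while each "toward_road" observation multiplies the odds in favor of crossing by 6. A planner can then act on this probability, for example by slowing down once it exceeds a threshold.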

《Fig. 3》

Fig. 3. Autonomous connected electric vehicles operating on a University of Sydney campus.

Most vehicle manufacturers and research institutions are currently investing significant resources to introduce this technology within the next few years. This has accelerated progress in all areas related to autonomy, including the development of new algorithms and of low-cost sensing and computing hardware.

Significant progress has been made in perception by using a variety of sensors, such as lasers, radar, cameras, and ultrasonic devices. Each sensor modality has advantages and disadvantages, and any robust deployment must use a combination of sensor types in order to achieve integrity.

All sensor modalities have failure modes, which may be caused by weather or other environmental conditions. It is well known that although cameras provide very good texture information for classification purposes, their performance is not always satisfactory under heavy rain, snow, or dust. Lasers provide very good range information and are more robust to rain; nevertheless, they can fail catastrophically in steam, heavy dust, or smoke. Radar is well known to be largely robust to weather-related environmental conditions; however, it lacks the resolution and discrimination capabilities of other perception modalities. Fundamental and applied research efforts are currently directed at fusing different sensor modalities in order to guarantee integrity under all possible working conditions.
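A minimal way to combine modalities while tolerating failures is inverse-variance weighting of the sensors currently judged healthy. This sketch assumes independent, unbiased range estimates of one object and a per-sensor health flag supplied by some separate fault-detection layer; the readings are hypothetical, and the sketch is not a complete integrity scheme.

```python
def fuse(readings):
    """Inverse-variance weighted fusion of (value, variance, healthy) tuples.

    Returns (fused_value, fused_variance), or None if no sensor is healthy.
    """
    healthy = [(z, var) for z, var, ok in readings if ok]
    if not healthy:
        return None
    info = sum(1.0 / var for _, var in healthy)        # total information
    value = sum(z / var for z, var in healthy) / info  # weighted mean
    return value, 1.0 / info


# Hypothetical readings (m, m^2): camera degraded in heavy rain,
# laser and radar still healthy.
readings = [
    (24.0, 1.00, False),  # camera: flagged unhealthy, excluded
    (25.1, 0.01, True),   # laser: precise
    (25.5, 0.25, True),   # radar: coarser but weather-robust
]
value, variance = fuse(readings)
print(f"{value:.3f} m, variance {variance:.4f} m^2")  # 25.115 m, variance 0.0096 m^2
```

The fused variance is never larger than that of the best healthy sensor, and excluding a failed sensor keeps its error out of the estimate. The harder problem, which this sketch assumes away, is deciding reliably which sensors are healthy.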

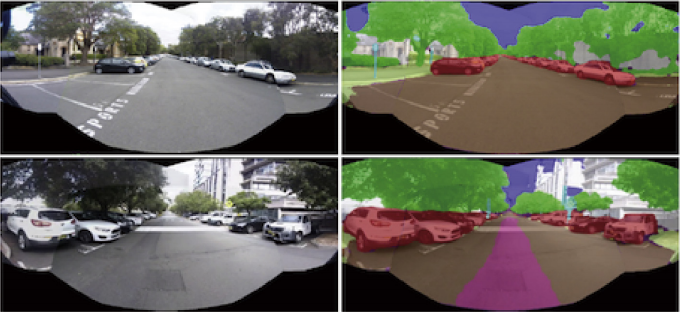

Another area that has seen enormous progress is deep learning. The availability of large computational and memory resources has enabled the training of high-dimensional models with large amounts of data. A fundamental advantage of deep learning is that features do not need to be hand-engineered to train the models. One very impressive application of this technology is automatic labeling in vision sensing, which is usually referred to as semantic labeling (Fig. 4). These methods use large amounts of data to train a convolutional neural network that assigns every pixel in an image to one class from a predefined set. One advantage of these networks is that they can be retrained for use in other scenarios with relatively low computational effort, which is usually known as "transfer learning" [10,11]. These techniques are now part of the most sophisticated advanced driver-assistance systems (ADASs) and autonomous road vehicle implementations.
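The final step of semantic labeling can be illustrated without the network itself. Assuming a trained model has already produced a score (logit) for each class at each pixel, here a hypothetical 2x2 image with a made-up three-class set, the label map is obtained by taking, at every pixel, the class with the highest softmax probability.

```python
import math

CLASSES = ["road", "vehicle", "pedestrian"]  # hypothetical class set


def softmax(scores):
    m = max(scores)                      # subtract max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def label_image(score_map):
    """score_map[row][col] is a list of per-class scores (logits).

    Returns a same-sized grid of class names, one per pixel.
    """
    labels = []
    for row in score_map:
        out_row = []
        for scores in row:
            probs = softmax(scores)
            best = max(range(len(probs)), key=probs.__getitem__)
            out_row.append(CLASSES[best])
        labels.append(out_row)
    return labels


# Hypothetical logits for a 2x2 image.
logits = [
    [[4.0, 0.5, 0.1], [3.2, 2.9, 0.3]],
    [[0.2, 3.5, 0.4], [0.1, 0.6, 2.8]],
]
print(label_image(logits))  # [['road', 'road'], ['vehicle', 'pedestrian']]
```

Softmax does not change which class scores highest, so an argmax over raw logits yields the same labels; the probabilities matter when a downstream module needs a per-pixel confidence. In this picture, transfer learning commonly means keeping the feature-extracting layers and retraining only the final layers that produce these scores for a new class set.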

《Fig. 4》

Fig. 4. Original images (left), semantic labeling of objects (top right), and the vehicle-inferred path (bottom right).

Fundamental challenges still exist in vehicle automation, such as positioning, perception integrity, interaction with manually driven vehicles and with pedestrians, and safe validation of AV technology.

(1) Positioning: AVs require a level of positioning accuracy that can only be achieved by using premade high-definition maps. Building and maintaining these maps is very challenging, since the maps must be robust and must scale to cover entire countries or the whole world.

(2) High-integrity perception: Current implementations can only operate under reasonably good weather and environmental conditions. Typical perception sensors, such as vision and laser sensors, can fail catastrophically when operating in dense fog, snow, or dust.

(3) Learning how to drive: Driving is a multiagent game in which all participants interact and collaborate in order to achieve their individual goals. This capability is still very difficult for robots, since it requires inferring the intentions of all interacting participants and possessing the negotiation skills needed to make decisions in a safe and efficient manner.

(4) Validation of AVs: The current state of AV technology has demonstrated that it is possible to deploy AVs in urban road environments. It is much more difficult to demonstrate that AVs can operate safely in all possible traffic scenarios. A comprehensive treatment of this problem is presented in Ref. [12], whose authors acknowledge that there will always be accidents involving AVs; however, they propose identifying a set of normal vehicle behaviors that ensures an AV will never be the cause of an accident.
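As an illustration of what a formal "normal behavior" rule can look like, the sketch below computes a minimum safe longitudinal following distance in the style of the Responsibility-Sensitive Safety (RSS) model. Whether this matches the exact formulation of Ref. [12] is an assumption, and the response time and acceleration bounds are hypothetical default values.

```python
def rss_safe_distance(v_rear, v_front, rho=0.5,
                      a_accel_max=2.0, a_brake_min=4.0, a_brake_max=8.0):
    """Minimum safe gap (m) for a rear vehicle following a front vehicle.

    Worst case assumed: during the response time rho (s) the rear vehicle
    accelerates at up to a_accel_max, then brakes at only a_brake_min,
    while the front vehicle brakes as hard as a_brake_max (all in m/s^2).
    Speeds are in m/s.
    """
    v_after = v_rear + rho * a_accel_max             # rear speed after response time
    d_response = v_rear * rho + 0.5 * a_accel_max * rho ** 2
    d_rear_brake = v_after ** 2 / (2 * a_brake_min)  # rear stopping distance
    d_front_brake = v_front ** 2 / (2 * a_brake_max) # front stopping distance
    return max(0.0, d_response + d_rear_brake - d_front_brake)


# Both vehicles at 20 m/s (72 km/h).
print(rss_safe_distance(20.0, 20.0))  # 40.375
```

Under these worst-case assumptions, a rear vehicle that never lets the gap fall below this value can always stop before reaching the front vehicle. The difficulty the text points to lies in choosing bounds and rules that remain valid across all traffic scenarios.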

This work presented a brief overview of the evolution of robotic automation. The last few years have seen the advent of very large computational and memory resources, new sensing capabilities, and significant progress in machine learning. It is clear that these technologies are enabling a whole new set of autonomous applications that will be part of our lives in the very near future.

The current issue of this journal presents robotic automation applications in road vehicles and future biosyncretic robots. It also includes papers addressing actuators and intelligent manufacturing.
