1. Introduction

The aim of intelligent manufacturing is to establish flexible and adaptive manufacturing operations, locally or globally, by using integrated information technology (IT) and artificial intelligence (AI) that combine advanced computing power with manufacturing equipment. Intelligent manufacturing depends on the timely acquisition, distribution, and utilization of real-time data from both machines and processes on manufacturing shop floors [1], and even across product life-cycles. Effective information sharing can improve production quality, reliability, and resource efficiency, as well as the recyclability of end-of-life products. Built on digitalization, intelligent manufacturing is also intended to be more sustainable and to contribute to the factories of the future. Because intelligent manufacturing depends extensively on AI, understanding AI is necessary to better grasp the future of intelligent manufacturing. This paper provides the author’s perspective on AI, from intelligence science to intelligent manufacturing.

2. A brief history of AI

AI is a branch of intelligence science, a field that broadly covers two areas: natural intelligence and artificial intelligence. Natural intelligence is the science of discovering the intelligent behaviors of living systems, whereas artificial intelligence, or AI, is both the science and the engineering of making intelligent software systems and machines. The two areas have contributed to each other for decades. Advances in natural intelligence have laid a solid foundation for AI research on artificial neural networks (ANNs), genetic algorithms (GAs), ant colony optimization (ACO), and other techniques, while advanced AI tools have helped to speed up discoveries in natural intelligence [2]. Because AI has a relatively short history, research in the field remains active and promising, and much is yet to be discovered, for example in the context of manufacturing.

Before discussing intelligent manufacturing, it is necessary to briefly review the history of AI, as summarized in Fig. 1. The history of AI can be traced back to the early 1940s. The first AI model was a binary ANN model created by Warren McCulloch and Walter Pitts of the University of Illinois in 1943 [3]. Although their model only considered binary states (i.e., on/off for each neuron), it served as a basis for the rapid growth of ANN research in the late 1980s. In 1950, British mathematician Alan Turing proposed the well-known Turing Test [4] to determine whether machines can think. The Turing Test is conducted through text-based computer communication among one examiner, one human, and one machine (i.e., a computer), each in a separate room. The examiner can ask any questions; if the examiner cannot distinguish the machine from the human on the basis of their answers, the machine passes the test. In 1951, Marvin Minsky and Dean Edmonds, two graduate students from Princeton University, built the first neuron computer, which simulated a network of 40 neurons [5].
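
To make the binary neuron concrete, the following Python sketch shows a simplified McCulloch–Pitts unit: binary inputs are weighted, summed, and compared with a fixed threshold, and the unit outputs 1 (on) or 0 (off). The weighted-sum form and the names `mcp_neuron`, `and_gate`, and `or_gate` are illustrative assumptions; the original 1943 model distinguished excitatory from inhibitory inputs rather than using arbitrary weights.

```python
from typing import Sequence


def mcp_neuron(inputs: Sequence[int], weights: Sequence[int], threshold: int) -> int:
    """Simplified McCulloch-Pitts unit: fires (1) if the weighted sum of its
    binary inputs reaches the threshold, otherwise stays off (0)."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


# Logic gates realized with hand-set (not learned) weights and thresholds.
def and_gate(a: int, b: int) -> int:
    return mcp_neuron([a, b], [1, 1], threshold=2)


def or_gate(a: int, b: int) -> int:
    return mcp_neuron([a, b], [1, 1], threshold=1)


if __name__ == "__main__":
    # Print the truth table: a, b, AND, OR
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, and_gate(a, b), or_gate(a, b))
```

Because the weights and thresholds are fixed by hand rather than learned, such units can only realize logic that the designer wires in; learning algorithms such as back-propagation came later.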

Fig. 1. A brief history of AI.

An important milestone in AI development was the first AI workshop [6], organized by John McCarthy at Dartmouth College in 1956. This workshop marked the end of the “dark age” and the beginning of “the rise of AI” in AI history. The term “artificial intelligence”, suggested by McCarthy, was agreed upon at that time and is still in use. McCarthy later moved to the Massachusetts Institute of Technology (MIT), where in 1958 he defined the first AI language, LISP, which is still used today. One of the most ambitious projects of this era was the General Problem Solver (GPS) [7], created in 1961 by Allen Newell and Herbert Simon of Carnegie Mellon University. The GPS was based on formal logic and could generate an infinite number of operators in its attempts to find a solution; however, it was inefficient at solving complicated problems. In 1965, Lotfi Zadeh of UC Berkeley published his famous paper “Fuzzy sets” [8], which laid the foundation of fuzzy set theory. The first expert system, DENDRAL [9], was developed at Stanford University in 1969 in a project funded by the National Aeronautics and Space Administration (NASA) and led by Joshua Lederberg, a Nobel Prize laureate in genetics. At that time, however, most AI projects could only handle toy problems rather than real-world ones, so many projects were canceled in the United States, the United Kingdom, and several other countries, and AI research entered a so-called “AI winter.”

Despite these funding cuts, AI research continued. In 1969, Bryson and Ho [10] laid the basis of back-propagation for neural network learning. In 1975, John Holland of the University of Michigan proposed the first GA, which used selection, crossover, and mutation as genetic operators for optimization [11]. In 1976, the same Stanford group that had developed DENDRAL developed MYCIN [12], a rule-based expert system for diagnosing infectious blood diseases using 450 if-then rules, which was found to perform better than a junior doctor.
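
The three genetic operators named above can be illustrated with a minimal Python sketch of a GA on a toy bit-string problem (OneMax, whose fitness is simply the number of 1-bits). Tournament selection, one-point crossover, bit-flip mutation, and all parameter values are common textbook choices assumed here for illustration; they are not necessarily those of Holland's original formulation.

```python
import random
from typing import Callable, List

Genome = List[int]
Fitness = Callable[[Genome], float]


def tournament_select(pop: List[Genome], fitness: Fitness,
                      rng: random.Random, k: int = 3) -> Genome:
    """Selection: return the fittest of k randomly drawn individuals."""
    return max(rng.sample(pop, k), key=fitness)


def one_point_crossover(a: Genome, b: Genome, rng: random.Random) -> Genome:
    """Crossover: join a prefix of parent a to the matching suffix of parent b."""
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]


def mutate(genome: Genome, rate: float, rng: random.Random) -> Genome:
    """Mutation: flip each bit independently with probability `rate`."""
    return [1 - bit if rng.random() < rate else bit for bit in genome]


def genetic_algorithm(fitness: Fitness, n_bits: int = 20, pop_size: int = 30,
                      generations: int = 60, mutation_rate: float = 0.02,
                      seed: int = 0) -> Genome:
    """Evolve a population of bit strings and return the best one found."""
    rng = random.Random(seed)  # seeded for reproducible runs
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    best = max(pop, key=fitness)
    for _ in range(generations):
        pop = [
            mutate(one_point_crossover(tournament_select(pop, fitness, rng),
                                       tournament_select(pop, fitness, rng), rng),
                   mutation_rate, rng)
            for _ in range(pop_size)
        ]
        # Track the best individual seen so far (it is not reinserted into pop).
        best = max(pop + [best], key=fitness)
    return best


if __name__ == "__main__":
    solution = genetic_algorithm(fitness=sum)  # OneMax: fitness = number of 1-bits
    print(solution, sum(solution))
```

Selection supplies pressure toward fitter strings, crossover recombines partial solutions, and mutation keeps the population from losing bit values entirely; dropping any one of them typically slows or stalls the search.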

After 30 years, work on neural networks was taken up again in the field of AI. A new period, in which AI became a science, began in 1982, when John Hopfield introduced Hopfield networks [13], which remain popular today. In 1986, back-propagation was implemented as a practical learning algorithm for ANNs [14], 17 years after it was proposed. This work also triggered the start of distributed AI (DAI) through parallel distributed processing. In 1987, 22 years after Zadeh's paper, Japanese companies successfully built fuzzy set theory, or fuzzy logic, into dishwashers and washing machines. In 1992, John Koza proposed genetic programming [15] to manipulate symbolic code representing LISP programs. Based on the ideas of DAI and artificial life, intelligent agents gradually took shape in the mid-1990s. In the late 1990s, hybrid systems combining fuzzy logic, ANNs, and GAs became popular for solving complex problems. More recently, various new AI approaches have emerged, including ACO, particle swarm optimization (PSO), artificial immune optimization (AIO), and DNA computing. The future potential of AI, for example in manufacturing, remains hard to predict.
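
A Hopfield network acts as an associative memory: patterns are stored in symmetric connection weights, and a corrupted input settles into a nearby stored pattern. The following minimal Python sketch assumes bipolar (+1/−1) neuron states, the standard Hebbian outer-product storage rule, and asynchronous updates in a fixed neuron order; the function names are illustrative.

```python
from typing import List

Pattern = List[int]  # bipolar neuron states: +1 or -1


def train_hebbian(patterns: List[Pattern]) -> List[List[float]]:
    """Hebbian storage: w_ij = (1/N) * sum over patterns of x_i * x_j, with w_ii = 0."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w


def recall(w: List[List[float]], state: Pattern, sweeps: int = 5) -> Pattern:
    """Asynchronous recall: update one neuron at a time, in fixed order,
    until a full sweep changes nothing or the sweep budget runs out."""
    s = list(state)
    n = len(s)
    for _ in range(sweeps):
        changed = False
        for i in range(n):
            h = sum(w[i][j] * s[j] for j in range(n))  # local field of neuron i
            new = 1 if h >= 0 else -1
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:
            break
    return s


if __name__ == "__main__":
    stored = [[1, 1, 1, 1, -1, -1, -1, -1],
              [1, -1, 1, -1, 1, -1, 1, -1]]
    w = train_hebbian(stored)
    noisy = [-1, 1, 1, 1, -1, -1, -1, -1]  # first pattern with neuron 0 flipped
    print(recall(w, noisy))
```

With the two orthogonal patterns above, the flipped neuron is corrected on the first sweep and the state stays at the first stored pattern.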

The first widely known AI tool was probably the chess-playing computer program Deep Blue [16], created by International Business Machines Corporation (IBM). When Garry Kasparov, then the world chess champion, played Deep Blue in an exhibition match in 1997, he lost the match 2.5 to 3.5. Another early example is the Honda ASIMO robot of 2005, which was able to climb stairs. For a robot to move in an unstructured environment and take commands from a human, it needs runtime capabilities in natural language processing, computer vision, perception, object recognition, machine learning, and motion control. More recently, in 2016, DeepMind's AlphaGo [17] beat the world Go champion Lee Sedol in four out of five games, using cloud computing, reinforcement learning, and Monte Carlo tree search combined with deep neural networks for decision-making. Its successor, AlphaGo Zero [18], surpassed AlphaGo in just three days by learning from scratch through self-play. Today, AI techniques and systems can be found in every field, from chess playing to robot control, from disease diagnosis to airplane autopilots, and from smart design to intelligent manufacturing. In addition to the AI techniques summarized in Fig. 1, machine learning and deep learning show great promise for intelligent manufacturing.

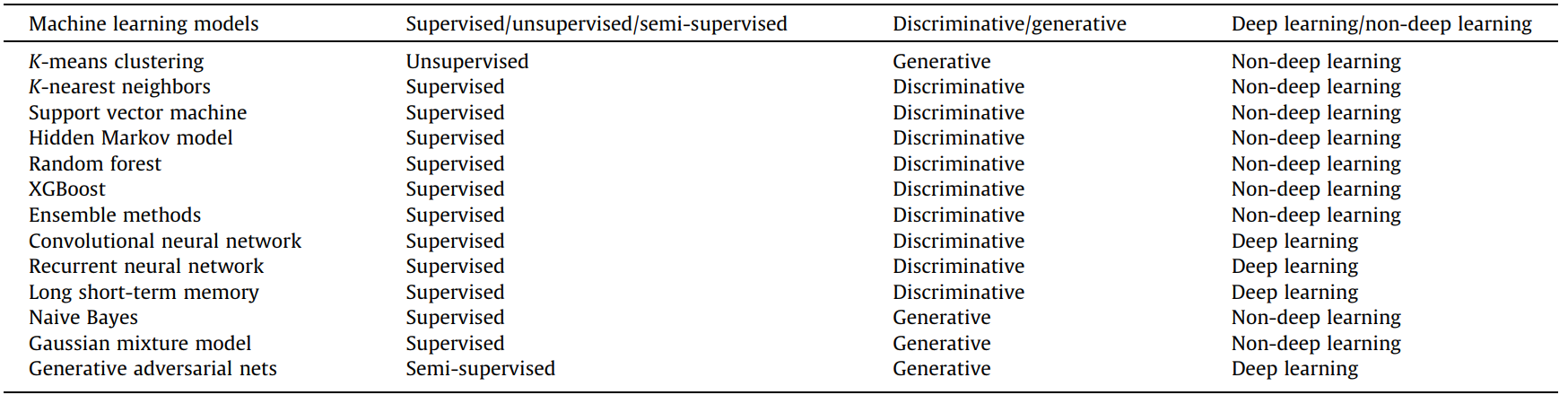

Table 1 classifies typical machine learning models based on whether they are supervised or unsupervised, discriminative or generative, and deep learning or non-deep learning.

Table 1 Typical machine learning models.

3. Representative examples of AI in manufacturing

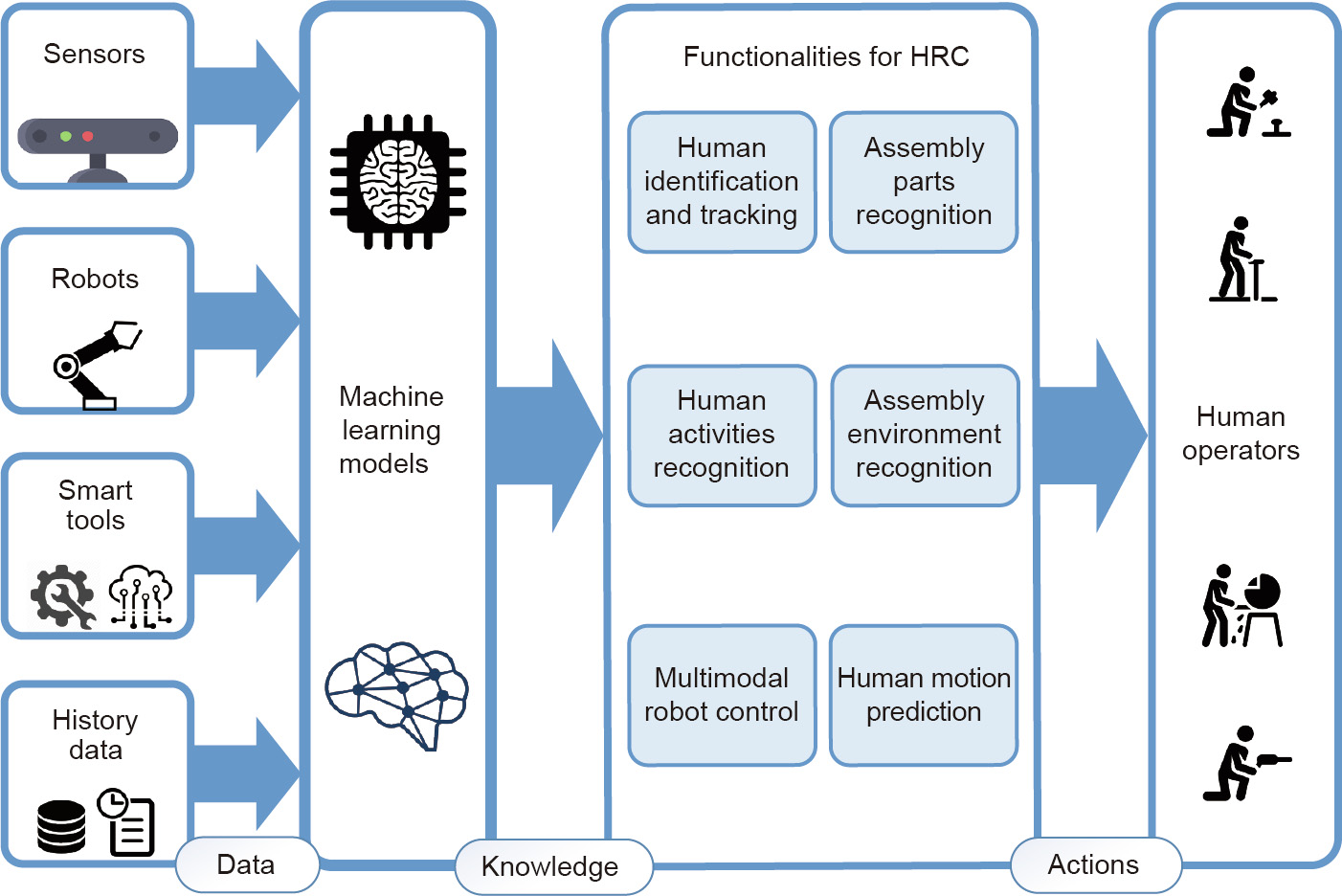

In the context of manufacturing, intelligence science, or more specifically AI in the form of machine learning models, contributes to intelligent manufacturing. Fig. 2 depicts one scenario of human–robot collaboration (HRC), in which data from sensors and field devices are transformed into knowledge by applying appropriate machine learning models [19]. This knowledge is then transformed into actions by domain-specific HRC decision modules. As a result, human operators can work safely with robots in an immersive environment, while the robots predict what the humans will do next and provide in situ assistance as needed [20,21].
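
The data–knowledge–action chain can be sketched in a few lines of Python. Everything here is hypothetical and chosen only to make the three stages visible: the sensor data are 2D hand positions of an operator, the knowledge step is a 1-nearest-neighbour classifier standing in for the machine learning models of Fig. 2, and the decision module is a pair of hand-written rules with an assumed 0.3 m safety radius.

```python
from dataclasses import dataclass
from math import dist
from typing import Sequence, Tuple

Features = Tuple[float, float]  # (hand distance to robot in m, approach speed in m/s)


@dataclass
class Reading:
    """Hypothetical sensor sample: operator hand position (m) relative to the robot base."""
    x: float
    y: float
    t: float  # timestamp (s)


def to_features(readings: Sequence[Reading]) -> Features:
    """Data: reduce a window of readings to current distance and approach speed."""
    first, last = readings[0], readings[-1]
    d_first = dist((first.x, first.y), (0.0, 0.0))
    d_last = dist((last.x, last.y), (0.0, 0.0))
    speed = (d_first - d_last) / (last.t - first.t)  # positive when approaching
    return d_last, speed


def infer_intent(f: Features, examples: Sequence[Tuple[Features, str]]) -> str:
    """Knowledge: label of the nearest labelled example (1-nearest neighbour)."""
    return min(examples, key=lambda ex: dist(ex[0], f))[1]


def decide(intent: str, distance: float, safety_radius: float = 0.3) -> str:
    """Action: hypothetical HRC rules; the safety check overrides everything else."""
    if distance < safety_radius:
        return "stop"
    if intent == "reach_for_part":
        return "hand_over_part"
    return "continue_task"


def hrc_step(readings: Sequence[Reading], examples: Sequence[Tuple[Features, str]]) -> str:
    """Run one pass of the data -> knowledge -> action chain."""
    f = to_features(readings)
    return decide(infer_intent(f, examples), distance=f[0])


if __name__ == "__main__":
    examples = [((0.5, 0.4), "reach_for_part"), ((1.2, 0.0), "idle"), ((0.8, -0.3), "idle")]
    window = [Reading(1.0, 0.0, 0.0), Reading(0.6, 0.0, 1.0)]
    print(hrc_step(window, examples))  # hand approaching at 0.4 m/s -> "hand_over_part"
```

Keeping the safety rule in the decision module, rather than inside the learned model, means that a misclassified intent cannot override the stop condition.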

Fig. 2. Machine learning in intelligent manufacturing.

Brain robotics [22] is another example, in which robots are controlled adaptively using the brainwaves of experienced human operators. Rather than following the data–knowledge–action chain, it realizes a brainwave–action progression by mapping human brainwave patterns to robot control commands through appropriate training, as shown in Fig. 3. In this case, a 14-channel EMOTIV EPOC+ headset (EMOTIV, USA) is used to collect human brainwave signals. After signal processing, the matching commands are passed on to the robot controller for adaptive execution.
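
One simple way to realize such a trained brainwave-to-command mapping is a nearest-centroid classifier, sketched below in Python. The feature (mean absolute amplitude per channel), the command names, and the classifier itself are assumptions for illustration, not the signal processing used in Ref. [22]; a practical system would likely use richer features, such as frequency-band power. Raw samples are assumed to be already available as 14 lists of floats, one per channel; no headset SDK calls are shown.

```python
from statistics import fmean
from typing import Dict, List

N_CHANNELS = 14  # matches the 14-channel EPOC+ headset

Window = List[List[float]]  # N_CHANNELS lists of raw samples


def features(window: Window) -> List[float]:
    """Assumed feature: mean absolute amplitude of each channel."""
    if len(window) != N_CHANNELS:
        raise ValueError(f"expected {N_CHANNELS} channels, got {len(window)}")
    return [fmean(abs(v) for v in channel) for channel in window]


def train(labelled: Dict[str, List[Window]]) -> Dict[str, List[float]]:
    """Training: average the feature vectors recorded for each command."""
    return {
        command: [fmean(col) for col in zip(*(features(w) for w in windows))]
        for command, windows in labelled.items()
    }


def to_command(window: Window, centroids: Dict[str, List[float]]) -> str:
    """Map a new window to the command whose centroid is nearest (squared Euclidean)."""
    f = features(window)
    return min(centroids,
               key=lambda cmd: sum((a - b) ** 2 for a, b in zip(f, centroids[cmd])))
```

In use, `train` would be run once on labelled recordings from an experienced operator, and `to_command` would then be called on each incoming window before the resulting label is forwarded to the robot controller.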

Fig. 3. Brain robotics HRC. Reproduced from Ref. [22] with permission of Elsevier, © 2018.

4. Opportunities and challenges

Enabled by AI and the latest IT advances such as cloud computing, big data analytics, the Internet of Things (IoT), and mobile Internet/5G, numerous opportunities for intelligent manufacturing lie ahead. These technologies will facilitate real-time information sharing, knowledge discovery, and informed decision-making in intelligent manufacturing, as follows:

• The IoT provides better connectivity of machines and field devices, making real-time data collection possible.

• Mobile Internet/5G makes it practical to transmit large amounts of data with ultra-low latency for real-time information sharing.

• Cloud computing offers rapid, on-demand data analysis; it also stores data that can be easily shared with authorized users.

• Big data analytics can reveal hidden patterns and meaningful information in data, converting data into information and further transforming information into knowledge.

For example, new opportunities in intelligent manufacturing may include ① remote real-time monitoring and control with little delay, ② defect-free machining through opportunistic process planning and scheduling, ③ cost-effective and secure predictive maintenance of assets, and ④ holistic planning and control of complex supply chains. Moreover, intelligent manufacturing in the near future will benefit from the aforementioned technologies on different time scales, as follows:

• In five years, better horizontal and vertical integration may generally reduce the gaps between islands of automation by 80%, mainly enabled by the IoT and mobile Internet.

• In ten years, experience-driven manufacturing operations may become data-driven with the support of prior knowledge, mainly enabled by cloud computing and big data analytics.

• In 20 years, numerous small and medium-sized enterprises (SMEs) may gain a competitive edge in the global market, powered by cloud manufacturing that is available to all.

Nevertheless, complexity and uncertainty will remain major challenges in manufacturing for years to come. AI and machine learning offer opportunities to mitigate, or even largely resolve, these challenges. For example, deep learning can be used to better understand the manufacturing context and to predict problems or failures in a manufacturing process more accurately before they occur, thus leading toward defect-free manufacturing.

Safe HRC is another challenge on the path toward intelligent and flexible automation with humans in the loop. Such collaboration is useful and necessary, especially in manufacturing assembly operations, where deep learning can help make robots intelligent enough to assist human operators while providing much-improved context awareness in pursuit of absolute human safety.

Finally, cybersecurity and new business models must be adequately addressed before intelligent manufacturing can be put into practice in the factories of the future.
