1. Introduction

The history of artificial intelligence (AI) clearly reveals the connections between brain science and AI. Many pioneering AI scientists were also brain scientists. The neural connections in the human brain that were discovered using microscopes inspired the artificial neural network [1]. The brain’s convolution property and multilayer structure, which were discovered using electronic detectors, inspired the convolutional neural network and deep learning [2,3]. The attention mechanism, which was investigated using positron emission tomography (PET) imaging, inspired the attention module [4]. Working memory, which was investigated using functional magnetic resonance imaging (fMRI), inspired the memory module in machine learning models that led to the development of long short-term memory (LSTM) [5]. The changes in dendritic spines that occur during learning, which were observed using two-photon imaging systems, inspired the elastic weight consolidation (EWC) model for continual learning [6]. Although the AI community and the brain science community currently appear to be unconnected, results from brain science reveal important issues related to the principles of intelligence and have led to significant theoretical and technological breakthroughs in AI. We are now in the deep learning era, which was directly inspired by brain science, and the growing body of findings in brain science can be expected to inspire new deep learning models. Furthermore, the next breakthrough in AI is likely to come from brain science.

2. AI inspired by brain science

The goal of AI is to investigate theories and develop computer systems that can perform tasks requiring biological or human intelligence, such as perception, recognition, decision-making, and control [7]. By contrast, the goal of brain science, also termed neuroscience, is to study the structures, functions, and operating mechanisms of biological brains, such as how the brain processes information, makes decisions, and interacts with the environment [8]. AI can therefore be regarded as a simulation of brain intelligence, and a straightforward way to develop AI is to combine it with brain science and related fields, such as cognitive science and psychology. In fact, many pioneers of AI, such as Alan Turing [9], Marvin Minsky and Seymour Papert [10], John McCarthy [11], and Geoffrey Hinton [12], were interested in both fields and contributed a great deal to AI thanks to their solid backgrounds in brain science.

Research on AI began directly after the emergence of modern computers, with the goal of building intelligent “thinking” machines. Since the birth of AI, there have been interactions between it and brain science. At the beginning of the 20th century, advances in microscopy allowed researchers to observe the connections between neurons in the nervous system, including the brain. Inspired by these connections, computer scientists developed the artificial neural network, which is one of the earliest and most successful models in the history of AI. Hebbian learning, proposed in 1949 [1], is one of the oldest learning algorithms and was directly inspired by the dynamics of biological neural systems. Based on the observation that a synapse between two neurons is strengthened when the neurons on either side of it (input and output) show highly correlated activity, the Hebbian learning algorithm increases the connection weight between two neurons if their activities are highly correlated. Artificial neural networks subsequently received considerable research attention. A representative work was the perceptron [13], which directly modeled information storage and organization in the brain. The perceptron is a single-layer artificial neural network with a multidimensional input, and it laid the foundation for multilayer networks.
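The two learning rules mentioned above can be contrasted in a few lines. The following is a minimal sketch, assuming the commonly used formalizations Δw_i = η·x_i·y for the Hebbian rule and Δw_i = η·(t − y)·x_i for the perceptron rule with a step activation; the learning rates, the AND data set, and the function names are illustrative choices, not details taken from [1] or [13].

```python
# Minimal sketch contrasting a Hebbian update with the perceptron learning rule.
# Learning rates, data, and function names are illustrative assumptions.

def hebbian_update(w, x, y, lr=0.1):
    """Hebbian rule: strengthen w_i in proportion to input x_i times output y."""
    return [wi + lr * xi * y for wi, xi in zip(w, x)]

def perceptron_predict(w, b, x):
    """Single-layer perceptron: weighted sum followed by a step threshold."""
    s = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if s > 0 else 0

def perceptron_train(samples, n_inputs, lr=1.0, epochs=20):
    """Perceptron rule: change the weights only when the prediction is wrong."""
    w, b = [0.0] * n_inputs, 0.0
    for _ in range(epochs):
        for x, target in samples:
            err = target - perceptron_predict(w, b, x)
            w = [wi + lr * err * xi for wi, xi in zip(w, x)]
            b += lr * err
    return w, b

# Correlated input and output activity strengthens only the active connection.
print(hebbian_update([0.0, 0.0], x=[1.0, 0.0], y=1.0))  # [0.1, 0.0]

# Logical AND is linearly separable, so a single-layer perceptron can learn it.
AND = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b = perceptron_train(AND, n_inputs=2)
print([perceptron_predict(w, b, x) for x, _ in AND])  # [0, 0, 0, 1]
```

The design difference is visible in the code: the Hebbian update needs no target and strengthens a weight whenever input and output are active together, whereas the perceptron update is error-driven and stops changing once every training sample is classified correctly.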

In 1959, Hubel and Wiesel [14], who later received the 1981 Nobel Prize in Physiology or Medicine, used electronic signal detectors to record the responses of neurons while the visual system was shown different images. These single-cell recordings from the mammalian visual cortex revealed how visual inputs are filtered and pooled by simple and complex cells in area V1. This research demonstrated that the visual processing system in the brain performs convolution-like operations and has a multilayered structure. It indicated that biological systems use successive layers of nonlinear computation to transform raw visual inputs into an increasingly complex set of features, thereby making the visual system invariant to transformations of the input, such as changes in pose and scale, during recognition. These observations directly inspired the convolutional neural network [2,3], which became the fundamental model of the recent, ground-breaking deep learning technique [15].

Another key component of artificial neural networks and deep learning is the back-propagation algorithm [16], which addresses the problem of how to tune the parameters, or weights, of a network. Interestingly, the basic idea of back propagation was first proposed in the 1980s by neuroscientists and cognitive scientists [17], rather than by computer scientists or machine learning researchers. These scientists observed that the microstructure of the biological neural system is gradually tuned through a learning procedure whose purpose is to minimize the error, and maximize the reward, of the output.

The attention mechanism was first introduced in the 1890s as a psychological concept describing how an intelligent agent selectively concentrates on certain important parts of the available information, rather than on all of it, in order to improve the cognition process [4].
In the 1990s, researchers began using new medical imaging technologies, such as PET, to investigate the attention mechanism in the brain. In 1999, PET was used to study selective attention in the brain [18]. Other imaging technologies subsequently revealed more about the attention mechanism in the biological brain [19]. Inspired by this mechanism, AI researchers began incorporating attention modules into artificial neural networks in temporal [20] or spatial [21] ways, which improved the performance of deep neural networks for natural language processing and computer vision, respectively. With an attention module, a network can selectively focus on important objects or words and ignore irrelevant ones, which makes training and inference more efficient than in a conventional deep network.
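The idea of selectively focusing on relevant inputs can be sketched with scaled dot-product attention, one widely used formulation. This is an illustrative choice and not necessarily the specific temporal or spatial modules of [20] and [21]; the keys, values, and query below are hypothetical. A query is compared with each key, the scores are normalized with a softmax into attention weights, and the output is the weighted sum of the values.

```python
import math

def softmax(scores):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot_product_attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    d = len(query)
    # Similarity between the query and each key, scaled by sqrt(d).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)  # attention distribution over the input items
    # Output: weighted sum of the value vectors.
    context = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
    return weights, context

# Three hypothetical input items; the query matches the first key best.
keys = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
weights, context = dot_product_attention([4.0, 0.0], keys, values)
print([round(w, 2) for w in weights])  # most of the weight goes to the first item
print([round(x, 2) for x in context])  # output is dominated by the first value
```

Irrelevant items are not discarded outright; they simply receive small weights, which keeps the whole operation differentiable so it can be trained with back propagation.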

A conventional machine learning model usually does not retain information from the data it has already processed, whereas biological intelligence is able to maintain such information for a period of time. It is believed that the biological brain has a working memory that retains recent information. The concept of working memory was first introduced in the 1970s on the basis of cognitive experiments [22,23]. Since 1990, researchers have used PET and fMRI to study working memory in biological brains and have found that the prefrontal cortex plays a key role [24–26]. Inspired by this research, AI researchers have attempted to incorporate memory modules into machine learning models. One representative method is LSTM [5], which laid the foundation for many sequential processing tasks, such as natural language processing, video understanding, and time-series analysis. A recent study also showed that a model with a working memory module can perform complicated reasoning and inference tasks, such as finding the shortest path between specified points and inferring missing links in randomly generated graphs [27]. By retaining previous knowledge, a model can also perform one-shot learning, in which a new concept is learned from only one or a few labeled samples [28].
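How an LSTM keeps information over time can be seen in a single cell. The sketch below assumes the widely used LSTM variant with a forget gate (the original 1997 formulation lacked one) and uses a scalar cell for readability; the parameter values are hypothetical and hand-picked so that the cell stores one input event and then holds it.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h, c, p):
    """One time step of a scalar LSTM cell (variant with a forget gate)."""
    f = sigmoid(p["wf"] * x + p["uf"] * h + p["bf"])    # forget gate: how much old memory to keep
    i = sigmoid(p["wi"] * x + p["ui"] * h + p["bi"])    # input gate: how much new content to write
    o = sigmoid(p["wo"] * x + p["uo"] * h + p["bo"])    # output gate: how much memory to expose
    g = math.tanh(p["wg"] * x + p["ug"] * h + p["bg"])  # candidate content
    c_new = f * c + i * g         # memory cell: gated mix of old and new content
    h_new = o * math.tanh(c_new)  # hidden state read out from the cell
    return h_new, c_new

# Hypothetical hand-picked parameters: the forget gate is almost always open,
# and the input gate opens only when x = 1, so the cell stores that event.
p = {k: 0.0 for k in ["wf", "uf", "bf", "wi", "ui", "bi",
                      "wo", "uo", "bo", "wg", "ug", "bg"]}
p.update(bf=5.0, wi=10.0, bi=-5.0, bo=5.0, wg=1.0)

h, c = 0.0, 0.0
cells = []
for x in [1.0, 0.0, 0.0, 0.0, 0.0]:
    h, c = lstm_step(x, h, c, p)
    cells.append(round(c, 3))
print(cells)  # the stored value decays only slightly after the input event
```

In a trained network these parameters are learned rather than set by hand, and the cell state is a vector, but the gating logic is the same.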

Continual learning, the ability to learn a new task without forgetting previous ones, is a basic skill of biological intelligence. How a biological neural system learns multiple tasks at different times is a challenging research topic. The two-photon microscopy technique, introduced in 1990 [29], made it possible to observe the in vivo structures and functions of dendritic spines during learning at the spatial scale of single synapses [30]. Using this imaging technique, researchers in the 2010s studied neocortical plasticity in the brain during continual learning. The results revealed how neural systems remember previous tasks while learning new ones by controlling the growth of dendritic spines [31]. Inspired by these observations, a learning algorithm termed EWC was proposed for deep neural networks. When a new task is learned, this algorithm restricts changes to the network parameters that were important for earlier tasks, so that older knowledge is preserved, thereby making continual learning possible in deep learning [6].
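The core of EWC is a quadratic penalty added to the loss of the new task B: L(θ) = L_B(θ) + Σ_i (λ/2)·F_i·(θ_i − θ*_A,i)², where θ*_A are the parameters learned on task A and F_i is the diagonal Fisher information, which measures how important parameter i was for task A. The sketch below is a toy illustration under stated assumptions: the task-B loss is a hypothetical quadratic, and the Fisher values are hand-picked rather than estimated from data as in [6].

```python
def ewc_finetune(theta_old, fisher, target_new, lam=1.0, lr=0.05, steps=500):
    """Gradient descent on a toy new-task loss plus the EWC penalty.

    Hypothetical new-task loss: sum_i (theta_i - target_i)^2
    EWC penalty:                (lam / 2) * sum_i F_i * (theta_i - theta_old_i)^2
    """
    theta = list(theta_old)  # start from the parameters learned on task A
    for _ in range(steps):
        grad = [
            2.0 * (t - tgt) + lam * f * (t - t_old)
            for t, tgt, f, t_old in zip(theta, target_new, fisher, theta_old)
        ]
        theta = [t - lr * g for t, g in zip(theta, grad)]
    return theta

theta_A = [1.0, 1.0]     # parameters after learning task A
fisher = [10.0, 0.01]    # parameter 0 mattered for task A; parameter 1 barely did
target_B = [-1.0, -1.0]  # optimum of the toy task-B loss

print([round(t, 3) for t in ewc_finetune(theta_A, fisher, target_B)])      # [0.667, -0.99]
print([round(t, 3) for t in ewc_finetune(theta_A, [0.0, 0.0], target_B)])  # [-1.0, -1.0]
```

With the penalty, the important parameter stays close to its task-A value while the unimportant one moves almost freely to the task-B optimum; with zero Fisher values (plain fine-tuning), both parameters are overwritten, which is the catastrophic forgetting that EWC is designed to mitigate.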

Reinforcement learning (RL) is a widely used machine learning framework that has been applied in many settings, such as AlphaGo. It concerns how AI agents take actions in, and interact with, an environment so as to maximize cumulative reward. RL is also strongly related to the biological learning process [32]. One important RL method, and one of the earliest, is temporal-difference learning (TDL). TDL learns by bootstrapping from the current estimate of the value function; that is, it updates the value estimate of a state using the estimated value of the state that follows it. This strategy is similar to second-order conditioning, which has been observed in animals [33].
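Bootstrapping can be illustrated with tabular TD(0), whose update is V(s) ← V(s) + α[r + γV(s′) − V(s)]. The sketch below uses a hypothetical deterministic chain of three states in which only the final transition is rewarded; the step size α, discount γ, and episode count are illustrative assumptions.

```python
def td0_chain(n_states=3, episodes=200, alpha=0.1, gamma=0.9):
    """Tabular TD(0) on a deterministic chain 0 -> 1 -> ... -> terminal.

    Only the final transition (into the terminal state) yields reward 1.
    """
    V = [0.0] * (n_states + 1)  # V[n_states] is the terminal state, kept at 0
    for _ in range(episodes):
        s = 0
        while s < n_states:
            s_next = s + 1
            r = 1.0 if s_next == n_states else 0.0
            td_error = r + gamma * V[s_next] - V[s]  # bootstrapped target minus current estimate
            V[s] += alpha * td_error
            s = s_next
    return V[:n_states]

print([round(v, 3) for v in td0_chain()])  # [0.81, 0.9, 1.0]
```

State 0 is never followed directly by a reward, yet it acquires value because its target includes the learned value of state 1. This chaining of predictions is the parallel the text draws with second-order conditioning, in which a stimulus gains value by predicting another conditioned stimulus rather than the reward itself.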

3. Brain projects

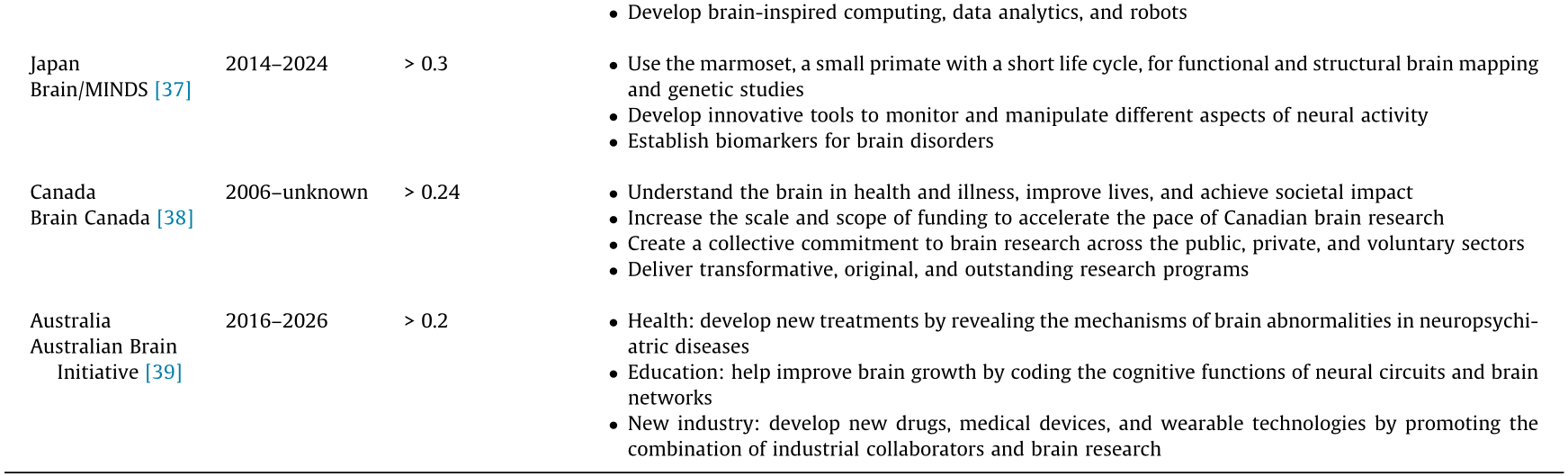

Many countries and regions have launched projects to accelerate brain science research, as shown in Table 1 [34–39]. Despite differences in emphasis and approach, developing the next generation of AI based on discoveries in brain science is a common objective of all these projects. Governments and most scientists seem to have reached a consensus that advancing neural imaging and manipulation techniques can help us explore the working principles of the brain, which will allow us to design better AI architectures, including both hardware and software. Such studies require collaboration among multiple disciplines, including biology, physics, informatics, and chemistry, to enable new discoveries in different aspects.

Table 1 Overview of brain science research projects around the world.

During the past five years, important achievements in brain research have been made with the support of brain research projects. The development of optogenetics has made it possible to manipulate neural activity at single-cell resolution [40]. Large-scale manipulation can further be accomplished using advanced beam-modulation techniques [41,42]. Meanwhile, various methods have been proposed to record large-scale neural activity in three dimensions (3D) [43–45]. The number of neurons that can be recorded simultaneously has increased rapidly from tens to thousands, and may reach millions in the near future as wide-field high-resolution imaging technologies continue to develop [46–48]. Such significant improvements in neurophotonics provide a basis for important discoveries in neuroscience [49,50]. Accordingly, the emphasis of the BRAIN Initiative is expected to shift gradually toward discovery-driven science.

One typical example within the BRAIN Initiative is Machine Intelligence from Cortical Networks (MICrONS), which aims to revolutionize machine learning through neuroscience. With serial-section electron microscopy, complicated neural structures can be reconstructed in 3D at unprecedented resolution [51]. Combined with high-throughput analysis techniques for multiscale data [52,53], such reconstructions make it possible to formulate novel scientific questions that address fundamental neuroscience problems [54]. With this improved understanding, researchers have proposed novel architectures for deep neural networks and have tried to understand the working principles of current architectures [55,56]. In turn, current deep learning techniques can help accelerate the processing of the massive amounts of data that such research generates, thus forming a virtuous circle.

Thanks to technological developments in recent years, it is now possible to observe neural activity at the systems level with unprecedented spatial–temporal resolution. Meanwhile, many large-scale data analysis techniques have been proposed to address the challenges posed by the massive amounts of data that these technologies produce. Following this route, brain projects can greatly accelerate brain research, and as discoveries accumulate, we can develop a better picture of the human brain. There is no doubt that the working principles of the brain will inspire the design of the next generation of AI, just as past discoveries in brain research have inspired today’s AI achievements.

4. Instrumental bridges between brain science and AI

Instrumental observations of the brain have made enormous contributions to the emergence and advancement of AI. Modern neurobiology began with the acquisition of information about microstructures, from the subcellular to the tissue level, and benefited from the invention of microscopy and the selective staining of substances in cells and tissues. The renowned neuroanatomist Santiago Ramón y Cajal was the first to use Golgi staining to observe a large number of nervous-system tissue specimens, and he put forward fundamental theories on neurons and neural signal transduction. Cajal and Golgi shared the Nobel Prize in Physiology or Medicine in 1906. Cajal is now widely known as the father of modern neurobiology.

Our ever-growing understanding of the human brain has benefited from countless advances in neurotechnology, including techniques for manipulating, processing, and acquiring information from neurons, neural systems, and brains, as well as for studying cognitive and behavioral learning. Among these advances, the development of new technologies and instruments for high-quality image acquisition has been a major focus in the past and is expected to attract the most attention in the future. For example, the BRAIN Initiative, launched in the United States in 2013, aims to produce dynamic images of the brain that show the rapid and complex interactions between brain cells and their surrounding neural circuits, and to unveil the multidimensional, intertwined relationships between neural organization and brain function. Such advances are also expected to help us understand how the brain records, processes, applies, stores, and retrieves large amounts of information. In 2017, the BRAIN Initiative sponsored a group of interdisciplinary scientists at Harvard to study the relationship between neural circuits and behavior, mainly by acquiring and processing large datasets of neural systems under various conditions using high-quality imaging.

Traditional neuroscience research mostly uses electrophysiological methods, such as metal electrodes for nerve stimulation and signal acquisition, which offer high sensitivity and high temporal resolution. However, electrophysiology is invasive and unsuitable for long-term observation. In addition, it has low spatial resolution and limited scalability for the parallel observations required to capture brain-wide neural activity at single-neuron resolution. In contrast, optical methods are noninvasive and offer high spatial and temporal resolution as well as high sensitivity. These methods can acquire dynamic and static information on individual neurons, neural activity, and neural interactions, and can extend analyses of the nervous system from the subcellular level potentially to the whole brain. Furthermore, through optogenetics, optical methods have been developed into tools for controlling neural activity at high spatial–temporal resolution.

There is an urgent need to develop technologies and instruments with large fields of view and high spatial–temporal resolution. On the spatial scale, imaging must span from submicron synapses, through neurons tens of microns in size, to brains a few millimeters across. On the temporal scale, the frame acquisition rate should be higher than the response rate of the fluorescent protein probes being used. However, owing to the intrinsic diffraction limit of optical imaging, there is an inherent trade-off among large field of view, high resolution, and large depth of view. High-resolution imaging of single neurons or even smaller features usually cannot simultaneously capture brain tissue features larger than a few millimeters, and dynamic imaging is often accompanied by higher noise. In addition, live and noninvasive imaging for real-time and long-term acquisition is limited to superficial layers because tissue scatters light. Overcoming these bottlenecks to achieve a wide field of view, high spatiotemporal resolution, and large depth of view will be the biggest challenge for microscopic imaging in the coming decade.
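The trade-off between field of view and resolution can be made concrete with a short calculation. The sketch below assumes the Abbe lateral resolution limit d = λ/(2·NA) and Nyquist sampling (two pixels per resolvable distance along each axis); the wavelength, numerical aperture, and field of view are illustrative values, not figures from this article.

```python
def abbe_limit_nm(wavelength_nm: float, numerical_aperture: float) -> float:
    """Abbe lateral resolution limit d = lambda / (2 * NA), in nanometers."""
    return wavelength_nm / (2.0 * numerical_aperture)

def pixels_needed(fov_mm: float, resolution_nm: float) -> float:
    """Pixels needed for a square field of view sampled at the Nyquist rate
    (two pixels per resolvable distance along each axis)."""
    pixel_nm = resolution_nm / 2.0
    per_axis = (fov_mm * 1e6) / pixel_nm  # 1 mm = 1e6 nm
    return per_axis ** 2

# Illustrative values: green emission (~500 nm) and a high-NA objective.
d = abbe_limit_nm(500.0, 1.0)
print(d)  # 250.0

# Pixels per frame to cover a 5 mm x 5 mm field at that resolution.
print(f"{pixels_needed(5.0, d):.2e}")  # 1.60e+09
```

Under these assumptions, a single frame covering a few millimeters at diffraction-limited resolution needs on the order of a billion pixels, far more than a conventional camera sensor typically captures; this is one way to see why wide field of view and high resolution compete in a single imaging system, before depth and frame rate are even considered.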

Exploring the microstructural dimension may lead to a new type of neurocomputing unit, whereas exploring the macrostructural dimension in real time may enable an understanding of trans-brain operations and reveal how the brain makes comprehensive decisions using multiple information sources (auditory, visual, olfactory, tactile, etc.) in complex environments. The ability to explore the whole brain in both the micro- and macro-dimensions in real time will, beyond any doubt, promote the development of the next generation of AI. Therefore, the developmental goal of microscopic imaging instruments is broader, higher, faster, and deeper imaging, from pixels to voxels and from static to dynamic. Such an instrument could establish a direct link between biological macro-cognitive decision-making and the structure and function of neural networks, lay a foundation for revealing the computational essence of cognition and intelligence, and ultimately advance human self-understanding, thereby helping to close the research gap between AI and human intelligence.

Acknowledgements

This work is supported by the Consulting Research Project of the Chinese Academy of Engineering (2019-XZ-9), the National Natural Science Foundation of China (61327902), and the Beijing Municipal Science & Technology Commission (Z181100003118014).

Compliance with ethics guidelines

Jingtao Fan, Lu Fang, Jiamin Wu, Yuchen Guo, and Qionghai Dai declare that they have no conflicts of interest or financial conflicts to disclose.
