
1. Introduction

Although Society 4.0 integrates the web technologies used to acquire and analyze data, without ontology [1] studies a large gap remains between information sharing and the related knowledge. Ontology studies are an essential part of Society 5.0, in which the huge amount of data, merged with environmental and human biological analysis, grows with the rising complexity of the processes required to ensure global sustainability. Moreover, real-time decision-making using big data is becoming increasingly necessary to improve companies’ competitive advantage [2], and the literature demonstrates that artificial intelligence (AI) can be applied to big data processing [3]. Big data analysis can be used to guide predictive innovations with scheduled processes [4–6].

Digital innovation results in a reduction of customer lifetime value [7], which requires flexible manufacturing systems with smart human–machine interaction technologies for non-"digital-native" human resources (HR), that is, people who are not familiar with digital systems [8].

Although these problems have been known for some time [9], many companies are not yet ready, even today, to manage big data with smart analytical tools [10,11], especially in terms of information technology (IT) systems for production lines, where the application of business analytics may seem obvious.

One of the main difficulties in collecting information from automated processes lies in the innovation of production systems, which must respond to market changes by being reconfigured through the integration of new production machines or the adoption of technologies developed by different manufacturers [12]. For example, to reduce the time between an order and the ensuing product shipment [13], mass customization requires fast production with high-quality standards and the implementation of smart systems [6,14,15] between physical space and cyberspace. The analysis of HR habits is therefore mandatory in order to identify user-friendly procedures that ensure quick machine reconfiguration.

In this paper, we propose a new method for managing the lifecycles of highly customized products that does not require specialized training for non-digital-native HR [16–18]. Our method applies a standard protocol to different maintenance activities [19] and evaluates the smart infrastructure design of human–cyber–physical systems (HCPSs).

We applied our method to the production lines of 12 beverage companies (in each case, a production line equipped with an advanced diagnostics (AD) system). These companies were chosen because a change in bottle format does not require difficult software (SW) customization; this reduced the variability and allowed us to focus on the analysis of human activity. The main question addressed in this work is: How can we develop a smart system for the connection between cyberspace and physical space that does not depend on HR training and that is based on customized scheduling, so that it can autonomously manage a huge amount of data with smart infrastructure and applications, thus reducing failures and downtimes? We demonstrate herein that our method makes it possible to reduce HR training and improve productivity.


2. Background

The information and communications technology applied in the manufacturing industry is leading to highly automated, efficient, flexible, and intelligent production systems [20]. These systems require an effective organization of maintenance, which can be obtained with engineering approaches [21] that design cybernetic systems with engineering tools and methods, e.g., those used for digitalization, networking, and AI. More and more companies are employing advanced production systems to maintain their competitive advantage through computer-integrated manufacturing [22]. With this advanced approach, which is used to obtain excellent results in terms of low-cost automated production with high-quality standards, it is possible to design an empirical test for smart organizations.

In this context, it is possible to implement a complete procedure that defines the process parameters based on HR experience and statistical data, in which empirical cases enable node identification in the implemented artificial neural network (common variables related to different processes), defining the cyberspace region of interest (ROI) as an effective option to scale up to Industry 4.0 [14]. For a generic process, the information used to develop new training lessons that prepare HR for abnormal operating conditions (e.g., a machine fault in a manufacturing process) needs to be updated with data on the restoration intervention, and analyzed and processed by AI algorithms to ensure continuous improvement of the system.

Our proposed approach is based on the following five postulates:

(1) A smart society evaluates human needs through AI to implement the best automated operational processes, taking into account problems and stimuli in a humane way, with a structure that can collect and learn people’s habits in the modern digital context.

(2) The automated procedure applied in a specific manufacturing process must improve itself by acquiring empirical information (from real cases), in order to prevent any errors resulting from interactions between humans and machines, to ensure the safety of the provided facilities and to merge operative processes with the smart factory and with global needs.

(3) The analysis of the big data chain can be carried out with a scalable approach, using AD techniques, to adapt it to the processes of different organizations.

(4) Smart infrastructure and applications can be used to automate all management processes up to decision-making and collaborative robot design, thanks to the advancements in AI.

(5) Processes can be autonomously managed by smart self-learning systems, with a human–cyberspace connection through digital applications (SW equipped with a user interface).

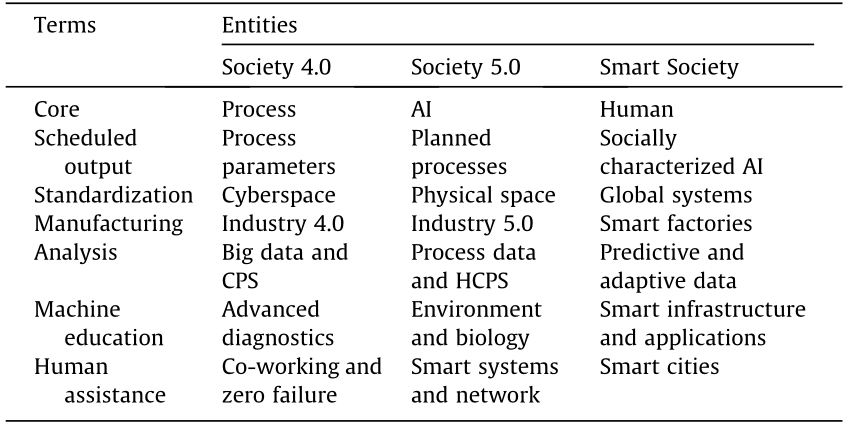

Table 1 summarizes the terminology typically used in the various paradigms of society. Depending on the paradigm, a noun corresponds to different entities. The following three subsections provide an explanation of the postulates related to different societies.

《Table 1》

Table 1 Terms and their related entities of society paradigms.

CPS: cyber–physical system.


2.1. Society 4.0

In Society 4.0, cross-sectional sharing of knowledge and information is difficult, and people access cloud services (databases) in cyberspace via the Internet to retrieve and analyze data. The goal of Industry 4.0, which is based on the concepts and technologies of cyber–physical systems (CPS), the Internet of Things (IoT), and the Internet of Services [23], is to reduce the gap between humans and machines, thus making it possible to easily implement digital frameworks for human needs (e.g., a networked health system) with uniformly distributed components [24]. In this context, human–machine cooperation has undergone a wide range of changes with broad implications for communication, coordination, and collaboration [25], particularly with regard to the implementation of collaborative robots that must respond in a very short time with flexible configuration and adaptation in any operative process.


2.2. Society 5.0

In Society 5.0, AI accumulates information acquired from physical space in cyberspace, making the future analysis of process data possible thanks to the use of HCPSs [20]. HCPSs, which are the new generation of AI, are based on ontology [1] and take into consideration every human–machine interaction. Moreover, this process brings new data to industry and society, also taking environmental and human biological aspects into account, something that was previously impossible. The aim of Society 5.0 is to balance economic advancement with social problem-solving [26], which requires scientists to mimic the structures and processes that can be identified in biological evolution. While Industry 4.0 focuses on adopting different digital/web-based technologies to acquire and monitor data, Industry 5.0 focuses on the geostrategic shift generated by synthetic biology [27]. The idea is to have dynamic cities that are designed around environmental conditions and that use bio-based products, energy, and services with the purpose of realizing zero-failure processes for AI integration in smart cities. The aim is to fit manufacturing processes perfectly to the environment and human needs, and to continuously upgrade the process data, services, and products, along with the smart systems and correlated infrastructures [28]. Moreover, the data scheduling presented herein makes it possible to set up artificial neural networks for decision-making, based on experience from Society 4.0 and on validated parameters in the related physical space.


2.3. Smart society

Smart cities represent a conceptual model of urban development that is based on human, collective, and technological capital exploitation [29]. The notion of smart cities [30] can be extended to smart society; in fact, Society 5.0, along with AI, is able to use standardized processes to evaluate human needs. Thus, a digitally enabled and knowledge-based society must work toward social, environmental, and economic sustainability [14]. Human and social capital is at the heart of developments in smart cities and smart society and requires innovative methods for predictive and adaptive processes. The aim is to design a knowledge-based economy with digital infrastructure that can work together and can enable dynamic real-time interactions between various smart city subsystems [31]. At present, the literature contains dozens of descriptions of what a smart or intelligent city is, although there is no overall agreement [32–35]. At any rate, it can be stated that smart cities are human-centric societies, in which AI selects processes with the goal of obtaining the best conditions for quality of life using smart factories equipped with smart devices (sensors/actuators), programmable logic controllers (PLCs), process management and manufacturing execution systems, enterprise resource planning SW, and HCPS. The idea is that an order can be made anywhere in the network, and the manufacturing processes can be remotely controlled [36] and reorganized with plug-and-produce technology, based on smart infrastructure and ontologies. This will allow the integration, exchange, or replacement of production equipment without specialized HR support for system reconfiguration. In fact, plug-and-produce technology allows the smart reconfiguration and interaction of smart devices connected with the PLC, which enables smart cooperation with other devices [37].


3. Theory and method


3.1. Smart maintenance

Typical maintenance methods include corrective, preventive, predictive, and proactive methods [38]. Corrective methods are unplanned and apply a correction when a random failure occurs. The other three methods are planned and evaluate data from preventive and/or predictive analysis. Preventive maintenance, which increases the exploitation of components, evaluates statistical studies and equipment manuals with the aim of supporting the replacement procedure before faults occur. Predictive maintenance uses sensors for component analysis, data collection, and manufacturing process analysis, using historical trends to progressively reduce problems and thereby increase production efficiency. Proactive maintenance, which is based on an understanding of the problem and its causes, evaluates all relations between the components and the fluids or lubricants, schedules each possible problem, and implements continuous improvement.

The "smart maintenance" proposed herein is a human-centric approach that evaluates the relation between new machines/components and HR, taking into consideration human habits and the related knowledge level. The aim is to improve proactive maintenance by implementing a new human–machine training approach with autonomous systems (cf. HCPSs) for AD and with zero-failure analysis that progressively upgrades the cyberspace supporting facility management.


3.2. The proposed global standard method

Human habits and operational activities can differ for each manufacturing system (depending on environmental conditions, available resources, network, infrastructure, devices, etc.); accordingly, the ROI in each human–machine interaction and a proposal of the operational activity with a high potential for success in terms of quality, sustainability, and efficiency must be defined.

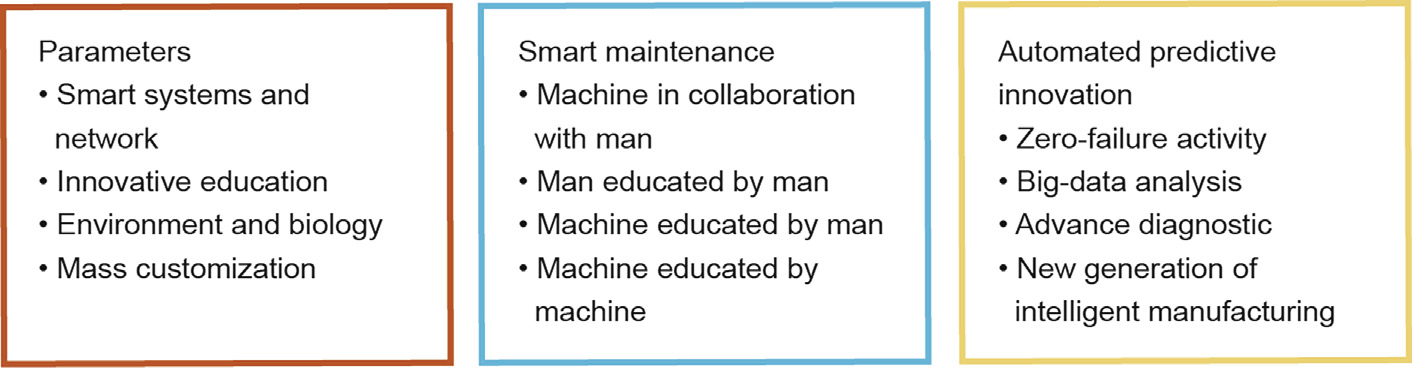

The global standard method for Society 5.0 (GSM5) proposed herein (Fig. 1) is able to improve itself over time and can optimize the operation and maintenance (O&M) process using predictive analysis.

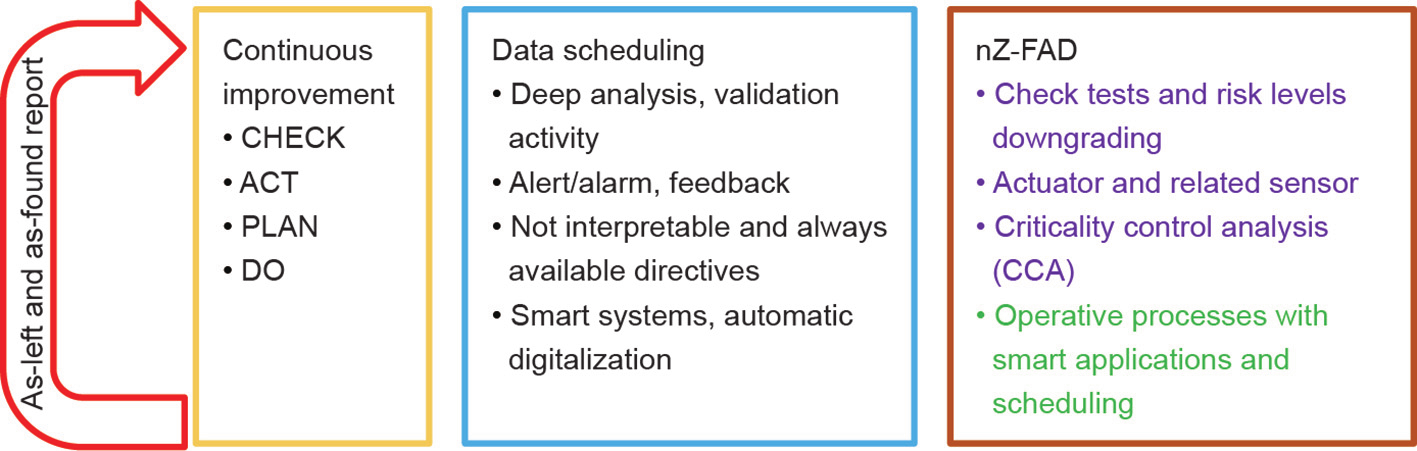

《Fig. 1》

Fig. 1. The GSM5 and the operative management approach for human–cyber–physical space. Brown box: physical space in Society 5.0: environmental resources, smart systems and networks for coherent timing definition, innovative education for the operational effectiveness of roles, and mass customization targets. Blue box: smart maintenance scheduling and HCPS information data flow. Yellow box: macro areas for cyberspace scheduling of operative management.

The aim of the GSM5 is the development of production and support processes [39] to enable a multicore circular system that provides the right information at all operational management levels [40], thus ensuring complete scheduling for AI [16,41]. Dedicated smart systems, networks [42], and applications [43] provide the basis for innovative education, and analysis of the environment of a typical smart factory within Society 5.0 [44,45] is mandatory in order to enable contextualized mass customization [13].

The systems for training and scheduling (i.e., smart maintenance) are structured through the big data chain, and a process design enables the continuous improvement of a typical smart city within Society 5.0 along with cross-company activity for autonomous adaptation. The data obtained from the installed machines (i.e., from machines in collaboration with humans) are used by humans in innovative education (i.e., humans educated by humans) to predict and plan future maintenance activity. Nowadays, environmental problems and human needs require increasingly sustainable processes, and these define the available resources and manufacturing limitations (i.e., machines educated by humans), in order to ensure global scaling (i.e., machines educated by machines) and to reduce both time and waste.

The data scheduling [46] with smart applications, which starts from a new operative process implementation, evaluates the interactions between humans and machines in the related macro areas: zero failure, big data chain, AD, and new generation of intelligent manufacturing (NGIM). The structured cyberspace supports the mass customization processes with the NGIM and their zero-failure integration in the selected environment with the available biological resources. The information is shared with the smart systems and network in real time, enabling innovative education and the related data analysis and AD.

Thus, starting from future outcomes contextualized in the specific environment, in order to obtain simple, validated, and scalable demo procedures from cyberspace to the physical space, all operative processes need a complete big data analysis to set up and develop procedures that reduce both failures and waste.

Space is the boundless three-dimensional extent in which objects and events have relative position and direction. The human–cyber–physical space analyzes both HCPS (objects and events) and the operative processes (position and direction).

The connection between cyberspace and physical space requires hardware (HW) and SW that can digitalize and elaborate, by means of HCPS through ontologies, the interaction between humans and machines. The creation of an autonomous cyberspace with the related data scheduling management process enables a simulation of the NGIM operative processes based on the parameters of Society 5.0.

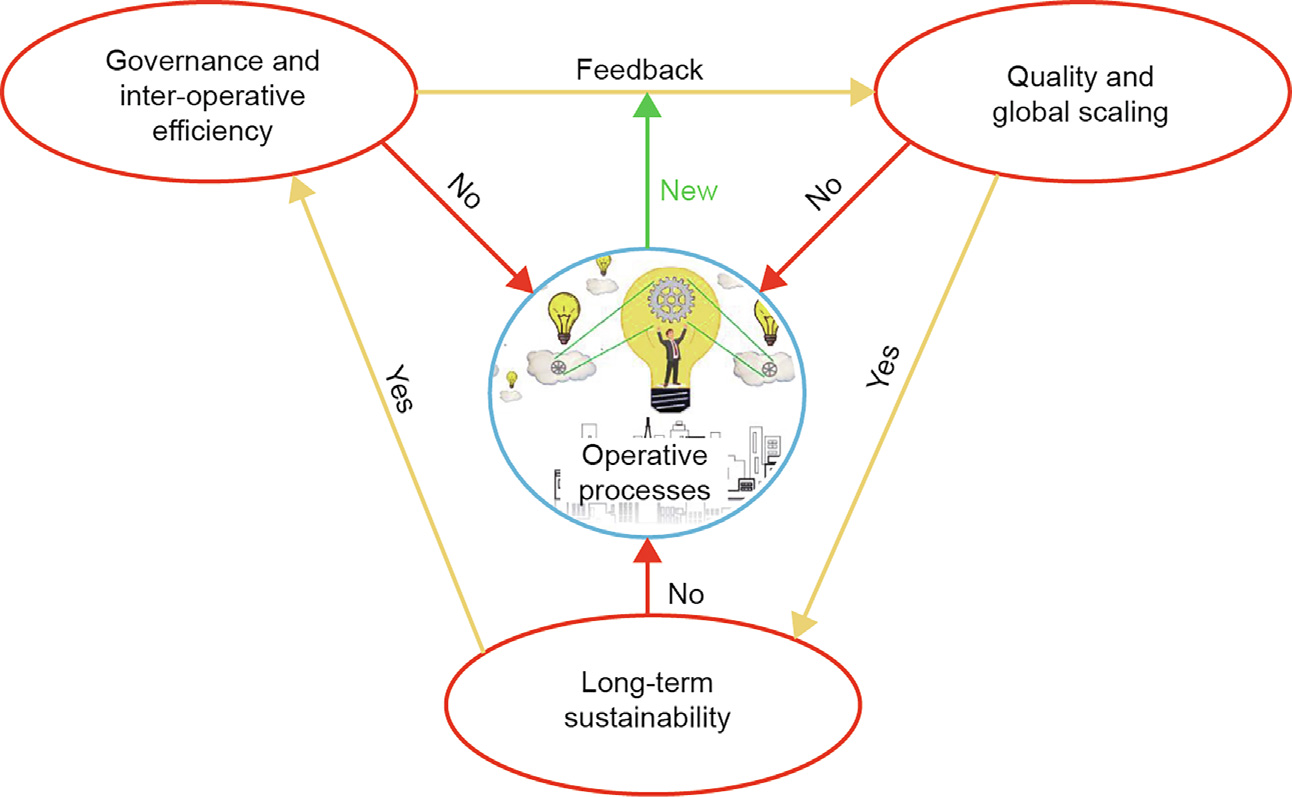

The automated predictive innovation is based on the schematic diagram in Fig. 2, which shows how this goal can be achieved. The operative processes, which support the facility management, define: goal, resources, rules, and timing with the available HW (ROI).

《Fig. 2》

Fig. 2. A predictive innovation diagram for the operative processes. Operative processes: deep learning and ideas. Quality and global scaling: applicability and geolocalization. Long-term sustainability: added values and the relation between benefits and costs. Governance and inter-operative efficiency: real applicability and management directive. New: there is a problem, and the possible solution (technology, scheduling phases, and cyberspace scheduling) has to be tested. Continuous improvement data flow (yellow and red arrows): Yes: the new process improves the state of the art; Feedback: cyberspace scheduling; No: the proposed solution does not respect the guidelines.

The operative processes require human customization through the implementation of empirical demo cases, in order to point out inefficiencies or changes that should be made in the human–machine interaction. Starting from an evaluation of the operational processes, which can reduce the number of problems and ensure efficiency, high quality, and sustainability, the whole big data chain must be explored for any specific environment and related resources (e.g., e-business is sustainable and reduces waste, but Internet access must be available). Thus, it is necessary to customize the functional AD and the smart applications that are used to provide feedback for feasibility and zero failure, merging human habits with the production performance data and identifying the available choices in terms of the probability of the mission’s success (e.g., waste reduction, increased production). Moreover, the circulating system must be able to improve autonomously by identifying the actions that must be taken in order for predictive innovation to occur. This is done by using the digital structure of the cyberspace, where the big data outputs enable the AD, while zero-failure tracking enables the feasibility analysis. Corporate governance generates feedback that is useful for pursuing process quality, and the potential for global scaling and sustainability is evaluated from economic, environmental, and social perspectives. At each step, the target can be missed, and a revision of the operative processes may be needed to improve the system and restore the desired loop. Management levels (strategic, tactical, and operational) that implement continuous improvement will analyze and store the empirical results, making it possible to create operative tutorials for fixing machine failures.
Moreover, the machine history is needed to allow the artificial neural network (nodes) to obtain increasingly accurate predictions of failures and to plan maintenance, in order to reduce interventions and unexpected/unwanted downtimes as much as possible (i.e., ideally, to reduce interventions to near zero), aside from the annual planned maintenance. Thus, the smart maintenance system proposes appropriate service, and the feedback of the product management generates a customized AD algorithm. The efficiency of this approach is continuously updated with near-zero-failure advanced diagnostic (nZ-FAD) processes that ensure continuous improvement.

Our goal is to obtain an automatic self-restoring process. Therefore, we take the example of building information modeling (BIM) processes, in order to design a "smart" protocol for smart factories, Society 5.0, Industry 5.0, and smart cities. This allows us to explore some empirical cases that can provide efficiency feedback for AI raw data and scheduling design.


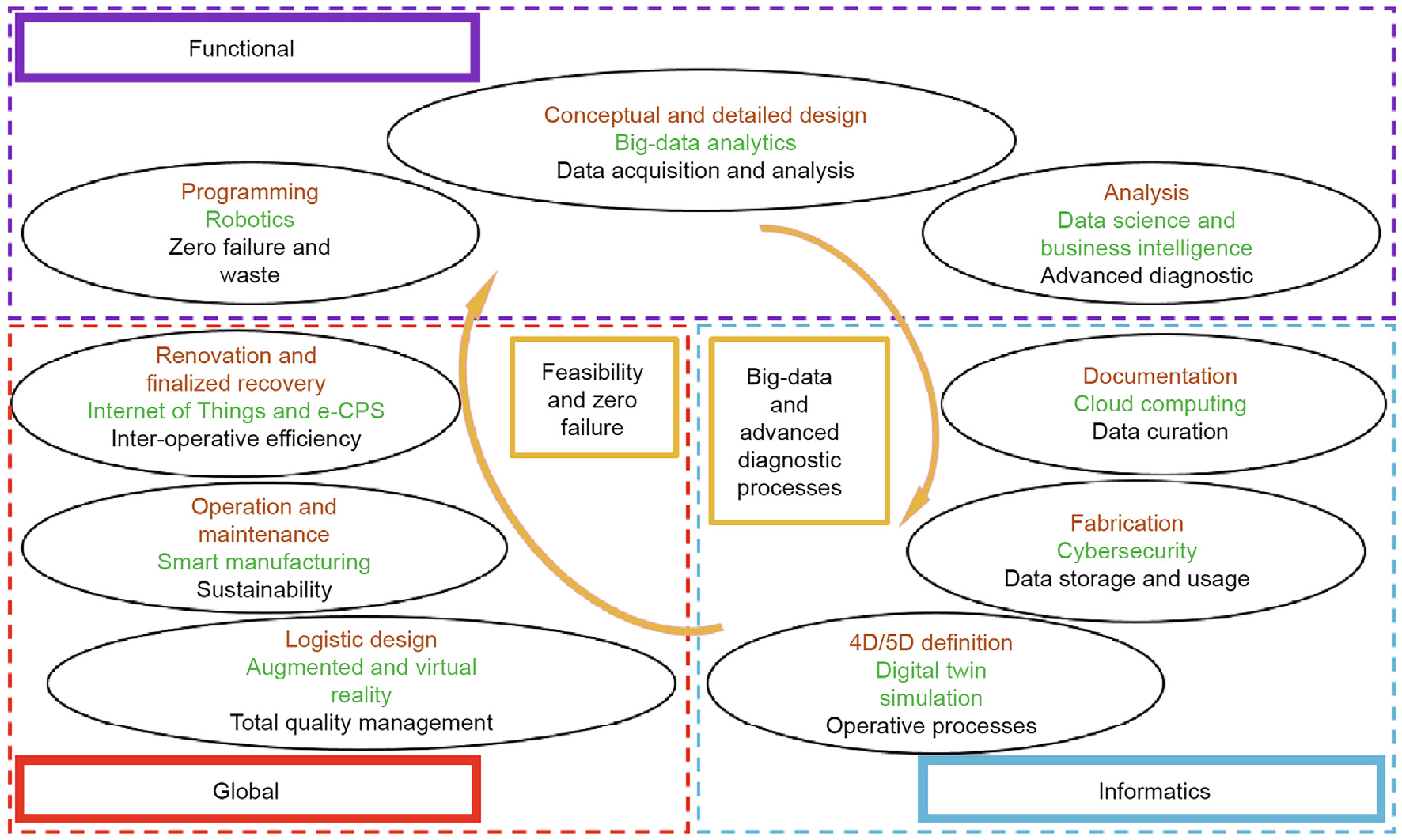

4. Information data flow: Management and operative processes

In this study, in order to collect the data in a smart way, we referred to a logical model that is commonly used for big data processing and management [47], which integrates all the aforementioned technologies. Data analysis highlights the results of previous experiences in combination with simulation methods [48]. The structure aims to correlate the long-term algorithms, identify the enabling factors, and evaluate their impact on costs, risks, and operative processes (Fig. 3). The cyber–physical space and the related scheduling (Fig. 4) must be updated to ensure efficiency, quality, and sustainability through a user-friendly system [49]; information and notifications must be continually available, confidential, and complete.

《Fig. 3》

Fig. 3. Functional process management and its operative flow diagram.

《Fig. 4》

Fig. 4. Operative macro areas in cyber–physical space (functional, informatics, and global) and system information scheduling for automated predictive innovation. Orange: BIM; green: Industry 4.0 technology; black: scheduling phases; yellow: cyberspace scheduling.

The target is to achieve continual automated innovation of the operational processes, which will establish a basis for appropriate supply chain management [50] with predictive algorithms [51]. This case study makes it possible to explore the concept of BIM, which involves the generation and management of digital representations of the physical and functional characteristics of structures and areas in several dimensions [52]. In this context, Industry 4.0 provides all the required technology to support human–machine interaction, with the functional aim of reducing costs and increasing the control of automated processes. At this point, it is essential to identify the operative macro areas for scheduling and the correlated phases.

The operators and agents in the functional area customize the AD in order to acquire sufficient data volume and quality to ensure zero failure and zero waste. The part of the AI system that deals with informatics must ensure feasibility by providing all the necessary data for the prediction and simulation of the operative processes. Specialists define the global area directives, which start from the operative processes and describe the quality, sustainability, and feasibility, scalable to any global context, for future AI training and data scheduling. After that, it is possible to connect the physical space with the cyberspace to acquire the process data.


4.1. Dataset and alert/alarm messages

Optimized maintenance is essential to improve performance [53–56]; in the manufacturing systems, alert and/or alarm messages are used to connect the cyberspace and physical space and to show what is needed to improve the AD (Fig. 5).

《Fig. 5》

Fig. 5. Continuous improvement (yellow box and red arrow) data scheduling design (blue box) for zero-failure operative processes and cross-sectional analysis (brown box). CHECK: mobile application for the zero-failure data management. ACT: digital library and devices for problems and the display and analysis of downtime events. PLAN: cloud-based structure for product/service long-term management and real-time digital assistance. DO: automated production lines with digital assistance and operative processes validation. Violet bullets: micro-functional areas. Green bullet: smart maintenance operative processes.

After simulating an enormous number of real-life contexts (ACT), the AD system records the protocol number, type of failure, and its duration (PLAN), alerting the systems to undertake materials recovery and operative management. These smart systems are very useful for testing different types of smart applications (DO), and supervisors can control and evaluate the HR in real time to enable continuous improvement and zero-failure procedures (CHECK). Thus, we called the proposed system nZ-FAD, shifting from "fail-and-fix" practices to a "predict-and-prevent" methodology.
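The PLAN step records, for each downtime event, the protocol number, the type of failure, and its duration. As a hedged illustration (the record layout and failure labels are assumptions, not the AD system's actual schema), the Python sketch below stores such events and ranks failure types by total downtime, the kind of summary that the CHECK and ACT steps could use to prioritize interventions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class DowntimeEvent:
    protocol_number: str  # identifier recorded by the AD system (format hypothetical)
    failure_type: str     # failure category label (labels hypothetical)
    duration_min: float   # downtime duration in minutes


def downtime_by_type(events):
    """Return (failure_type, event_count, total_minutes), largest total downtime first."""
    totals = defaultdict(lambda: [0, 0.0])
    for e in events:
        totals[e.failure_type][0] += 1
        totals[e.failure_type][1] += e.duration_min
    rows = [(ftype, count, minutes) for ftype, (count, minutes) in totals.items()]
    return sorted(rows, key=lambda row: row[2], reverse=True)


events = [
    DowntimeEvent("P-001", "sensor", 10),
    DowntimeEvent("P-002", "actuator", 30),
    DowntimeEvent("P-003", "sensor", 5),
]
print(downtime_by_type(events))
```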

The proactive maintenance approach makes it possible to detect any direct or indirect faults before they occur (i.e., prediction). When a failure trend is identified, an analysis that takes HR habits into account and focuses on the interaction between humans and machines to enhance safety and comfort (i.e., ergonomics) leads to the proposal of documentation and devices dedicated to quickly restoring the normal activity of the automated manufacturing system, in order to reduce or avoid forced downtimes (i.e., prevention).
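The prediction step hinges on recognizing a failure trend. The paper does not state the detection rule; a minimal Python sketch, assuming a simple comparison of alarm counts in consecutive windows with a hypothetical 7-day window and a factor of 1.5, could flag a component whose alarm frequency is increasing.

```python
from datetime import datetime, timedelta


def failure_trend_rising(alarm_times, now, window=timedelta(days=7), factor=1.5):
    """Flag a rising failure trend for one component.

    Hypothetical rule: the number of alarms in the latest window must exceed
    `factor` times the count in the preceding window (using at least 1 as baseline).
    """
    recent = sum(1 for t in alarm_times if now - window < t <= now)
    previous = sum(1 for t in alarm_times if now - 2 * window < t <= now - window)
    return recent > factor * max(previous, 1)


now = datetime(2024, 1, 15)
alarms = [datetime(2024, 1, d) for d in (3, 5, 9, 10, 12, 14)]
print(failure_trend_rising(alarms, now))  # 4 recent vs 2 previous alarms
```

The baseline of at least one alarm avoids flagging a single isolated event on a previously quiet component.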

In this context, four types of machine alarms were classified for criticality control analysis (CCA): warning, inspector, operator, and supervisor alarms. For the zero-failure process, the system identifies the alarm according to the critical problem and sends out notifications or pictures (of the as-left or as-found component) to inform the HR about the condition of the machine. Once the parts involved in the failure have been identified, the actuator can be downgraded to a normal level, as can the sensor that gave the failure alarm.
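The CCA classification can be represented as a small data model. The Python sketch below is illustrative only: the four class names come from the text, while the notification mapping, the field names, and the rule for returning an alarm to the normal level are assumptions.

```python
from dataclasses import dataclass, field
from enum import Enum


class AlarmClass(Enum):
    """The four CCA alarm types named in the text."""
    WARNING = "warning"
    INSPECTOR = "inspector"
    OPERATOR = "operator"
    SUPERVISOR = "supervisor"


@dataclass
class Alarm:
    component_id: str                           # hypothetical component identifier
    alarm_class: AlarmClass
    photos: list = field(default_factory=list)  # as-found / as-left pictures
    active: bool = True


def recipients(alarm):
    """Roles to notify. Assumed mapping: warnings are shown on the HMI only;
    every other class notifies the role it is named after."""
    if alarm.alarm_class is AlarmClass.WARNING:
        return []
    return [alarm.alarm_class.value]


def downgrade(alarm, identified_parts):
    """Return the alarm to the normal level once the failed parts are identified."""
    if identified_parts:
        alarm.active = False
    return alarm.active
```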


4.2. Process data flow: Functionalization and customization

The schematic diagram in Fig. 6 shows the architecture of the IT system applied to the proposed method. The informatics systems are based on a Windows application that stores and processes data from the production line, monitored by sensors connected to a PLC.

《Fig. 6》

Fig. 6. System architecture for the O&M optimization of production with HCPS. Green box: global target identification; blue box: available resources for the informatics improvement activity; brown box: roles; yellow box: improvement phases for timing and cyberspace scheduling.

When an alarm occurs, the applications gather the information from the machine’s PLC through human–machine interface (HMI)-encrypted notifications (.txt format) and provide it to the user. The information transferred by the notifications is summarized in the alert/alarm message through a functional protocol named the hardware-component identification protocol (H-CIP), which reports the human–machine interaction [57], the time between the fault and the intervention, and the identification of the possible risks. The data available on the HMI are stored in a dedicated cloud [58], which shares the information (e.g., HW node configuration, node or component, and module) with the IT system (e.g., mobile application for maintenance, management SW, etc.).
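The paper does not publish the format of the HMI notification files. Purely as an illustration, the Python sketch below assumes an already-decrypted, hypothetical one-line `time;node;component;module;risk` layout and derives one of the quantities reported by the H-CIP, the time between the fault and the intervention.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class HcipMessage:
    fault_time: datetime
    node: str       # HW node in the PLC configuration
    component: str
    module: str
    risk: str       # identified risk, free text


def parse_notification(line):
    """Parse one decrypted line in the hypothetical 'time;node;component;module;risk' layout."""
    ts, node, component, module, risk = (f.strip() for f in line.split(";"))
    return HcipMessage(datetime.fromisoformat(ts), node, component, module, risk)


def response_minutes(msg, intervention_time):
    """Minutes elapsed between the fault and the start of the intervention."""
    return (intervention_time - msg.fault_time).total_seconds() / 60


msg = parse_notification("2024-03-01T08:15:00; N2; capper; M4; pinch hazard")
print(msg.component, response_minutes(msg, datetime(2024, 3, 1, 8, 40)))
```

A line with the wrong number of fields raises `ValueError` during unpacking, which keeps malformed notifications out of the cloud store.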

The operation center defines the targets and the resources with the aim of developing the nZ-FAD based on long-term data analysis for advanced product/service control. The selected parameters will determine the NGIM performance and the related roles and timing, based on the mission and the typical use of the delocalized automated machine. To meet the requirements for O&M optimization, two ad hoc Android applications (supervisor application (SVA) and maintainer application (MNA)) were developed using MIT App Inventor 2; this allowed quick and reliable development of human interface applications without paying for installation and/or a distribution service on the HW (e.g., smartphones and tablets). The database collects all components and the related criticality analysis data from the delocalized machine and the management levels, working bilaterally with the support system to define the operative procedure that is useful in the SW design. Thus, all types of problems and procedures are analyzed with the aim of implementing the functional HR directive.

The Industry 5.0 context allows easier and more effective customization of the digital systems dedicated to data gathering and management and of the tools for assisted decisions. Once the Android application for the supervisor, named SVA, receives an alarm, it asks the supervisor to take a picture of the machine or part that is failing and to record notes describing the state of the machine and the environment (e.g., workplace safety hazards), providing a point of view based on the supervisor’s previous experience. The information acquired by the supervisor at this stage is processed by the application to evaluate how the machine can be repaired, following instructions called one-point lessons (OPLs). The OPLs merge the component information provided by the operator via the related identification protocol with the analysis associated with the inspector alarm, in order to reduce the failure risks involved in implementing continuous improvement between the sensors and actuators. When a fault occurs, the AD algorithm displays the alert on the MNA and supports the maintenance activity step by step with the OPL, in order to reduce the need for outsourced support. Moreover, the AD takes into account the necessary skills (i.e., mechanical, electrical, electronic, SW, etc.) to organize the collaborators, who are ranked based on availability and some significant parameters (e.g., the time for the repair and the guaranteed quality). The AD also considers human experience and runs an artificial neural routine (a trend analysis based on the historical data of real cases to obtain the probability of mission success) to choose the maintainers (i.e., the best-suited specialized technicians from the list of those available in the company) to whom the repair will be assigned. A supervisor manages the restoration activities, and the support system assists him or her during these phases.

When the supervisor ends this description and selection step, a request with photos and notes is sent to the chosen maintainer, who carries out the maintenance while having access to the alarms stored in the cloud using the MNA Android application. Once the correct number of validated operative processes is acquired, the collaborative robots can be progressively improved for automated maintenance activity.
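The maintainer-selection step described above can be illustrated with a simplified ranking. The paper uses an artificial neural routine to estimate the probability of mission success; the Python sketch below plainly substitutes a Laplace-smoothed historical success rate, and all field names are hypothetical. It keeps only available technicians with the required skill, then ranks them by estimated success probability and, on ties, by shorter mean repair time.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Technician:
    name: str
    skills: frozenset       # e.g. {"mechanical", "electrical"}
    available: bool
    successes: int          # past repairs of this fault class that succeeded
    attempts: int           # past repairs of this fault class attempted
    mean_repair_min: float


def success_probability(t):
    """Laplace-smoothed success rate (s + 1) / (n + 2); staff with no history start at 0.5."""
    return (t.successes + 1) / (t.attempts + 2)


def rank_maintainers(candidates, required_skill):
    """Eligible technicians, best estimated success first, faster repairs breaking ties."""
    eligible = [t for t in candidates if t.available and required_skill in t.skills]
    return sorted(eligible, key=lambda t: (-success_probability(t), t.mean_repair_min))


team = [
    Technician("A", frozenset({"electrical"}), True, 8, 10, 40.0),
    Technician("B", frozenset({"electrical", "mechanical"}), True, 3, 3, 25.0),
    Technician("C", frozenset({"electrical"}), False, 10, 10, 20.0),
    Technician("D", frozenset({"mechanical"}), True, 5, 5, 15.0),
]
print([t.name for t in rank_maintainers(team, "electrical")])
```

Smoothing keeps a technician with one lucky repair from outranking one with a long, mostly successful record.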


5. Data evaluation approach (big data value chain)

In order to implement the nZ-FAD for automatic O&M, a preliminary analysis was carried out following the well-known big data value chain analysis [59].


5.1. Data acquisition

The implementation of flexible and modular systems requires standardized data scheduling. Our aim was to provide a data structure (library) for the machine messages of interest, which must be defined in order to better explain the data collection for later use. The HMI recording and display related to the production line components were developed for two macro areas: H-CIP and CCA.

The database was developed by analyzing each HW and SW device and digitalizing the related functional code (cf. H-CIP) based on the typical questions addressed in maintenance: "What has happened?" and "What/How to do?". The H-CIP shows "What has happened?" on the production line by answering "Where is the problem?" for fast geo-localization, "Why has it happened?" considering factors related to the HW and its related risks, and "Who can restore it?" with all additional information on the functional use of the device and its replacement. To provide the necessary digital support, skilled HR prepare a tutorial for fast component replacement based on previous experience and the CCA. The CCA enables quick problem evaluation by providing the necessary information about "What to do?" and by identifying three sub-records: the means description, "Which devices and tools to use?"; the priorities, "When to do it?"; and the operating activities that are necessary to better repair, improve, and eventually recycle the component, "How to do it Well?". In this case, the analysis focused on a suitable record layout for artificial neural networks, resulting in a model for data analysis in which a unique identity document (ID) was related to each micro-functional area linked to the related operative processes (Table 2).

《Table 2》

Table 2 The “8 Ws” (or “7 Ws 1H”) for partitioning the process areas of the near-zero-failure process: What has happened? Where is the problem? Why has it happened? Who can restore it? What to do? Which devices and tools to use? When to do it? How to do it Well?

Thus, we implemented a scheduling based on a few basic, circumstantial human questions that since ancient times have been central to formulating any kind of hypothesis or demonstration, and therefore to the problem-solving stages (finding, shaping, and solving).

The human brain can be regarded as a processor that, once it has received stimuli (alert/alarm), determines their meaning (“What has happened?”), the possible causes (“Why has it happened?”), and the related context (“Where is the problem?”), and finally produces a solution. The same approach (identifying the problem, its causes and location, and the person who can implement the solution) was used for the smart maintenance operative processes to provide the basic operative instructions (“What to do?” and “How to do it Well?”) for restoring normal activity, given the type of subject and its functionality (“Which devices and tools to use?”) and the importance of the problem (“When to do it?”).
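The eight questions of Table 2 can be pictured as the fields of one record per micro-functional area. The following Python sketch is a hypothetical illustration of such a record, not the authors' library: the class name `MaintenanceRecord`, the field names, the integer priority scale, and the example values are all assumptions. The first four fields correspond to H-CIP and the last four to CCA.

```python
from __future__ import annotations

from dataclasses import asdict, dataclass

# Hypothetical record layout: one entry per micro-functional area, keyed by a
# unique ID. The first four questions belong to H-CIP, the last four to CCA.
H_CIP_FIELDS = ("what_happened", "where", "why", "who")
CCA_FIELDS = ("what_to_do", "which_tools", "when", "how_well")


@dataclass(frozen=True)
class MaintenanceRecord:
    record_id: str                # unique ID of the micro-functional area
    what_happened: str            # "What has happened?"
    where: str                    # "Where is the problem?" (geo-localization)
    why: str                      # "Why has it happened?" (HW factors, risks)
    who: str                      # "Who can restore it?"
    what_to_do: str               # "What to do?"
    which_tools: tuple[str, ...]  # "Which devices and tools to use?"
    when: int                     # "When to do it?" (assumed: 1 = most urgent)
    how_well: str                 # "How to do it Well?" (repair, improve, recycle)

    def h_cip(self) -> dict:
        """Return only the H-CIP part of the record."""
        data = asdict(self)
        return {k: data[k] for k in H_CIP_FIELDS}

    def cca(self) -> dict:
        """Return only the CCA part of the record."""
        data = asdict(self)
        return {k: data[k] for k in CCA_FIELDS}


# Usage with invented example values.
rec = MaintenanceRecord(
    record_id="ST01-MF03",
    what_happened="Capper torque fault",
    where="Station 1, capping unit",
    why="Worn clutch",
    who="Line operator",
    what_to_do="Replace clutch",
    which_tools=("torque wrench",),
    when=1,
    how_well="Follow the OPL; send the old clutch to recycling",
)
print(rec.h_cip()["where"])  # Station 1, capping unit
```

Keeping the record immutable (`frozen=True`) reflects the idea that each ID is a stable reference point to which OPLs and alarms can be linked.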

《5.2. Data analysis》

5.2. Data analysis

The objective was to make the acquired raw data amenable to use in decision-making or for other specific purposes (e.g., literature references, qualitative impacts, phenomenological analysis of symptoms, risk prevention, recommended renovation activities, operating impacts, and preferential disposal processes). Each error string was matched with a unique ID, and a dedicated file was created containing the instructions needed to resolve the fault that triggered the alarm. The main goal was to provide, at the operator level, a method for restarting each machine stopped by a failure without special interventions requiring dedicated HR. This reduces plant downtime, the cost of unsold products, and the cost of human activities (whose durations vary and are difficult to estimate). For these reasons, a guide was created for each problem. In other words, the data analysis leads to an OPL for each type of fault, written so that the operators working on the machine can use it efficiently when the fault occurs.
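The matching of each error string to a unique ID and a dedicated OPL file can be sketched as a small registry. This is a minimal Python sketch under stated assumptions: the class `OplRegistry`, the whitespace and case normalization, the sequential `ALM0001` ID scheme, and the `opl/<ID>.pdf` layout are hypothetical and not taken from the described system.

```python
from __future__ import annotations

from pathlib import PurePosixPath


class OplRegistry:
    """Hypothetical registry: error string -> unique alarm ID -> OPL file path."""

    def __init__(self, opl_root: str = "opl") -> None:
        self.opl_root = PurePosixPath(opl_root)
        self._ids: dict[str, str] = {}

    @staticmethod
    def normalize(error: str) -> str:
        # Collapse whitespace and ignore case so that trivially different
        # renderings of the same PLC message share one ID.
        return " ".join(error.split()).lower()

    def register(self, error: str) -> str:
        """Return the ID of an error string, assigning the next one if new."""
        key = self.normalize(error)
        if key not in self._ids:
            # Assumed ID scheme: sequential and zero-padded.
            self._ids[key] = f"ALM{len(self._ids) + 1:04d}"
        return self._ids[key]

    def opl_path(self, error: str) -> PurePosixPath:
        """Path of the OPL file with the instructions for this fault."""
        return self.opl_root / f"{self.register(error)}.pdf"


registry = OplRegistry()
print(registry.register("E042  Labeler  jam"))        # ALM0001
print(registry.register("e042 labeler jam"))          # ALM0001 (same fault)
print(registry.opl_path("E107 Filler low pressure"))  # opl/ALM0002.pdf
```

Sequential IDs avoid the collision risk of truncated hashes; a production system would also need to persist the mapping so that IDs stay stable across restarts.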

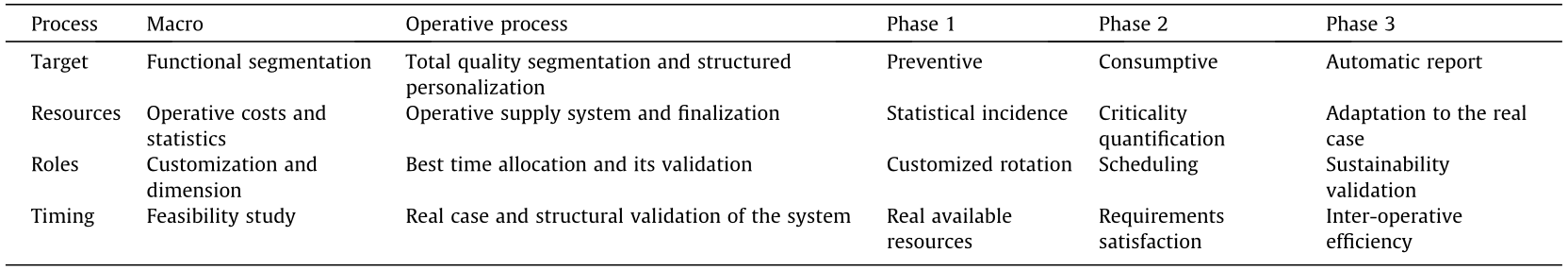

To functionalize the method in a specific environment, and given that people have different habits [60], scheduling with high inter-operative efficiency is mandatory. An example of data analysis implementation is shown in Table 3.

《Table 3》

Table 3 Data analysis diagram for high interoperability efficiency and related phases.

The process is progressively structured through a dedicated macro, starting from the definition of the target and the evaluation of the available resources, up to the identification of the key roles and the correct timing. This makes it possible to start the simulation and test phases (Phases 1 and 2, respectively) and then to plan the automation of the operational process (Phase 3).
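The progressive structure described above (target, resources, key roles, timing, then Phases 1 to 3) can be read as an ordered checklist in which a step opens only when all previous steps are complete. The Python sketch below illustrates that ordering; the step names are paraphrased from the text, and the strict gating rule is an assumption about how such a macro might behave, not the authors' implementation.

```python
from __future__ import annotations

from enum import IntEnum


class Step(IntEnum):
    # Preparatory steps of the macro, followed by Phases 1-3 from the text.
    DEFINE_TARGET = 1
    EVALUATE_RESOURCES = 2
    IDENTIFY_KEY_ROLES = 3
    SET_TIMING = 4
    PHASE1_SIMULATION = 5
    PHASE2_TEST = 6
    PHASE3_PLAN_AUTOMATION = 7


def can_start(step: Step, completed: set[Step]) -> bool:
    """Assumed rule: a step may start only once every earlier step is completed."""
    return all(s in completed for s in Step if s < step)


def next_step(completed: set[Step]) -> Step | None:
    """Return the first step not yet completed, or None when all are done."""
    for step in Step:  # IntEnum iterates in definition order
        if step not in completed:
            return step
    return None


done = {Step.DEFINE_TARGET, Step.EVALUATE_RESOURCES}
print(next_step(done).name)                     # IDENTIFY_KEY_ROLES
print(can_start(Step.PHASE1_SIMULATION, done))  # False
```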

《5.3. Data curation, storage, and usage》

5.3. Data curation, storage, and usage

Above all, the data must be processed to avoid duplication, alongside other actions such as selection, classification, and validation [61]. Together, these actions ensure that the data are trustworthy, discoverable, accessible, reusable, and fit for purpose. However, access to all data at different industrial levels typically remains environment-dependent [62].
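As an illustration of deduplication, selection, and validation applied to alarm records, the following Python sketch drops exact repeats of the same alarm and rejects records with missing fields or IDs that are not in the library. The record fields (`alarm_id`, `timestamp`, `machine`) and the validity rules are assumptions made for illustration only.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class AlarmRecord:
    alarm_id: str
    timestamp: str  # ISO 8601 string, e.g. "2021-03-01T08:15:00"
    machine: str


def curate(
    records: list[AlarmRecord], known_ids: set[str]
) -> tuple[list[AlarmRecord], list[AlarmRecord]]:
    """Deduplicate and validate alarm records.

    Returns (accepted, rejected); both keep first-seen order.
    """
    seen: set[AlarmRecord] = set()
    accepted: list[AlarmRecord] = []
    rejected: list[AlarmRecord] = []
    for rec in records:
        if rec in seen:
            continue  # exact duplicate: drop it
        seen.add(rec)
        # Assumed validation: every field non-empty and the ID known to the library.
        if rec.alarm_id and rec.timestamp and rec.machine and rec.alarm_id in known_ids:
            accepted.append(rec)
        else:
            rejected.append(rec)
    return accepted, rejected


raw = [
    AlarmRecord("ALM0001", "2021-03-01T08:15:00", "filler"),
    AlarmRecord("ALM0001", "2021-03-01T08:15:00", "filler"),  # duplicate
    AlarmRecord("ALM9999", "2021-03-01T08:20:00", "capper"),  # unknown ID
    AlarmRecord("ALM0002", "", "labeler"),                    # missing time
]
accepted, rejected = curate(raw, known_ids={"ALM0001", "ALM0002"})
print(len(accepted), len(rejected))  # 1 2
```

Keeping rejected records instead of discarding them lets an operator review why a record failed validation.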

The solution adopted here is ready to use and relies on a public cloud computing platform that ensures interoperability, scalability, and flexibility [63]. This choice was made because a shared public cloud lowers costs, even though an expensive redundant storage system is needed to ensure a reliable service that also supports disaster recovery (i.e., a service capable of preventing man-made and natural catastrophic failures from causing expensive system disruptions). This study aims to establish a basis for future implementations that can manage all types of alarms without the intervention of external HR [64], thereby reducing downtime.

Cloud computing and storage technologies have developed rapidly in recent years [10]. According to the National Institute of Standards and Technology, a cloud computing system has five essential characteristics: on-demand self-service, broad network access, resource pooling, rapid elasticity, and measured service. A provider of such a service can store large amounts of data and perform computations on them efficiently [65]. When a stand-alone system with limited computing resources and a small storage capacity is combined with cloud technology, its performance and capabilities are significantly expanded, creating a network that provides a shared service for multiple users. The first step in controlling product quality and equipment through a combination of cloud capacity, the IoT, middleware, and big data [66] is to define the data storage technology of the machines connected to the network. In this work, we use external cloud storage that can communicate with the machines in the industrial test case and/or with the different IT components (e.g., HMI, personal computers, tablets, local hard disks). Whenever a failure occurs, the PLC sends an alarm message to the HMI, which sends a text file to a dedicated cloud to enrich a historical database. The AD stores all data recorded by sensors or computed on a local hard disk through a dedicated function called “Alarms Historical Management,” and saves the alarms to the cloud to ensure data protection. The alarms can also be sent via e-mail to all operators involved in maintenance activities.
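The alarm path described above (PLC alarm, HMI text file, local historical log, cloud copy, optional e-mail) can be outlined as follows. This Python sketch is hypothetical: the tab-separated line format, the function names, and the injected `upload` and `notify` callables stand in for whatever HMI, cloud, and mail interfaces the real AD uses, which the text does not specify.

```python
from __future__ import annotations

import tempfile
from datetime import datetime, timezone
from pathlib import Path
from typing import Callable


def format_alarm(alarm_id: str, message: str, when: datetime) -> str:
    """One tab-separated text line per alarm (assumed format)."""
    return f"{when.isoformat()}\t{alarm_id}\t{message}\n"


def handle_alarm(
    alarm_id: str,
    message: str,
    history_file: Path,
    upload: Callable[[str], None],
    notify: Callable[[str], None] | None = None,
    when: datetime | None = None,
) -> str:
    """Log an alarm locally, copy it to the cloud, and optionally e-mail it."""
    line = format_alarm(alarm_id, message, when or datetime.now(timezone.utc))
    # Local historical log, playing the role of "Alarms Historical Management".
    with history_file.open("a", encoding="utf-8") as f:
        f.write(line)
    upload(line)  # hypothetical stand-in for the cloud copy
    if notify is not None:
        notify(line)  # hypothetical stand-in for the e-mail to operators
    return line


# Usage: lists replace the real cloud and mail services.
cloud_copies: list[str] = []
mail_queue: list[str] = []
t0 = datetime(2021, 3, 1, 8, 15, tzinfo=timezone.utc)
with tempfile.TemporaryDirectory() as tmp:
    log = Path(tmp) / "alarms_history.txt"
    handle_alarm("ALM0001", "Filler valve timeout", log,
                 upload=cloud_copies.append, notify=mail_queue.append, when=t0)
    logged = log.read_text(encoding="utf-8")
print(logged == cloud_copies[0] == mail_queue[0])  # True
```

Injecting the upload and notification functions keeps the sketch independent of any particular cloud or mail provider.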

The final database is used by the industrial machines for optimal management. The collected data can also be used by organizations for business activities and/or to improve the effectiveness of their actions through measurement models typically developed by data analytics [11,67]. With a smart automated decision-making process, companies’ competitiveness can be strengthened in terms of cost reduction, added value, or any other measurable parameter [68]. The AD implementation combines the operator alarms with troubleshooting based on the OPL format and the near-zero-failure model. It is functionalized for industrial environments, restores the supervision procedure, and improves the images and text sent to the involved HR.

We developed the Mobile Application for Maintenance Tracking and Monitoring (MAM-TAM) for Android devices, which accesses the cloud directly after authentication, displays the text files, images, and PDF documents of the OPL linked to an alarm code, and can retrieve data from both external and local memories. A similar application has been implemented for Windows rugged tablets for industrial use. Through the MAM-TAM, the system is monitored in real time, so that each dedicated HR can perform supervision and alert other operators to different risk conditions. The ability to collect real-time information at all levels without changing the management mechanism of the automated production process makes it possible to keep the security level unchanged [10] without using the HMI memory.
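Retrieving the OPL documents linked to an alarm code from either local or external memory could follow a local-first lookup with a cloud fallback and local caching. The Python sketch below assumes that behavior; `find_opl_documents` and the `fetch_from_cloud` callable are hypothetical stand-ins for the authenticated cloud access of MAM-TAM, whose actual API is not described.

```python
from __future__ import annotations

from typing import Callable


def find_opl_documents(
    alarm_code: str,
    local_store: dict[str, list[str]],
    fetch_from_cloud: Callable[[str], list[str]],
) -> list[str]:
    """Return OPL document names for an alarm code.

    Local memory is checked first; on a miss, the cloud is queried and a
    non-empty result is cached locally for later (e.g., offline) reuse.
    """
    docs = local_store.get(alarm_code)
    if docs:
        return docs
    docs = fetch_from_cloud(alarm_code)
    if docs:
        local_store[alarm_code] = docs
    return docs


# Usage with a fake cloud that records how often it is queried.
cloud = {"ALM0001": ["ALM0001.txt", "ALM0001.jpg", "ALM0001.pdf"]}
calls: list[str] = []


def fake_cloud(code: str) -> list[str]:
    calls.append(code)
    return list(cloud.get(code, []))


cache: dict[str, list[str]] = {}
first = find_opl_documents("ALM0001", cache, fake_cloud)   # fetched from the cloud
second = find_opl_documents("ALM0001", cache, fake_cloud)  # served from the cache
print(len(calls))  # 1
```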

《6. Results》

6. Results

The method presented here has been applied in 12 companies over the last four years. The improvements obtained were evaluated with a focus on reductions in downtime, training activities, failures, and outsourced support, and on increases in production. As previously described, we selected the production lines of 12 beverage companies because changes in bottle format do not require difficult SW customization. This choice reduced variability and allowed us to focus on the analysis of human activity. Moreover, the companies represented different sites around the world and had different turnovers (three are among the ten largest food and beverage companies worldwide). We applied dedicated OPLs for smart assistance in all of the enterprises, taking into consideration incorrect operations performed by humans and machines. Table 4 shows the results obtained over the period of nZ-FAD adoption (from 12 to 38 months).

《Table 4》

Table 4 nZ-FAD application time and obtained results in 12 different global companies, with a focus on the reduction of downtime, failures, training costs, and outsourced services, the increase of production, and the number of downtime events after the application of the implemented method.

The smart assistant, which included well-written OPLs, reduced downtime by (23 ± 6)% and failures by (9 ± 3)%, compared with the same number of months before the implementation of the proposed method; no temporal correlation was measured for the downtime reduction (R² = 0.163).
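For readers reproducing figures of this kind, the coefficient of determination of a least-squares line is R² = 1 − SS_res/SS_tot. The Python sketch below computes it for a simple linear fit of an outcome against application time; it assumes the reported R² values come from ordinary linear regression, which the text implies but does not state, and the usage numbers are illustrative placeholders, not the study's data.

```python
def linear_r_squared(x: list[float], y: list[float]) -> float:
    """R^2 of the ordinary least-squares line y = a + b*x."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mx = sum(x) / n
    my = sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    syy = sum((yi - my) ** 2 for yi in y)  # total sum of squares (SS_tot)
    if sxx == 0 or syy == 0:
        raise ValueError("x and y must both vary")
    b = sxy / sxx          # slope
    a = my - b * mx        # intercept
    ss_res = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    return 1.0 - ss_res / syy


# Illustrative values only (not from Table 4).
print(round(linear_r_squared([1, 2, 3, 4], [1, 3, 2, 4]), 2))  # 0.64
```

A value near 0 (as for the downtime reduction) means application time explains little of the variation across companies, while a value near 1 indicates a strong linear dependence.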

We also observed a reduction of (36 ± 16)% in training costs for internal personnel, comparing equal time intervals before and after the machine purchase. Neither the failure reduction nor the training cost reduction was correlated with the time of protocol application (R² = 0.552 and R² = 0.071, respectively). To determine whether the digital assistance system worked correctly, we also evaluated whether requests for outsourced services were reduced. They decreased by (35 ± 13)%, and this value was not related to the nZ-FAD application time (R² = 0.400). This finding indicates that the reduction in failures and downtime was not related to HR training. Production increased by (19 ± 3)%, again with no temporal correlation (R² = 0.144), whereas the number of downtime events decreased by 3504 ± 1250 and displayed a linear correlation with the nZ-FAD application time (R² = 0.927). Normally, careful planning results in a positive linear relationship between the number of months and the number of interventions; in general, the number of interventions tends to increase with the age of the machine. The continuous improvement system must interact with the progressive reduction of maintenance for all degrees of severity. Over the 12–38 month period, the relationship between the number of downtime events and the time of nZ-FAD application followed a logarithmic curve, which tended toward a projected average of 100 interventions per month after six years (Fig. 7).
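The logarithmic trend can be modeled as y = a + b·ln(t), where t is the number of months of nZ-FAD application. Because this form is linear in ln(t), it can be fitted by ordinary least squares and then extrapolated, for example to t = 72 months (six years). The Python sketch below assumes this functional form; the authors' exact fitting procedure is not stated, and the usage data are synthetic and noise-free.

```python
import math


def fit_log_curve(t: list[float], y: list[float]) -> tuple[float, float]:
    """Least-squares fit of y = a + b*ln(t); returns (a, b). Requires t > 0."""
    if len(t) != len(y) or len(t) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    u = [math.log(ti) for ti in t]  # math.log raises ValueError if ti <= 0
    n = len(u)
    mu = sum(u) / n
    my = sum(y) / n
    suu = sum((ui - mu) ** 2 for ui in u)
    if suu == 0:
        raise ValueError("t must take at least two distinct values")
    b = sum((ui - mu) * (yi - my) for ui, yi in zip(u, y)) / suu
    a = my - b * mu
    return a, b


def predict(a: float, b: float, t: float) -> float:
    """Evaluate the fitted curve at time t (months)."""
    return a + b * math.log(t)


# Synthetic example (not the study's data): events/month = 500 - 90*ln(t).
months = [12.0, 24.0, 38.0]
events = [500 - 90 * math.log(m) for m in months]
a, b = fit_log_curve(months, events)
print(f"projected events per month at 72 months: {predict(a, b, 72):.1f}")
```

Extrapolating well beyond the observed 12–38 months amplifies any fitting error, which is one reason longer observation periods are needed to confirm such a projection.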

《Fig. 7》

Fig. 7. Downtime events trend over a period of 12–38 months.

In summary, the presented smart system supports human activities; reduces downtime, failures, and training costs; and increases productivity. Smart maintenance reduces the amount of after-sales assistance and drives outsourced maintenance activities toward near zero through the continuous improvement of the digital platform, thanks to a global management approach to the connection between cyberspace and physical space, big data analysis, and smart application design.

《7. Discussion》

7. Discussion

The adoption of the described methods generates a self-learning process in which it is no longer necessary to train personnel; rather, it is enough to follow the OPLs when needed. Indeed, the experimental results showed that the reductions in failures, downtime events, and related costs were not related to dedicated HR training, but came from the scheduling and machine learning.

This method was inspired by the vision of Society 5.0, which relates physical space to cyberspace [69]. In Society 5.0, the environment defines the global ROI for maximum efficiency using smart systems [70], and innovative approaches are used to manage mass customization [71]. By applying advanced computing technology to statistical analysis with extensive data collection, the system was able to reduce the standard deviation of the customization and restoration activities. The presented approach addresses the paradigm shift in modern maintenance systems from “predict-and-prevent” practices to an “analyze the root cause and be proactive” methodology.

To develop the proposed continuous improvement system in a smart society, it is necessary to:

• Define the human–cyber–physical space for a human-centric society;

• Implement an inter-operative structure for self-learning;

• Functionalize the Industry 4.0 structure;

• Design the network with smart systems in line with the aim of continuous improvement;

• Design the operative and HW structures for physical space and cyberspace management;

• Establish functional scheduling for the customization of processes and the future implementation of AIs.

In this framework, automatic data collection is mandatory in order to define the big data chain using all available tools and to identify the HR needed in the process. With highly efficient personalized devices that can self-improve over time through nZ-FAD systems, the proposed method can be considered a general management approach for any type of organization.

The GSM5 method automates part of the decision-making processes and increases interoperability efficiency, providing a functional analysis of processes that leads to the development of SW tools for custom AD. The processes are connected, resulting in a cycle with automatic continuous improvement at all levels, while always keeping humans at the core. Thus, operators accomplish their activities and perform their functions without necessarily being “digital natives” [18] or experts in IT components (e.g., by using OPLs). In this way, the development of smart factories and smart organizations can be made more sustainable. Once all processes have been explored (including processes in the biological and green sectors, such as those related to environmental impact and toxicology), it will be possible to transfer activity to AI. Together, these steps make the implementation of Industry 5.0 possible.

《8. Future direction and limitations》

8. Future direction and limitations

By implementing the proposed method, it is possible to reduce training time and costs during a technological upgrade to Industry 4.0 and thereby improve productivity. To achieve continuous improvement of the operative process under standardization for global scaling, we started from data collection, which allowed the SW structure to be defined and reorganized; next, we customized the operative processes to reduce risks and improve throughput at the same time.

We tested the presented risk-containment method to identify the appropriate maintenance questions for every change in the digital age, designing the scheduling for the implementation of AIs.

For all these reasons, we hypothesize that neural networks trained to consider human habits (character) can maximize the performance of smart systems while maintaining high standards of quality, sustainability, and interoperability. Once a sufficient amount of data has been acquired from real cases, it will be possible to implement automatic decisions with a “characterized AI” that, like a growing child, uses its senses to interact with systems, even when its capacity for action is reduced. Depending on the sensors, the “characterized AI” neural network may generate messages about different problems, much as the studied humans would, helping HR restore the original conditions with automated actions, albeit within the limits of the automatisms connected with the CPS.

Data from many more HW installations, collected over at least 70 months (see Fig. 7), are needed to confirm the observed trend, to determine the actual number of maintenance activities, and to assess how closely it matches the implemented predictive analysis.

《9. Conclusions》

9. Conclusions

This work contributes to the renewal of diagnostic systems, supporting companies in aligning with Industry 5.0 through the study and scheduling of the interaction between humans and technology. Within this framework, it is of great interest to establish a direct relationship between producers and machine programmers through a flexible, customizable, and easily scalable management system.

The GSM5 can be considered a universal method for the automated management of smart maintenance activities and smart application design. The dedicated SW structure reduces failures to near zero, interacts with maintenance and management teams for continual quality improvement, and manages the alarms of automated lines without specific HR training. Operational activities are continually accessible through a user-friendly interface, and the digital collection of feedback from the monitoring activities can be customized.

The results are used as input for integrated maintenance plan management to reduce spare parts, storage costs, and downtime while maintaining high product quality in all contexts of a smart factory.

The system, which includes real-time evaluation and regulation, permits optimization of the artificial logic network and interactive updating of the processes of the organization.

The scheduling and the functionalized use of alarms as a connection between cyberspace and physical space have demonstrated their potential to reduce delay time and costs. These processes make this technology particularly suitable for alignment with the concepts of continuous improvement and multi-sectorial integration, on which Industry 4.0 is based.

The human–machine interaction activities are developed and customized ad hoc for the context under analysis and are improved through effective scheduling, thus reducing deviations that are no longer compatible with the ideas of globalization and waste elimination.

It is essential to apply a method that ensures HR teaching in the shortest possible time in order to reduce the risk of failures, because the most important problems lie not in the change itself, but in the rapid achievement of sustainability and wellness objectives.

As a preliminary assessment, this paper may be useful in establishing a global standard method and continuous improvement operative processes for zero failure and waste. The empirical results presented herein are relevant to the environmental functionalization based on Industry 4.0 technology, and can be taken as an example of future impacts due to the introduction of new processes. With this Industry 4.0 technological upgrade, which requires a high level of digital training, it is possible to reduce both training time and costs in order to improve productivity, thus taking a significant step toward the factory of the future (i.e., the smart factory).

This paper can provide guidance for managers and policy makers in selecting the optimal operative procedure for the implementation of smart systems and for maintenance with the best human–machine interactions possible.

《Acknowledgements》

Acknowledgements

This study was financially supported by Grant 2017, Cariparma Foundation, Department of Medicine and Surgery, for the funded project: Center for Health Workers Medical Simulation and Training (centro per la simulazione in medicina e l’addestramento degli operatori sanitari), where we started new tests in a surgical training context. A Center for Studies in European and International Affairs (CSEIA) grant for scientific publication was awarded to Ruben Foresti for the risk-containment approaches.

We extend our thanks to: Sidel S.p.A., Parma, for the cooperation in the development of the AD system; CSEIA of the University of Parma for the award assigned to nZ-FAD, used to cover the costs of open-access publication; and Prof. Antonio Mutti, Department of Medicine and Surgery, for the opportunity to begin testing the GSM5 method at the School of Specialization in Medicine and Surgery at the University of Parma.

《Author contributions》

Author contributions

Ruben Foresti, conceptual design; Ruben Foresti and Matteo Magnani, experimental design, technology design, and implementation; Ruben Foresti, Stefano Rossi, and Nicola Delmonte, big data analysis, drafting and revision of the manuscript. All authors wrote, critically read, and approved the final manuscript.

《Compliance with ethics guidelines》

Compliance with ethics guidelines

Ruben Foresti, Stefano Rossi, Matteo Magnani, Corrado Guarino Lo Bianco, and Nicola Delmonte declare that they have no conflict of interest or financial conflicts to disclose.
