《1. Introduction》

1. Introduction

Given the emergence of intelligent manufacturing capabilities, we may wonder whether design for manufacturing (DFM) practices should be reconceptualized to take advantage of these capabilities. And, if so, what should design for intelligent manufacturing (DFIM) be? The integration of cloud computing, data analytics, artificial intelligence (AI), and the Internet of Things (IoT) with advanced manufacturing technologies has enabled the emergence of what has been called new-generation intelligent manufacturing [1]. Such new-generation intelligent manufacturing capabilities will enable transformational new products and services with unprecedented levels of quality, responsiveness, and efficiency.

At the same time, products are becoming more intelligent. Smartphones, self-driving cars, smart appliances for the home, and many other products have revolutionized customer expectations regarding product functionality and interactivity. A smartphone product is not simply the physical device itself; rather, it is a complex combination of the device, user interaction languages, a communications infrastructure, and a computational infrastructure. Furthermore, the customer does not simply use the physical device for its physically enabled functionalities; rather, the customer utilizes services that are enabled by the device and by the infrastructures supporting it.

The development of new-generation intelligent manufacturing systems (NGIMSs) goes hand-in-hand with the development of transformational products. Both leverage new technologies for computation and communications. Such products are enabled by new-generation intelligent manufacturing and, in turn, increase the demand for manufacturing capabilities, which spurs technological advances. These products become embedded with the services expected by customers. As a result, product design can no longer be restricted to the design of a physical device. Instead, design must encompass the device, the services enabled by the device, and the infrastructures that support those services. The purpose of this paper is thus to explore the new paradigm of DFIM that encompasses the design of the product–service–system (PSS) and the design of the intelligent manufacturing infrastructure to support it.

Traditionally, DFM and design for assembly (DFA) have focused on understanding manufacturing process constraints and how to design for process capabilities and around those constraints [2]. In contrast, design for additive manufacturing (DFAM) expands upon DFM/DFA methods to focus on how to take advantage of the unique capabilities of additive manufacturing (AM) [3]. That is, designers are encouraged to explore new design concepts creatively in order to design products that cannot be manufactured economically by means of conventional processes. DFIM fits into this overall design for “X” context in that it is more similar to DFAM than to DFM/DFA, but represents a significant expansion in scope. In DFIM, designers can explore new services and business opportunities, as well as new physical devices. However, they should consider the limitations of user interfaces, communication and computational infrastructures, and manufacturing processes during the design process, in a manner consistent with traditional DFM/DFA practices.

This paper first describes the new-generation intelligent manufacturing context for this discussion, and then describes the characteristics of the new generation of smart, transformational products. An introduction to PSS design follows, providing important methodology. A framework for DFIM is then offered that connects these elements into a coherent whole, and its implications for design are discussed before the paper concludes.

《2. Intelligent manufacturing context》

2. Intelligent manufacturing context

Much has been written about intelligent manufacturing systems over the past 20 years and more. The incredible growth in computing capacity and capabilities, combined with networking and AI advances, warrants a modern consideration of manufacturing technologies in light of the convergence of communications, computation, and AI technologies. Zhou et al. [1] offered an important treatise on intelligent manufacturing and its evolution over time. They identified three main phases of development: digital manufacturing, digital-networked manufacturing, and new-generation intelligent manufacturing.

The widespread adoption of computers heralded a new era of manufacturing that saw the development of numerical control (NC) and computer-numerical control (CNC), which was the advent of digital manufacturing. When computer networks and the Internet became prevalent, a second phase emerged in the form of digital-networked manufacturing, where machines, work cells, factory floors, and even enterprises and supply chains could be integrated. The concept of cyber–physical systems emerged, with the cyber system serving as an intermediary between the human and the machine tool. Over time, the cyber system took on more and more tasks, thereby providing a level of automation to free human operators from much of the detailed manufacturing work.

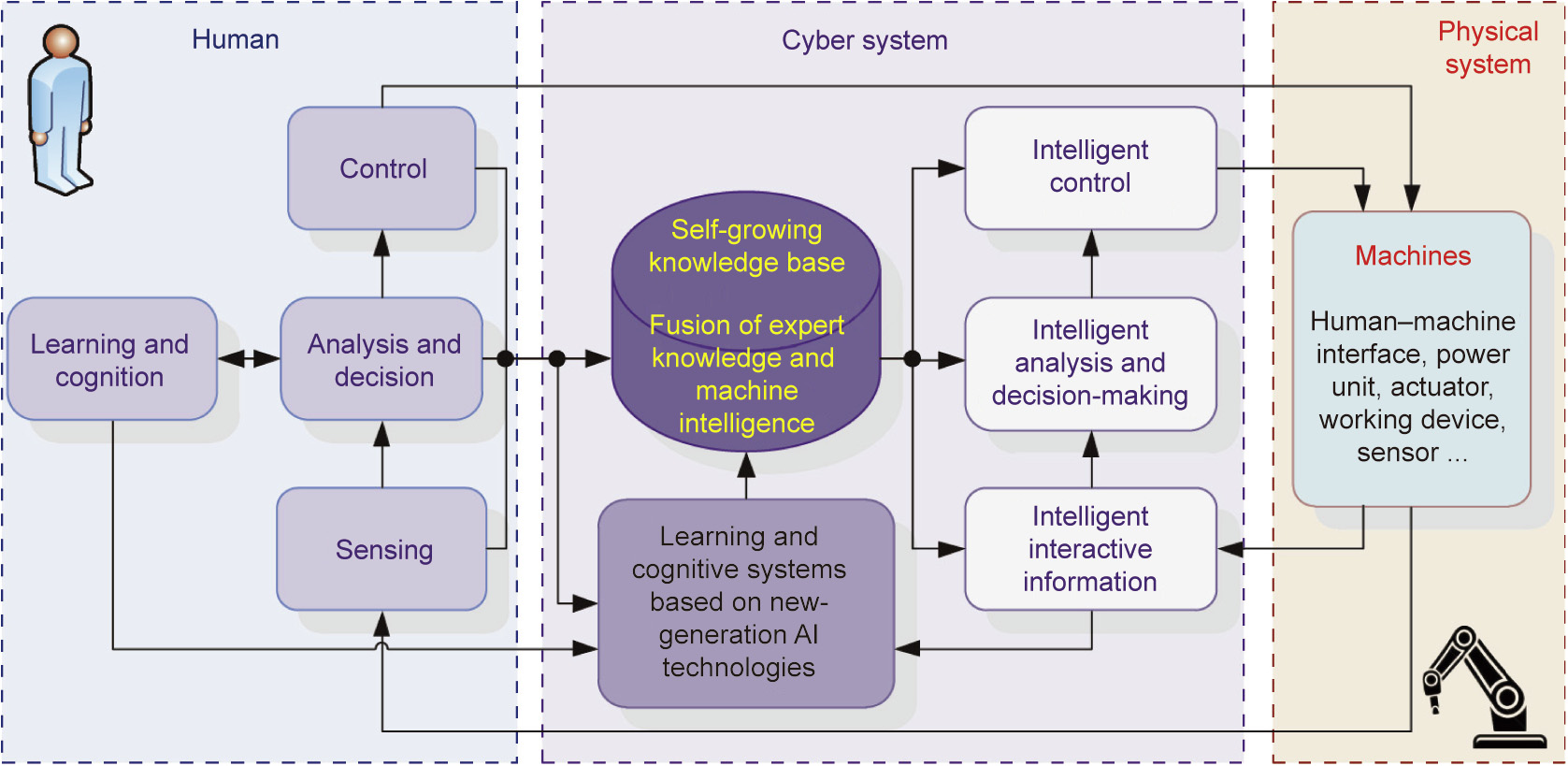

The third phase, that of NGIMS, leverages the rapid development of AI and machine learning technologies to add an element of intelligence and supervision to the cyber system intermediary. Furthermore, we are starting to see machine learning technologies being applied to enable NGIMS to learn and adapt over time in order to increase their automation capabilities. Zhou et al. [1] illustrated NGIMS as shown in Fig. 1. The cyber system depends on extensive sensors on the machines and factory floor to obtain a real-time (or near real-time) view of the manufacturing system. Using this data stream, NGIMS can analyze many aspects of operations, identify and diagnose problems, make some decisions, and exert a level of control over the system without burdening human operators and managers with mundane issues. By integrating machine learning technologies, it is expected that NGIMS can learn over time to improve their performance and, perhaps, take on additional roles and responsibilities.

《Fig. 1》

Fig. 1. Schematic of an NGIMS [1].

As is well known, the term “Industry 4.0” has been applied to this emerging class of NGIMS and the enterprise practices enabled by NGIMS. It is important to understand that future manufacturing systems will comprise hybrid systems of human and robotic operators; additive, subtractive, and forming processes; and cyber and physical systems [4]. An important constraint to consider is the scalability of physical (non-cyber) technologies and systems, since computation and communication systems seem readily scalable.

What is important from the perspective of DFIM is the improving capabilities of manufacturing systems, their reliability and dependability, and the various developed technologies that can be leveraged for new intelligent products. The computing infrastructure required to support NGIMS is very significant, as is the communications infrastructure. Central to the cyber system in NGIMS is the concept of a digital twin—that is, the digital representation of a machine, work cell, production line, or factory. Data analytics and simulations can be executed on these digital twins to extract knowledge about their state, predict maintenance needs, anticipate significant operational issues, and so forth. These same concepts and capabilities can be extended to intelligent products, and impose significant issues and opportunities onto the design of those products.

《3. New generation of products》

3. New generation of products

It is likely that we will see a stream of new products that follow the model of smartphones—that is, products that consist of the combination of a physical asset with support from extensive online systems of information and computational capabilities, in order to provide a wide range of services. New-generation products have the following characteristics:

• Adaptivity and responsivity to customers and operating environments;

• Incorporation of embedded sensors that are connected to the IoT;

• Presence of a digital twin of the product;

• Provision of automatic updates of software and cloud-based functionality;

• Provision of predictive maintenance;

• Presence of a combination of the physical product and services.

We are seeing the integrated development of the production-based service industry and the service-oriented manufacturing industry. The current trend is toward their convergence; companies will span a spectrum of product and service offerings. To design such complex systems, methods from PSS design can be leveraged.

《4. Product–service–system design》

4. Product–service–system design

The PSS concept was introduced in the late 1990s. One notable definition is “innovation strategies where instead of focusing on the value of selling physical products, one focuses on the value of the utility of products and services throughout the product’s life period” [5]. According to this definition, Maussang et al. [6] stated that a PSS consists of physical objects and service units that relate to each other, and that its main focus is to provide functionality to the customer. They further explained that “the physical objects are functional entities that carry out the elementary functions of the system, [and] the service units are entities (mainly technical) that will ensure the smooth functioning of the whole system.” Several types of PSS have been defined. Of these, the use-oriented and result-oriented categories are of particular interest since they focus on the usage or service that is delivered, rather than strictly on the product itself [7]. With the result-oriented type of PSS, customers buy services rather than the product itself, although the product is typically delivered for the customer to use [8].

A prominent PSS design method was developed to provide engineering designers with specifications related to the requirements of the system as a whole [6]. In order to develop a successful PSS, the whole system must be considered, including the physical objects and service units. The functional analysis approach [9], which utilizes a graph of the interactors along with functional block diagrams, can be used to bridge the gap between the system approach and product development. The structured analysis and design technique representation has also been applied to describe a scenario based on a set of sequential activities. In this proposed model, operational scenarios are applied to describe systems once the physical objects and service units on the main level have been identified. These tools, together with functioning scenarios, have been proposed for use in designing consistent PSSs. Customer needs are captured by the concept of receiver state parameters [7] to characterize the change of state for a customer. This change of state represents the value that is perceived by the customer. The topic of designing PSSs is continued in Section 6, where design methods for DFIM are discussed.

《5. Framework for DFIM》

5. Framework for DFIM

This paper proposes a framework for DFIM as a PSS composed of an intelligent product and a back-end system, derived from NGIMS. This framework is presented below with an overview followed by an operational view. Some industry examples of PSSs and digital twins are then described briefly.

《5.1. Overview》

5.1. Overview

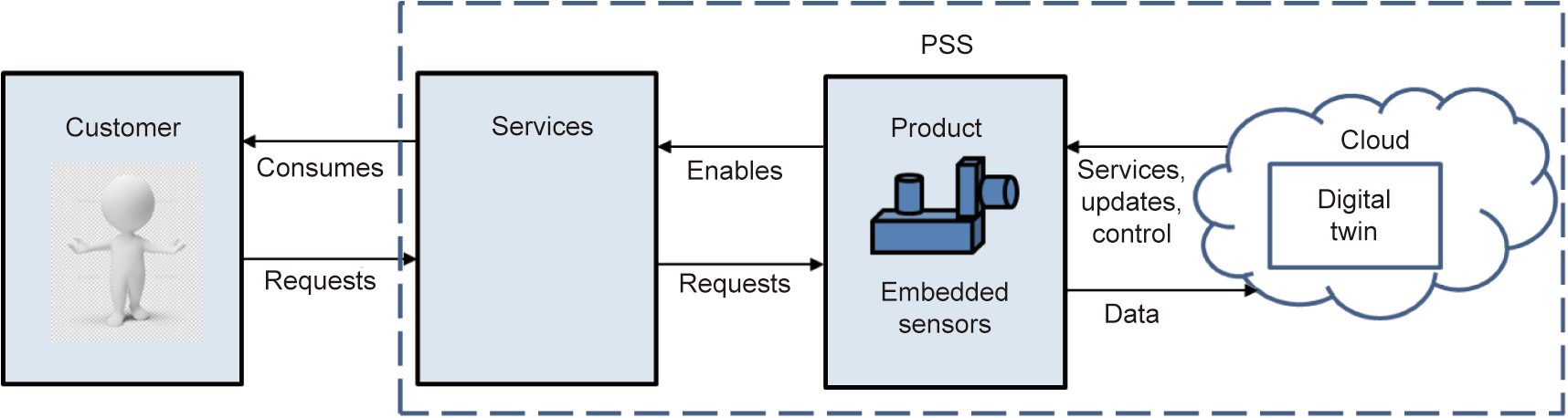

From a high-level perspective, we see customers interacting with a PSS. That is, customers request services by interacting with the physical product, and then consume those services. This process is illustrated in Fig. 2. Within the PSS, the “services” portion acts as an intangible intermediary between the customer and the product. This portion of the PSS plays the role of the user interface of the product, which allows the customer to request services and then receive and consume them.

《Fig. 2》

Fig. 2. High-level schematic of the interactions within a PSS.

On the back-end of the PSS, embedded sensors in the product communicate with a cloud-based product support environment. A digital twin of the product is maintained by collecting sensor data. Data analytics can be used to extract important usage data and update the product state. Simulations of the product in its current state can be performed to determine, for example, whether maintenance is required or software updates are needed. To the extent that the cloud-based environment contributes to the delivery of services, data analytics can help determine whether upgrades to the service-providing software are needed.
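The back-end loop described above (collect sensor data, update the twin, run analytics, decide whether maintenance is needed) can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the `ProductTwin` class, the vibration signal, the averaging window, and the threshold are hypothetical and are not taken from any particular product support environment.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import List


@dataclass
class ProductTwin:
    """Hypothetical digital twin: a cloud-side record of one product's state."""
    product_id: str
    vibration_limit: float              # assumed maintenance threshold (arbitrary units)
    window: int = 5                     # number of recent readings to average
    readings: List[float] = field(default_factory=list)

    def ingest(self, vibration: float) -> None:
        """Update the twin's state from one streamed sensor reading."""
        self.readings.append(vibration)

    def recent_average(self) -> float:
        """Very simple data analytics: mean of the most recent readings."""
        recent = self.readings[-self.window:]
        return mean(recent) if recent else 0.0

    def needs_maintenance(self) -> bool:
        """Flag maintenance when the recent average exceeds the assumed limit."""
        return self.recent_average() > self.vibration_limit


# Illustrative sensor stream with made-up values that drift upward over time.
twin = ProductTwin(product_id="unit-001", vibration_limit=1.0)
for value in [0.6, 0.7, 0.9, 1.2, 1.4, 1.5]:
    twin.ingest(value)

print(twin.needs_maintenance())
```

A real environment would replace the moving average with simulations or predictive models of the product, but the structure (state updated from sensor data, followed by an analysis step and a decision) would likely be similar.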

The operation of a current PSS, such as a smartphone, is clearly illustrated in the schematic in Fig. 2. If the customer wants to find a new app, for example, they navigate through the phone’s user interface to the App Store icon, start it, and then browse through the apps that are retrieved. On the back-end of the PSS, an extensive cloud-based system is required to collect, catalogue, archive, validate, and retrieve apps. However, most current PSSs lack a digital twin and services that are enabled by keeping track of the product’s state. This situation is likely to change soon.

《5.2. Operational view》

5.2. Operational view

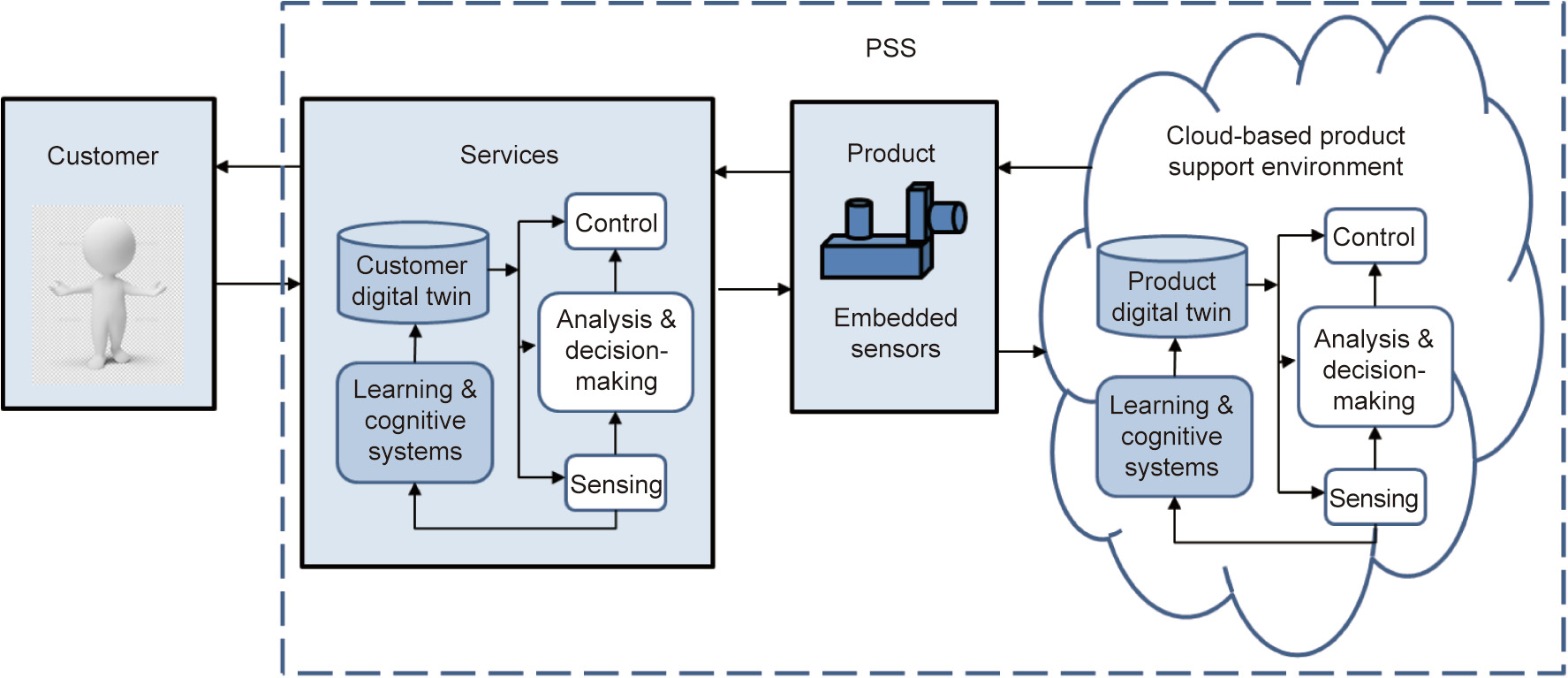

A closer look at the operations of PSSs will provide greater insight. Consider first the back-end cloud-based product support environment. As mentioned earlier, the digital twin is a representation of the product that is updated based on data streaming into the environment from sensors in the product. To update the product state, perform data analytics, and perform product simulations, the environment must have some control and decision-making capabilities. Ideally, an intelligent environment should have some executive oversight capabilities so that it can learn over time to improve its performance and the PSS’s performance.

Similarly, the PSS should have the ability to learn about the customer in order to provide better and more tailored services. In other words, the PSS should have a digital twin of the customer and use this representation as the basis for data analytics and simulations.
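As a rough illustration of how a PSS might learn about a customer, the sketch below keeps a running estimate of one preferred setting and moves it toward each observed choice. The `CustomerTwin` class, the single numeric preference, and the learning rate are hypothetical simplifications; an actual customer twin would presumably track far richer behavior.

```python
class CustomerTwin:
    """Hypothetical customer twin that learns one preferred setting over time."""

    def __init__(self, initial_guess: float, learning_rate: float = 0.5) -> None:
        self.preferred = initial_guess        # current estimate of the preference
        self.learning_rate = learning_rate    # assumed weight given to new evidence

    def suggest(self) -> float:
        """Setting the PSS would offer by default, based on what it has learned."""
        return self.preferred

    def observe(self, chosen: float) -> None:
        """Move the estimate part of the way toward what the customer chose."""
        self.preferred += self.learning_rate * (chosen - self.preferred)


# The customer repeatedly overrides the default of 20.0 with 22.0 (made-up values).
customer = CustomerTwin(initial_guess=20.0)
for chosen in [22.0, 22.0, 22.0]:
    customer.observe(chosen)

print(customer.suggest())
```

Each observation closes part of the gap between the suggested and chosen settings, so the default offered by the PSS converges toward the customer's actual behavior.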

Fig. 3 shows a more detailed view of the PSS and customer interaction that includes operational elements within the “services” portion and the cloud-based environment. Similar to the operations and learning loops in Fig. 1, it is expected that future intelligent PSSs will have digital twins of the product and of the customer that are updated and analyzed. Control, analysis, and decision-making activities are enabled by sensor data and, in turn, enable the PSS to be responsive and adaptive to the customer and to the product’s usage environment. Furthermore, the PSS will have the ability to learn over time.

《Fig. 3》

Fig. 3. Schematic of an intelligent PSS at the operational level.

Of course, the customer also possesses control, analysis, and decision-making abilities and learns over time about the product and its services (this is not shown for reasons of clarity).

《5.3. Examples》

5.3. Examples

Several industry examples have been described of digital twin technology being applied to intelligent PSSs. The General Electric Company embeds sensors into its power-generation turbines and, more recently, into its aircraft engines in order to collect real-time data [10]. Combined with novel inspection robots, the company can apply data analytics to predict maintenance needs and make recommendations to customers. These data and predictive models can be utilized when developing new products.

Tesla constructs a digital twin for each car it sells [11]. Data collected from the car are analyzed, issues are identified, and software updates are prepared to address those issues. Providing software updates over the Internet allows customers to continue to use their cars without the need for a service visit, which significantly improves the user experience. In the future, as Tesla and other companies continue to develop autonomous vehicles, it is easy to imagine that data on driving conditions (e.g., day/night and weather), road properties (e.g., curves and up/down-hill), and driver actions, coupled with the occurrence of accidents, could be aggregated and analyzed to improve the car model’s performance. Furthermore, data from a single car could be analyzed to provide fine-tuning of the vehicle’s actions. For the conventional human driver mode, the maintenance of a digital twin of the driver, in addition to one of the car, would enable further fine-tuning of the car’s performance for difficult situations, based on typical driver reactions.

During new product development, a company that possesses data from its vehicles being driven over millions of kilometers will be able to simulate vehicle performance and driver responses to evaluate the effects of proposed design changes. More generally, the collection of product usage data and of data on users’ actions and responses will enable the development of simulation models that can inform design decisions, explore tradeoffs among design alternatives, and predict levels of market acceptance.

《6. Implications for DFIM》

6. Implications for DFIM

《6.1. Underlying principles》

6.1. Underlying principles

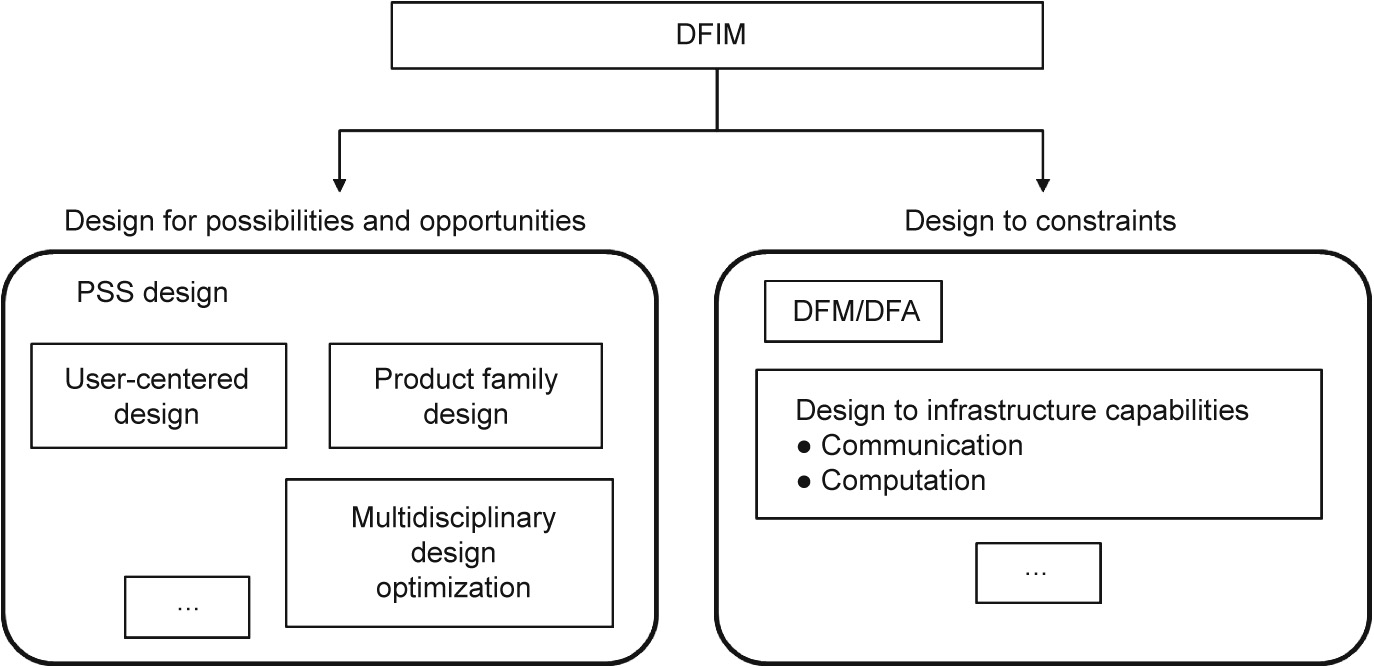

Thus far, this paper has explained what an intelligent PSS is, and that it shares technologies and characteristics with intelligent manufacturing systems. But what about DFIM? As presented here, DFIM has little in common with conventional DFM. DFIM has more in common with DFAM, in that DFAM has two main objectives: to explore new design spaces in order to take advantage of the unique capabilities of AM, and to design the physical artifact in a manner that satisfies manufacturing process constraints. Likewise, DFIM focuses much more on how to take advantage of intelligent manufacturing system capabilities and technologies than on manufacturing constraints. This view of DFIM as consisting of two different aspects is illustrated in Fig. 4; the two aspects are design for possibilities and opportunities, and design to constraints.

《Fig. 4》

Fig. 4. DFIM as comprising two aspects: design for possibilities and opportunities, and design to constraints.

Before exploring these aspects further in subsequent sections, some underlying principles of DFIM are identified below:

• DFIM encompasses the design of future intelligent PSSs that will be manufactured by intelligent manufacturing systems.

• The scope of the DFIM task is to design much more than a physical product; it requires the design of services to be offered through a physical device, in addition to the design of the back-end product support environment and the design of the physical product.

• Since developing the product support environment requires a very significant investment, designers should plan to design several product families and generations of products in order to amortize the investment.

《6.2. Design for possibilities and opportunities》

6.2. Design for possibilities and opportunities

This first aspect of DFIM focuses on the idea of designing for the possibilities and opportunities that are afforded by new product and service concepts and new technologies. This aspect is very much about creatively searching for solutions for new and emerging customer needs.

The proposed foundation for DFIM is based on the methodology of PSS design. PSS design methods are supported by other design methodologies found in the engineering, business/marketing, and industrial design literatures. Three specific methodologies are highlighted here as being of great importance, but many others are relevant as well.

According to the PSS literature [12], PSS design methods involve the identification of ① customer-oriented functions to be provided by the PSS, ② measures of customer value, ③ use scenarios, ④ function structure for the PSS, ⑤ product architecture, and ⑥ specifications of product elements. For our purposes, we should adapt this generic method for intelligent PSSs that are supported by significant product support environments.

The first three elements of the PSS design method highlight the importance of identifying the services that customers want and expect, and then developing metrics for measuring the value that customers place on these services. The critical consideration is to plan for a years-long PSS lifetime, by imagining what customers will want over that lifetime, and imagining what new services are enabled by a PSS that learns over time about both the products and the customers. User-centered design methods [13] can certainly be applied, albeit with a twist: Designers should consider how customer expectations and requirements, and the capabilities of the PSS, will evolve over time. Taken to an extreme, methods for involving customers in the design of their own products are also being pursued [14].

When designers consider the last three PSS design elements, they need to develop the PSS architecture and make important decisions about how to allocate functions to the physical product, the user interface, and the back-end environment. They also need to consider the evolution of the communications and computational infrastructures over the PSS lifetime. For example, designing for a 5G wireless network will be very different from designing for 4G. The allocation of functions to the PSS elements may also evolve over time, and designers should consider this potential evolution as well. These issues were highlighted in the Tesla example, as that company can identify all opportunities for remotely improving vehicle performance through software updates. How much vehicle functionality should be accessible by the manufacturer? How much should be customer-tunable? Is the communication infrastructure capable of handling the burdens of data collection and software upgrades? These and many other questions must be addressed during the design process.

Design methodology literature includes an extensive body of research on product family design, which is the idea of designing a group of similar products with a range of functionalities, capabilities, and/or sizes [15]. The product family will likely share core technologies, features, or components. For example, cars are designed as product families, with a range of sizes (small, medium, large), styles (coupes, sedans, sport cars), and price points. Product family design methods involve the identification of product architectures that are suitable for providing the desired range of capabilities, while typically sharing as much technology and as many components as possible [16]. The identification of a common platform and options packages is a significant step in the design method [17].

A branch of product family design literature considers product family design over product generations (surveyed in Ref. [18]). In this case, the evolution of the product family is planned such that new functionality and capabilities are introduced over the various product generations. This method seems highly relevant to intelligent PSS design, in order to ensure that the back-end environment can support future product generations as the PSS evolves.

The field of multidisciplinary design optimization (MDO) [19] may also play a role in PSS design. The physical product, the service delivery system, and the back-end environment can be considered as different “disciplines” in the MDO context. That is, they are very different types of subsystems that need to be designed. During their design process, significant challenges must be addressed: The subsystems are coupled to one another and need to integrate to form a tightly coupled system from the customer’s perspective. Although originally developed for the aerospace industry, MDO methods have had widespread application [20]. In aerospace, the coupled competing subsystems tend to include structures, propulsion, aerodynamics, and payload. MDO methods involve the formulation and solution of large-scale, hierarchical, and coupled optimization problems. Perhaps these methods could be adopted for the optimization of PSSs after their architectures have been determined.
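To make the notion of a coupled optimization problem more concrete, a generic MDO statement for three subsystems might be written as follows. This is only an illustrative sketch in common MDO notation, not a formulation proposed in this paper; the symbols are assumptions, with $x_0$ denoting design variables shared by all subsystems, $x_i$ the variables local to subsystem $i$ (for example, 1 for the physical product, 2 for the service delivery system, and 3 for the back-end environment), and $y_i$ the coupling quantities that subsystem $i$ passes to the others.

```latex
\begin{aligned}
\min_{x_0,\,x_1,\,x_2,\,x_3} \quad & f(x_0, x_1, x_2, x_3, y_1, y_2, y_3) \\
\text{subject to} \quad & g_i(x_0, x_i, y_1, y_2, y_3) \le 0, \qquad i = 1, 2, 3, \\
& y_i = Y_i\!\left(x_0, x_i, \{y_j\}_{j \ne i}\right), \qquad i = 1, 2, 3.
\end{aligned}
```

The last line expresses the coupling: each subsystem's outputs depend on the outputs of the others, so the subsystems cannot be optimized in isolation.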

《6.3. Design to constraints》

6.3. Design to constraints

The other perspective of DFIM involves designing to the intelligent manufacturing system’s capabilities and avoiding its constraints. From this perspective, we are primarily concerned with the design of the physical product, which will be manufactured using the NGIMS. We can expect the NGIMS to deliver consistent, reliable manufacturing, since the systems will be monitored extensively (using many sensors), problems will be identified quickly, and the systems will learn and improve over time.

Products should be designed taking into account the capabilities and limitations of the relevant manufacturing processes. In one sense, this is straightforward DFM. However, it is necessary to delve deeper into the consideration of expected market sizes, response time expectations, and customization opportunities.

It is useful to separate product manufacture into two categories: the product family platform, which will be manufactured in high volume, and the components and modules that provide differentiation for individual product models, product generations, or even customized products. The former case falls under conventional DFM, and standard approaches for DFM and DFA should be practiced. The latter case presents much more challenging issues because of the complexities that arise from the combinatorial explosion of options. These two categories are explored further below.
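The combinatorial explosion of options is easy to underestimate, so a small sketch may help. The sizes and styles below echo the car example given earlier; the trim levels and colors, and the idea of treating every combination as a valid variant, are hypothetical simplifications.

```python
from itertools import product
from math import prod

# Hypothetical option catalogue layered on one product family platform.
options = {
    "size": ["small", "medium", "large"],
    "style": ["coupe", "sedan", "sport"],
    "trim": ["base", "premium"],
    "color": ["white", "black", "red", "blue"],
}

# The number of variants is the product of the number of choices per option.
variant_count = prod(len(choices) for choices in options.values())

# Enumerate every variant explicitly (feasible only for small catalogues).
variants = list(product(*options.values()))

print(variant_count)  # 3 * 3 * 2 * 4 = 72
```

Even this small catalogue yields 72 variants from a single platform, and each additional option multiplies the total, which is why low-volume differentiating components call for different manufacturing choices than the platform does.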

Since the product family platform will be present in every product, components in the platform can be expected to be produced in much larger quantities than some specialty options. As such, conventional manufacturing processes may be appropriate for these components if they are manufactured in high volumes. Investments in hard tooling and human-based process planning can be recouped in high volume production. Nonetheless, product developers need to select manufacturing processes that are capable of fabricating parts to the expected quality, economically, and fast enough to satisfy customer delivery times. Alternatively, the product development organization may decide to outsource much or all of the platform, depending on whether the organization believes it can deliver the highest value in the product family options or in the platform.

For the components and modules, decisions on manufacturing processes largely depend on expected production volumes. For low volume options, manufacturing processes that do not require much hard tooling or manual process planning will be favorable. Injection molding dozens or even hundreds of parts for a specialty product option will result in costly parts with long lead times. In contrast, the usage of AM processes, which require no hard tooling and a minimum of human intervention in manufacturing, may have many benefits. Typically, part costs for AM are constant relative to production volume, and lead times are several days or less. For metal parts, five-axis machining may also be favorable, provided that part-specific fixtures are not needed and process planning can be automated. Selection methods for manufacturing processes are well developed [3,21] and are even being standardized [22].
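The volume argument above can be sketched with a simple cost model: a tooled process spreads a fixed tooling cost over the production volume, whereas AM is assumed to have a constant cost per part. The function names and all cost figures below are hypothetical and chosen only to make the arithmetic easy to follow; real process selection involves many more factors [3,21].

```python
def unit_cost_tooled(volume: int, tooling_cost: float, cost_per_part: float) -> float:
    """Unit cost of a tooled process: tooling amortized over volume, plus per-part cost."""
    if volume <= 0:
        raise ValueError("volume must be positive")
    return tooling_cost / volume + cost_per_part


def break_even_volume(tooling_cost: float, tooled_cost_per_part: float,
                      am_cost_per_part: float) -> float:
    """Volume at which the tooled unit cost equals the constant AM unit cost."""
    if am_cost_per_part <= tooled_cost_per_part:
        raise ValueError("AM is never more expensive per part; no break-even exists")
    return tooling_cost / (am_cost_per_part - tooled_cost_per_part)


# Made-up figures: 20000 for a mold, 2 per molded part, 42 per AM part.
TOOLING, MOLDED, AM = 20000.0, 2.0, 42.0

print(unit_cost_tooled(100, TOOLING, MOLDED))   # cost per molded part at low volume
print(break_even_volume(TOOLING, MOLDED, AM))   # volume above which molding is cheaper
```

Under these assumed figures, molding only becomes cheaper than AM above 500 parts, which is consistent with the observation that tooled processes suit the high-volume platform while AM suits low-volume options.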

Of course, design constraints also arise from limitations on the computational and communication systems being designed as part of the PSS. These constraints need to be quantified and considered during PSS design. Their evolution throughout the expected life of the PSS should be considered as well. However, it is expected that the computation and communication system constraints will not play as great a role as the manufacturing constraints for the physical products.

《7. Conclusion》

7. Conclusion

This paper considers the design of future intelligent products from the perspective of the NGIMS that will produce them. This work is intended to be thought-provoking and speculative. If we imagine a future of smart products, we can then consider what will be required to design and manufacture such products. The emergence of a new generation of intelligent manufacturing concepts, practices, and systems provides additional motivation to consider what DFIM might entail.

Throughout the paper, the following points are emphasized:

(1) DFIM starts with design concepts for new smart products.

(2) Smart products are PSSs; that is, smart products consist of physical products that deliver services to customers by interacting with large computational and communication infrastructures (i.e., utilizing cloud-based computing and IoT technologies). As such, DFIM is highly related to design methods for PSSs.

(3) DFIM comprises two main aspects:

• Design for possibilities and opportunities, which seeks to develop new design concepts and explore the design spaces enabled by these concepts (PSS design methods are the primary means for accomplishing this);

• Design to constraints, which leverages traditional DFM and DFA methods that seek to avoid constraints imposed by manufacturing process limitations.

(4) A comprehensive DFIM method was not proposed in this paper, since it is premature to do so. Additional investigations and design experiences are needed before reliable design methods will emerge for this domain.

It is hoped that the publication of this paper will promote the formation of a community of DFIM researchers that develops, debates, and refines design methods for future smart products that leverage NGIMS.

《Acknowledgements》

Acknowledgements

The author acknowledges support from the Digital Manufacturing and Design (DManD) Centre at the Singapore University of Technology and Design, supported by the Singapore National Research Foundation.
