《1. AI has achieved significant development and increasingly become a common multidisciplinary technique》

In recent years, various scientific and technological breakthroughs have become a reality. AlphaGo, developed by Google, defeated top Go players such as Lee Sedol and Ke Jie; driverless cars have traveled safely for millions of kilometers and obtained legal driving rights in more than ten states in the United States; and image- and speech-recognition techniques have gradually matured and been widely used in consumer products such as cameras and smartphones, bringing great convenience. As a result, the old term “artificial intelligence” (AI) has returned to public view, triggering a new round of technological revolution. Today, AI is included in the major development strategies of many countries and is regarded as a core capability [1–4]. As AI is closely related to many fields, it is expected to become a model framework for interdisciplinary research and to further promote the coordinated development of various fields.

AI has existed for more than 60 years, since its birth at the Dartmouth conference in 1956. The development of its mainstream techniques has gone through three key periods: the reasoning period, the knowledge period, and the learning period. From 1956 to the early 1970s, AI research was in the reasoning period and concentrated mainly on rule-based symbolic representation and reasoning; the representative achievements of this period were various automatic theorem-proving procedures [5]. However, as problems became more difficult, it proved challenging for machines to be intelligent purely on the basis of logical reasoning. Hence, some researchers turned from exploring general laws of thinking to applying specialized knowledge, and AI research entered the knowledge period. From the early 1970s to the end of the 1980s, a large number of expert systems [6] were established and achieved remarkable results in specific application areas. However, as the scale of applications expanded, it became very difficult to summarize knowledge and then teach it to computers. Therefore, some researchers advocated having computers learn knowledge from data automatically, and AI research entered the learning period. Since the early 1990s, AI research has been devoted to machine learning theory and algorithms [7]. Machine learning has not only made breakthroughs in traditional AI tasks such as image recognition [8–10] and speech recognition [11,12], but has also played an important role in many novel applications, such as predicting the activity of potential drug molecules [13], analyzing particle accelerator data [14], reconstructing brain circuits [15], identifying exoplanets [16], diagnosing skin cancer [17], and predicting the effects of mutations in non-coding DNA on gene expression and disease [18,19].

The revival of AI can be attributed to three main factors. First, significant progress in data acquisition, storage, and transmission techniques has resulted in big data, which is an essential resource for AI research. Second, the maturity of high-performance computing techniques and the emergence of powerful computing hardware (e.g., graphics processing units (GPUs) and central processing unit (CPU) clusters) have laid a solid foundation for the study of AI. Last but not least, researchers have accumulated abundant experience and skills in modeling large-scale complex problems, leading to the rapid development of machine learning methods represented by deep neural networks [20], which provide an effective approach to the study of AI. Today, AI is a multidisciplinary technique that can be used in any area that requires data analysis.

《2. Deep learning is prevailing, but its physical mechanism remains unclear》

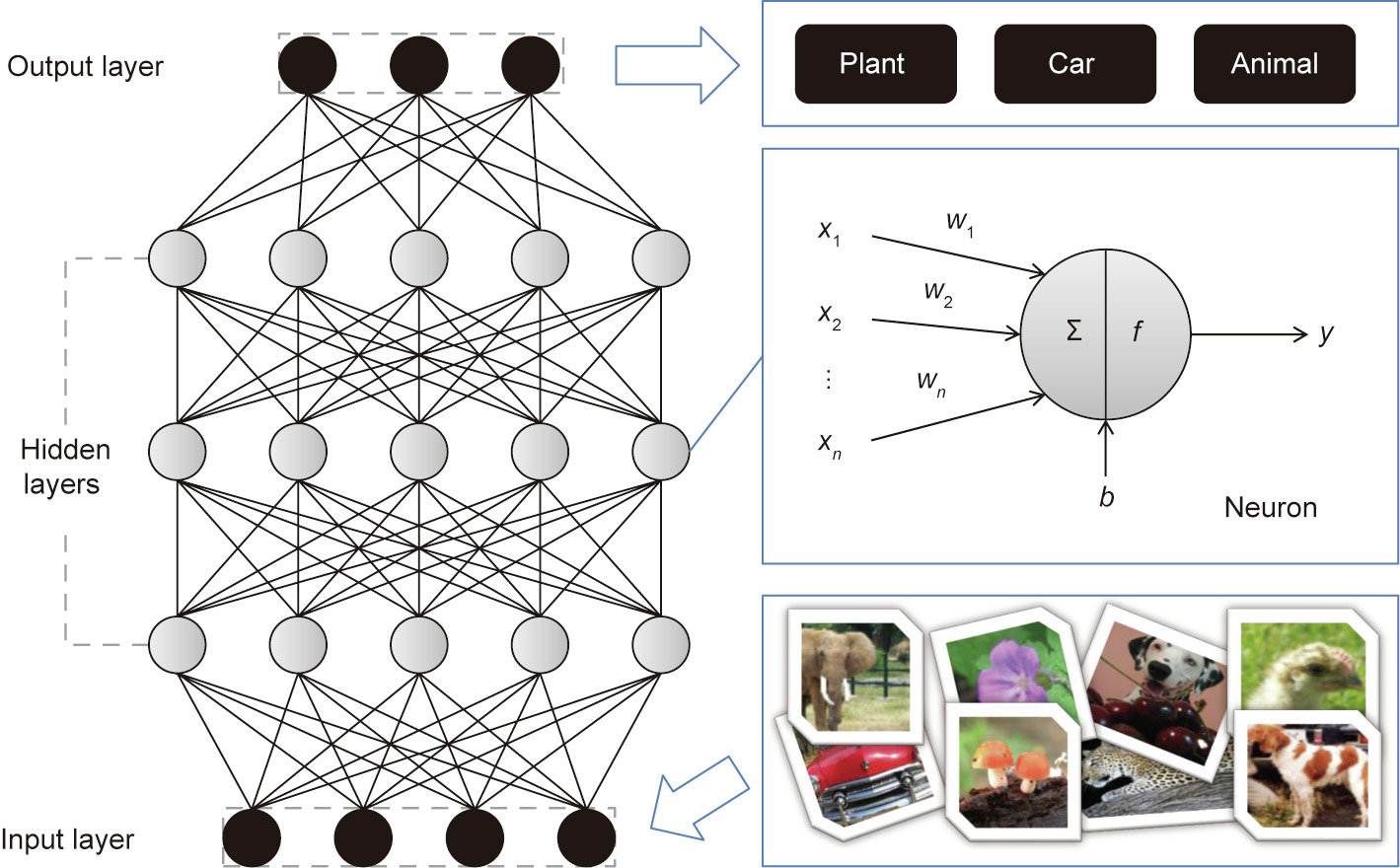

A deep learning model is actually a multilayer artificial neural network. To avoid confusion, it is hereinafter referred to as a deep neural network, the structure of which is shown in Fig. 1. The model consists of three main parts: the input layer, the hidden layer(s), and the output layer. Each node of the input layer corresponds to one dimension of the input data (e.g., a pixel of an image), each node of the output layer corresponds to a decision variable (e.g., a semantic category), and the hidden layers are made up of many “neurons.”

《Fig. 1》

Fig. 1. An example of a deep neural network. The eight images at the bottom right of the figure were adapted from ImageNet (http://www.image.net.org).

In terms of biological mechanism, a neuron receives a potential signal transmitted by other neurons and will be activated and output a signal when the accumulated signal is higher than its own potential. This process can be formalized as $y = f\left(\sum_{i} w_i x_i + b\right)$, where $x = (x_1, \ldots, x_n)$ denotes a multidimensional input signal, $y$ denotes a one-dimensional output signal, $w = (w_1, \ldots, w_n)$ denotes the weight of the input signal, $b$ denotes a bias, and $f$ is an activation function. It can be seen that a deep neural network is essentially a mathematical model produced by nesting a simple function hierarchically. Although the deep neural network is inspired by neurophysiology, its working principle is far from the brain simulation depicted by the media. In fact, the working principle of the human brain has not yet been fully explored.
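The single-neuron model can be made concrete with a short sketch. It assumes the usual weighted-sum form $y = f(\sum_i w_i x_i + b)$; the function names `neuron`, `sigmoid`, and `step`, and the numeric values, are illustrative choices and are not taken from the text.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic activation, one common (assumed) choice for f."""
    return 1.0 / (1.0 + math.exp(-z))

def step(z: float) -> float:
    """Threshold activation: output 1 only when the accumulated signal exceeds 0."""
    return 1.0 if z > 0 else 0.0

def neuron(x, w, b, f=sigmoid):
    """Return y = f(sum_i w_i * x_i + b) for one artificial neuron."""
    if len(x) != len(w):
        raise ValueError("x and w must have the same length")
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return f(z)

# With a step activation, the bias b acts as a (negated) firing threshold.
y_fire = neuron([1.0, 0.5], [0.8, 0.4], b=-0.5, f=step)  # weighted sum is above the threshold
y_rest = neuron([0.1, 0.1], [0.8, 0.4], b=-0.5, f=step)  # weighted sum is below the threshold
```

With the step activation the neuron either fires or stays silent, which mirrors the biological description; smooth activations such as the sigmoid are typically preferred in practice because they are differentiable.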

When many neurons are hierarchically organized as a deep neural network, this deep model is equivalent to a nested composite function, and each layer of the network corresponds to a nonlinear mapping (the output signal of the previous layer is used as the input signal for the next layer). Signal transmission throughout the network can be formally described as $y = f\left(W_L\, f\left(W_{L-1} \cdots f\left(W_1 x + b_1\right) \cdots + b_{L-1}\right) + b_L\right)$, where $W_l$ and $b_l$ ($l = 1, \ldots, L$) respectively denote the weight matrix and the bias vector at the $l$th layer (i.e., the model parameters to be solved). Here, the model parameters are packed into matrices and vectors, since each layer of the network contains multiple neurons. Given the application task, a loss function (used to measure the difference between the actual and expected outputs of a deep neural network) should be designed first, and then the model parameters can be solved by optimizing the loss function with a backpropagation algorithm [21], so that a multilevel abstract representation collectively hidden in a dataset can be learned.
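The nested composition can be sketched as a simple loop over layers. This is a minimal illustration, not the authors' implementation: the helper names (`affine`, `forward`, `squared_error`), the per-layer choice of activation, and all numeric weights are assumptions made for the example.

```python
def relu(v):
    """Elementwise rectified linear activation."""
    return [max(0.0, vi) for vi in v]

def identity(v):
    """Linear output layer (no nonlinearity)."""
    return list(v)

def affine(W, b, h):
    """Compute W h + b for a weight matrix W (list of rows) and bias vector b."""
    return [sum(wij * hj for wij, hj in zip(row, h)) + bi
            for row, bi in zip(W, b)]

def forward(x, layers):
    """Apply h_l = f(W_l h_{l-1} + b_l) layer by layer, starting from h_0 = x."""
    h = list(x)
    for W, b, f in layers:
        h = f(affine(W, b, h))
    return h

def squared_error(y, target):
    """A simple loss measuring the gap between actual and expected outputs."""
    return sum((yi - ti) ** 2 for yi, ti in zip(y, target))

# A two-layer toy network: 2 inputs -> 2 hidden neurons -> 1 output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0], relu),
    ([[2.0, 1.0]], [0.1], identity),
]
y = forward([2.0, 1.0], layers)
loss = squared_error(y, [2.0])
```

Backpropagation would then compute the gradient of `loss` with respect to every entry of each `W` and `b` by applying the chain rule through the same nesting, in reverse order.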

According to statistical learning theory [22], the more parameters a model has, the higher its complexity and hence the stronger its learning ability. We can increase the model complexity of a deep neural network by “widening” or “deepening” it; the latter works better in practice. While “widening” only increases the number of basic functions, “deepening” increases not only the number of functions but also the number of layers of function nesting, which makes the model more powerful in terms of functional expression. Therefore, “deepening” is more helpful in improving model complexity and learning ability. Taking the ImageNet competition in the computer vision field as an example, neural architectures have become deeper and deeper, from the eight-layer AlexNet [8] to the 16-layer VGGNet [9], and then to the 152-layer ResNet [10]. At present, the deepest neural networks have reached thousands of layers, with the number of model parameters reaching several billion.

It is worth noting that one crucial aspect of a deep neural network is how to design the neural architecture reasonably. Existing neural architectures have mostly been developed manually by human experts, which is a time-consuming and error-prone process. Therefore, a great deal of effort is currently being put into automatic machine learning (AutoML), with a particular focus on methods of automated neural architecture search (NAS) [23]. The concept behind NAS is to explore a search space—which defines all of the architectures that can be represented in principle—by Bayesian optimization [24], reinforcement learning [25], or neuroevolutionary learning [26], with the goal of finding the architectures that achieve high predictive performance on unseen data.
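The search loop behind NAS can be illustrated with a deliberately tiny sketch in the neuroevolutionary style (mutate, evaluate, keep the better candidate). Everything here is hypothetical: the search space (`DEPTHS`, `WIDTHS`), the function names, and especially `proxy_score`, which is a placeholder for the expensive step of training a candidate network and measuring its accuracy on held-out data.

```python
import random

# Hypothetical search space: an architecture is a (depth, width) pair.
DEPTHS = [2, 4, 8, 16]
WIDTHS = [32, 64, 128, 256]

def proxy_score(arch):
    """Placeholder for validation performance; a real NAS run would train
    and evaluate the network here. This toy score peaks at (8, 128)."""
    depth, width = arch
    return -abs(depth - 8) / 8.0 - abs(width - 128) / 128.0

def mutate(arch, rng):
    """Resample either the depth or the width of the architecture."""
    depth, width = arch
    if rng.random() < 0.5:
        depth = rng.choice(DEPTHS)
    else:
        width = rng.choice(WIDTHS)
    return (depth, width)

def evolve(start, generations=50, seed=0):
    """Simple (1+1)-style evolutionary search: keep a child only if it scores higher."""
    rng = random.Random(seed)
    best, best_score = start, proxy_score(start)
    for _ in range(generations):
        child = mutate(best, rng)
        score = proxy_score(child)
        if score > best_score:
            best, best_score = child, score
    return best, best_score

start = (2, 32)
best_arch, best_score = evolve(start)
```

Bayesian optimization and reinforcement learning replace the `mutate` step with a model-guided proposal, but the outer loop of proposing, evaluating, and retaining architectures stays the same.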

Although deep learning has achieved many successes, the interpretability of such a model remains unclear. When researchers apply a deep neural network to model their problems, they regard it as a “black box” and focus only on the input and output. Most of the neural architectures employed are designed entirely on the basis of the experience and intuition of the researchers, an approach that fails to link the problem to be solved with its physical background. Although the calculation process of a deep neural network can be explicitly represented by a mathematical formula, it is difficult to explain at the level of physical meaning. The model lacks a physical connotation that can reflect the essence of the problem. Even if AutoML helps to find “better” neural architectures in terms of predictive performance, the result still cannot be physically explained. A few researchers have tried to explain a deep neural network in terms of specific application tasks. Taking image recognition as an example, researchers performed a deconvolution operation on a deep convolutional neural network (CNN) to visualize the visual features learned by the layers, hoping to explain the microscopic process of image recognition [27]. However, such a heuristic explanation is not universal and can hardly be applied extensively to other cases; furthermore, it fails to reveal the physical mechanism of the model. Therefore, the interpretability of the deep neural network is a bottleneck in its further development.

《3. Mesoscience is expected to be a possible solution to reveal the physical mechanism of deep learning and further promote the development of AI》

Associating the design of a deep neural network with the physical mechanism of the problem to be solved is a prerequisite for realizing breakthrough progress in AI, and the universality of the physical mechanism determines the scope of AI applications, which is a fundamental problem for AI in the future.

Mesoscience is based on the idea that complexity originates from the compromise in the competition between two or more dominant mechanisms in a system, resulting in a complex spatiotemporal dynamic structure [28]. Almost all systems studied in AI research are complex systems. Introducing mesoscience principles and methods into AI research (mainly in respect to deep neural networks) might be a promising way to address the aforementioned problems.

Starting with the study of gas–solid fluidization in chemical engineering [29], mesoscience has been successively applied to studies of gas–liquid fluidization [30], turbulent flow [31,32], protein structure [33], catalysis [34], and so forth. Its general principles have gradually been summarized. The main spirit of mesoscience can be summarized as follows [35,36]: In general, a complex problem has multiple levels, each of which has multiscale characteristics, and different levels are related to each other. A complex system consists of countless elements, and there is likely to be a spatiotemporal multiscale structure between the system scale and the element scale due to the collective effect of the elements. There are three types of regimes in such a structure (taking the case of a system controlled by two dominant mechanisms as an example), with completely different properties when the boundary and external conditions are changed:

A–B regime: This regime is jointly controlled by physical mechanisms A and B, and is known as the mesoregime. The structure of the mesoregime shows the alternation of the two states, which is controlled by the compromise in competition between mechanism A and mechanism B. In this regime, the system structure conforms to the following:

A-dominated regime: As the external conditions change, mechanism B disappears and mechanism A alone dominates the system. In this case, the system structure characteristics are simple and in line with the following:

B-dominated regime: As the external conditions change in the opposite direction, mechanism A disappears and mechanism B dominates the system; the system structure now conforms to the following:
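The conditions for the three regimes listed above are commonly expressed as extremum tendencies. The block below is a sketch under stated assumptions: the symbols $E_A$ and $E_B$, standing for the quantities that mechanisms A and B each tend to minimize, are introduced here for illustration and do not appear in the text.

```latex
\begin{aligned}
\text{A--B mesoregime:} \quad & \min \begin{pmatrix} E_A \\ E_B \end{pmatrix} \\
\text{A-dominated regime:} \quad & E_A \to \min \\
\text{B-dominated regime:} \quad & E_B \to \min
\end{aligned}
```

Read this way, the mesoregime is a multi-objective problem in which neither extremum can be fully realized, whereas each dominated regime reduces to a single-objective problem.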

Most importantly, the transition between the A, A–B, and B regimes is often accompanied by sudden changes in the system’s characteristics and function.

The problem handled by deep learning can often be regarded as a complex system. The correlation between the input and output of such a system is usually modeled as a nonlinear nested map. Using mesoscience theory to examine existing deep learning models, this paper proposes the following research and application mode of mesoscience-based AI:

Supposing that there is a huge training dataset, we want to establish a model to express the inherent laws of the dataset through deep learning. According to the concept and logic of mesoscience, the following steps should be taken:

(1) Analyze these data to determine how many levels they involve.

(2) For each level, analyze the existence of three regimes.

(3) If it belongs to regime A or regime B, the structure is simple and can be solved by the existing deep learning technique.

(4) If it is in the A–B mesoregime, the system has a significant spatiotemporal dynamic structure. It is necessary to first analyze its dominant physical mechanisms, and then to combine a multi-objective variational model of the two or more mechanisms with classical gradient descent to assist model training.

(5) After analyzing each level, carry out association and integration among different levels.
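The difference between the dominated regimes and the mesoregime in steps (3) and (4) can be illustrated with a toy optimization. This sketch is not the multi-objective variational method itself: it substitutes a plain weighted sum of two competing objectives, and the objectives $E_A = (\theta - a)^2$ and $E_B = (\theta - b)^2$, the weight `weight_a`, and all function names are assumptions made for illustration.

```python
def grad_descent(grad, theta0, lr=0.1, steps=200):
    """Classical gradient descent on a scalar parameter."""
    theta = theta0
    for _ in range(steps):
        theta -= lr * grad(theta)
    return theta

def compromise(a, b, weight_a, theta0=0.0):
    """Minimize weight_a * E_A + (1 - weight_a) * E_B,
    with E_A = (theta - a)**2 and E_B = (theta - b)**2."""
    def grad(theta):
        return (2.0 * weight_a * (theta - a)
                + 2.0 * (1.0 - weight_a) * (theta - b))
    return grad_descent(grad, theta0)

# Mechanism A pulls theta toward -1, mechanism B pulls it toward +1.
theta_A = compromise(-1.0, 1.0, weight_a=1.0)   # A-dominated: only E_A matters
theta_B = compromise(-1.0, 1.0, weight_a=0.0)   # B-dominated: only E_B matters
theta_AB = compromise(-1.0, 1.0, weight_a=0.5)  # both mechanisms compete
```

In the two dominated cases the solution sits at the extremum of a single objective, while in the mixed case it settles between the two, so that neither objective reaches its own minimum.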

Examining the above steps, we found that, for problems belonging to regime A or regime B, the extreme conditions are relatively simple from the mesoscience perspective. Thus, the existing deep learning techniques can be used to quickly iterate to the solution to establish the mathematical model. However, for problems in the A–B mesoregime, the correlation between the input and output is controlled by multiple—at least two—physical mechanisms. Therefore, a time-consuming parameter-learning procedure is inevitable, if the conventional deep learning method is used. Alternatively, if the physical mechanism is decomposed first, according to the concept of mesoscience, and the multi-objective variational method is adopted to analyze different control mechanisms, coupled with the classical backpropagation algorithm, the deep learning solution satisfying the error condition can be obtained more quickly.

For complex problems dealt with by deep learning, if the control mechanism is analyzed first at the physical level, the model can be established following the above steps. This is helpful to speed up the model training and facilitate a deeper understanding of the physical nature of the system.

A general method to address the intrinsic problems in AI may be to introduce the analytical and processing means of mesoscience into deep learning, such as region decomposition, dominant mechanisms identification, and the multi-objective variational method.

《4. The problem-solving paradigm of AI can be improved by mesoscience》

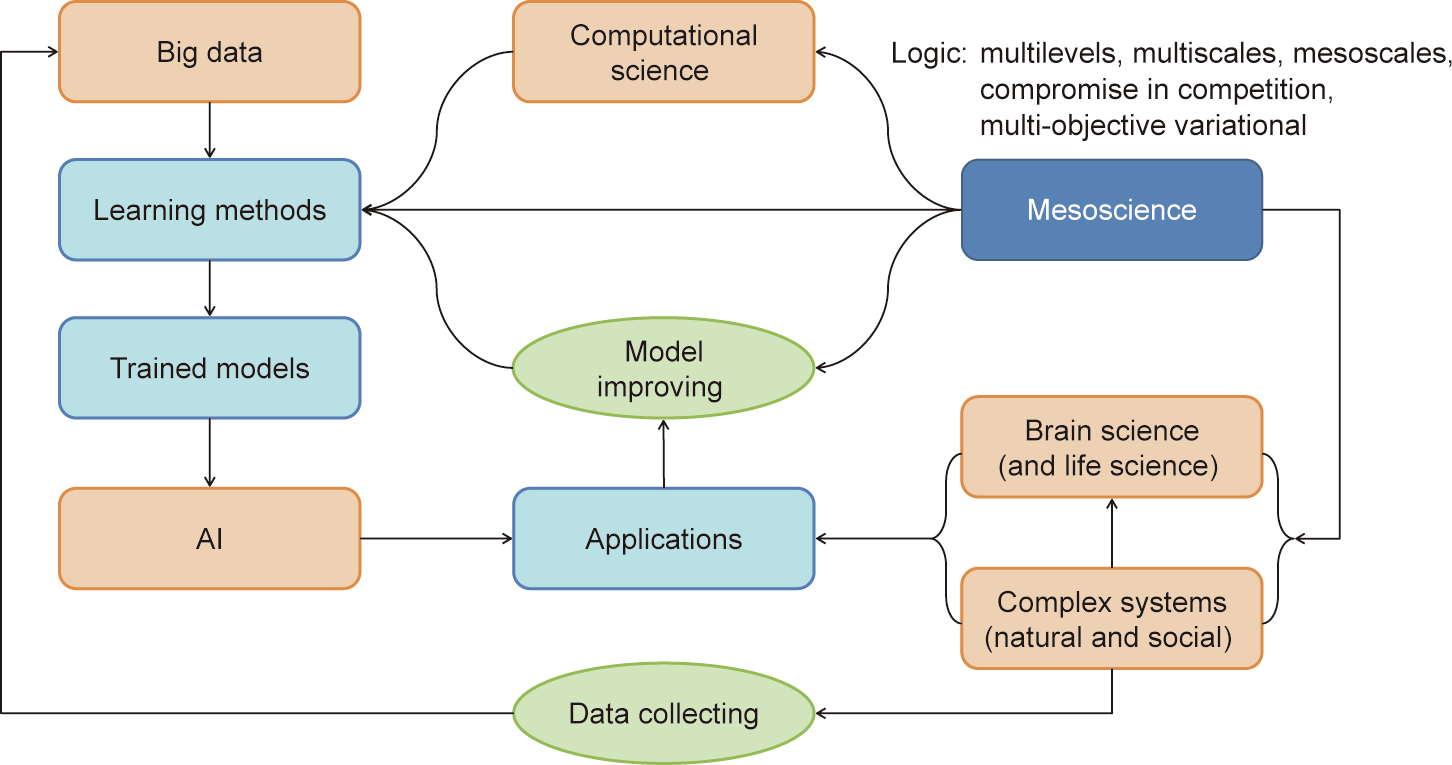

A general flowchart of AI theoretical and application research based on deep learning is shown in Fig. 2. The main steps can be summarized as follows:

《Fig. 2》

Fig. 2. Schematic of existing AI research and applications.

(1) Collecting training data: Gather (sufficient) data from application scenarios (often complex systems), and label the dataset if supervised learning is involved.

(2) Constructing a deep neural network: Choose a suitable neural architecture and optimization algorithms to train a statistical model that can capture the potential patterns hidden in the dataset.

(3) Applying the model: Predict results for new data using the well-trained model.
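The three steps above can be sketched end to end on a toy problem. To keep the example self-contained, a single sigmoid neuron stands in for the deep neural network; the dataset, learning rate, and function names are all assumptions chosen for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Step (1): collect and label training data (toy 1-D samples, label 1 if x > 0).
training_data = [(-2.0, 0), (-1.0, 0), (1.0, 1), (2.0, 1)]

def train(data, lr=0.5, steps=500):
    """Step (2): fit y = sigmoid(w * x + b) by gradient descent on cross-entropy loss."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(steps):
        # For cross-entropy with a sigmoid output, d(loss)/dz = prediction - label.
        grad_w = sum((sigmoid(w * x + b) - y) * x for x, y in data) / n
        grad_b = sum((sigmoid(w * x + b) - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def predict(model, x):
    """Step (3): apply the trained model to new data."""
    w, b = model
    return sigmoid(w * x + b)

model = train(training_data)
p_pos = predict(model, 3.0)   # unseen positive input
p_neg = predict(model, -3.0)  # unseen negative input
```

In a realistic setting, step (2) also involves choosing the architecture itself, which is the part of the workflow that currently depends on experience and intuition.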

The essential step in such a flowchart is to construct the deep neural network. At present, researchers rely entirely on their own experience and intuition to complete this step, due to the “black box” issue inherent in artificial neural networks. To address this, we suggest that mesoscience principles and methods could be considered for the construction of a deep neural network. The resulting improved flowchart is shown in Fig. 3.

《Fig. 3》

Fig. 3. Schematic of mesoscience-based AI research and applications.

Solving the problem of complex systems is a critical goal of AI, as illustrated by Figs. 2 and 3; complex systems provide both application scenarios and massive datasets for AI. The human brain is also a complex system. On the one hand, brain science [37]—that is, studying the material basis and mechanism of human thinking—is an important support for the future development of AI. On the other hand, improving our understanding of complex systems will also help brain science research. The recent success of the deep learning technique can be regarded as a mathematical success. If the research results of brain science can be integrated into AI in the future, this will inevitably and significantly promote the research and application of AI.

Brain scientists have been trying to reveal the secrets of the human brain, not only from the perspective of biology and anatomy, but also in terms of the development of the cognitive mechanism. By combining this knowledge of how intelligence occurs with advanced computer hardware and software technology, it is possible to build an “artificial brain” that may be comparable to its human counterpart. However, even though different people's brains have the same structure and functions, people differ in their ability to solve problems after receiving the corresponding education and training. The key is that when a problem arises, people make use of their understanding of the problem’s physical nature, together with their brain’s reasoning and induction abilities, to obtain the correct solution within a limited time. Therefore, the ability of AI to solve practical problems should depend on the progress of brain science, the development of information technology (IT), the understanding of the physical nature of the problems, and the effective integration and coupling among them.

Although mesoscience originates from the field of chemical engineering, its basic principles are pervasively applicable to other complex systems. The core concept of mesoscience is to find the multilevel correlations and multiscale associations in the system, as well as to identify the mesoregimes and their physical dominant mechanisms in different levels. Next, the multi-objective variational method is used to seek the law of compromise in competition of the dominant mechanisms in order to solve the problem. In the new paradigm illustrated by Fig. 3, mesoscience plays an important role in improving the model architecture and learning algorithm, in addition to improving related computing hardware and computational methods.

From the mesoscience concept to AI applications, many issues remain to be explored. For example, Google’s AlphaGo Fan [38] adopted deep reinforcement learning, which integrates the perception ability of deep learning with the decision-making ability of reinforcement learning, to beat the European Go champion Fan Hui. By combining the deep reinforcement learning technique with a Monte Carlo tree search strategy, AlphaGo assesses the current board situation through the value network to reduce the search depth, and uses the policy network to reduce the search width, in order to improve the search efficiency. AlphaGo is a successful application example of deep reinforcement learning. From the perspective of system structural analysis, deep reinforcement learning can be divided into three levels: ① tens of thousands of perceptrons, ② several deep learning networks, and ③ deep reinforcement learning strategies. These levels coincide with the three scales in mesoscience: ① element scale, ② mesoscale, and ③ system scale. It is worth investigating whether the analytical methods of mesoscience can be applied directly to deep reinforcement learning.

Notably, DeepMind has developed four main versions of AlphaGo: Fan, Lee, Master, and Zero. The earlier versions of AlphaGo, such as Fan and Lee [38], are trained by both supervised learning and reinforcement learning, while the latest version, AlphaGo Zero [39], is trained solely by self-play reinforcement learning without any human knowledge, and uses a single deep neural network rather than separate policy and value networks. Here, we take only AlphaGo Fan as an example, for two reasons: First, AlphaGo Fan [38] is the most complicated version, and this paper focuses on analyzing complex systems; thus, AlphaGo Fan is the most typical case among the four versions. Second, whether the policy and value networks are separated (e.g., AlphaGo Fan and Lee [38]) or merged (e.g., AlphaGo Zero [39]), they still correspond to the mesoscale in accordance with the mesoscience concept.

Another example is the generative adversarial network (GAN) [40], which is one of the most popular and successful deep learning models. GAN performs learning tasks by means of a mutual game between the generative model and the discriminative model. GAN’s goal is to generate pseudo data consistent with the distribution of real data by using a generative model with the help of a discriminative model. These two models have their own goals as well; the generative model attempts to generate data that can deceive the discriminative model, while the discriminative model strives to distinguish the generated data from the real data. In the process of establishing the GAN, the two models are mutually restrained, and each tries to lead in the direction of its own advantage. Finally, under the constraint of the GAN objective function, the two models reach equilibrium and compromise with each other. If the behavior of the two models is regarded as being equivalent to the two dominant mechanisms, A and B, in the mesoregime, then the GAN’s training is the process of compromise in the competition of two dominant mechanisms in the mesoregime. In this way, the spirit of mesoscience may be beneficial when training a GAN model and further boosting its applications.
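The competition between the two models is usually written as a minimax problem. The formula below is the standard GAN objective; the symbols ($G$ for the generative model, $D$ for the discriminative model, $p_{\text{data}}$ for the real-data distribution, and $p_z$ for the noise prior) are introduced here and do not appear in the text above.

```latex
\min_{G} \max_{D} V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_{z}(z)}\left[\log\left(1 - D(G(z))\right)\right]
```

Here $D$ seeks to maximize $V$ while $G$ seeks to minimize it, so the two terms play roles loosely analogous to the two dominant mechanisms A and B, and the equilibrium of the game corresponds to their compromise.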

Through an analysis of the progress of AI and big data during the past years, two conclusions can be drawn, as shown in Fig. 3: ① With the continuous development of brain science, the working principle of the human brain is gradually being revealed, and a breakthrough could be realized in AI using such achievements. ② Big data has its own complexity. In order to tackle the complexity behind big data and build a physical model conforming to the objective law, it is necessary to identify the physical mechanism behind the complexity. These two aspects are logically consistent; that is, exploring the physical mechanisms of complex systems and making effective use of them are the keys to dealing with complexity. Reflecting this logic, this paper advocates applying the principles and methods of mesoscience to AI.

《5. Conclusion》

The emergence of big data, along with the advancement of computing hardware, has prompted great development in AI, leading to its applications in many fields. However, due to certain problems inherent in deep learning, the interpretability of deep learning is limited. Although mesoscience originates from chemical engineering, its analytical methods—which include multilevel and multiscale analysis, as well as the idea of compromise in the competition of dominant mechanisms in the mesoregime—can also be applied to other complex systems. In recent years, mesoscience has achieved successful applications in different fields, and is expected to provide a novel concept to improve the interpretability of deep learning.

At present, the proposed mesoscience-based AI is a preliminary research idea, and its verification and expansion require the joint effort of researchers from various disciplines. In particular, exploration of its specific applications is required in the future.

《Acknowledgements》

We would like to thank Dr. Wenlai Huang, Dr. Jianhua Chen, and Dr. Lin Zhang for the valuable discussion. We thank the editors and reviewers for their valuable comments about this article. We gratefully acknowledge the support from the National Natural Science Foundation of China (91834303).

《Compliance with ethics guidelines》

Li Guo, Jun Wu, and Jinghai Li declare that they have no conflict of interest or financial conflicts to disclose.
