In a recent paper [1], I discussed the concept of the “ocean of data” in response to ever-increasing computing power and the large number of online data repositories. These settings call for a new paradigm of computational framework that connects various data repositories, incorporates machine learning, reuses existing data, and guides new computational and experimental efforts to create a “sustainable ecosystem” of data and tools. It is my hope that some recently available open-source codes can promote the development of pathways with lower barriers between individual data repositories and the ocean of data, and can add value to data processing in individual data repositories by communicating with the ocean of data, as schematically shown in Fig. 1 [1].

《Fig. 1》

Fig. 1. Schematic diagram of a “sustainable ecosystem” of data showing various data repositories (lakes), interconnections (flows), private data (percolation), processing (evaporation), collection (ocean), and reuse (condensation and precipitation) [1]. ESPEI: extensible, self-optimizing phase equilibrium infrastructure.

Thermodynamics is a science that concerns the state of a system—whether stable, metastable, or unstable—when interacting with the surroundings. The combination of the first and second laws of thermodynamics proposed by Gibbs [2,3] integrates the external and internal parts of a system. Even though Gibbs focused on the equilibrium of heterogeneous substances [2,4], the combined first and second laws of thermodynamics include both the equilibrium and non-equilibrium states of a system [5,6].

Thermodynamic modeling based on the calculation of phase diagrams (CALPHAD) approach [6–9] establishes the Gibbs energy of individual phases across the complete space of the external and internal variables of the system, covering the stable, metastable, and unstable regions of each phase. In fact, the definition of the energy difference between the stable and non-stable structures of pure elements is the foundation of CALPHAD modeling, and was termed “lattice stability” by Kaufman, who pioneered the CALPHAD approach and coined the name [10,11]. The concept of lattice stability and the common acceptance of a set of lattice stability values have enabled the development of multicomponent databases with over 20 elements that have become the foundation of integrated computational materials engineering (ICME) [12] and the Materials Genome Initiative [13].

Before 2000, CALPHAD modeling relied almost exclusively on experimental information and some theoretical estimations, and its integration with the results from first-principles calculations based on density functional theory (DFT) [14] was rather limited [15]. The continued development of computational methods and software tools, particularly the Vienna Ab initio Simulation Package (VASP) [16–18], has fueled the utilization of energetics from DFT-based first-principles calculations in CALPHAD modeling, and enabled the multidisciplinary information technology research (ITR) project “Computational Tools for Multicomponent Materials Design” in 2002, which was supported by the US National Science Foundation (NSF). This ITR project integrated the DFT and CALPHAD approaches with phase-field simulations and finite-element methods [19]. The convergence of the DFT and CALPHAD methods, along with the inspiration of the Human Genome Project [20] and the NSF-supported education program titled “An Integrated Education Program on Computational Thermodynamics, Kinetics, and Materials Design” [21], prompted me to coin the term “materials genome” in 2002 [22,23].

In 2009, I reviewed the first-principles calculations and CALPHAD modeling of thermodynamics [24]. My team established the extensible, self-optimizing phase equilibrium infrastructure (ESPEI) concept [25–27], which begins CALPHAD modeling using the thermochemical data of individual phases from first-principles calculations and refines model parameters using experimental phase equilibrium data. The significance of the ESPEI concept is threefold: ① First-principles calculations provide energetics as a function of internal degrees of freedom, that is, of the internally nonequilibrium configurations of each individual phase, which cannot be directly obtained from experiments because experimental data are usually for equilibrium states that are mixtures of many configurations [28–32]; ② ESPEI establishes a mechanism to efficiently evaluate model parameters, their statistical uncertainties, and uncertainty propagation in calculated properties [27,33]; and ③ the ESPEI data infrastructure integrates proto data and processed data from CALPHAD modeling and enables the efficient reuse of proto data and the effective updating and maintenance of processed data. With more and more publications on first-principles calculations, ubiquitous high-performance computing facilities, and large-scale online databases such as the Materials Project [34], the Open Quantum Materials Database [35], and Automatic Flow for Materials Discovery (AFLOW) [36] in the United States alone, I believe that the thermochemical data from DFT-based first-principles calculations will play an increasingly critical role in the CALPHAD modeling of a wide range of materials, particularly in the discovery and design of new materials.

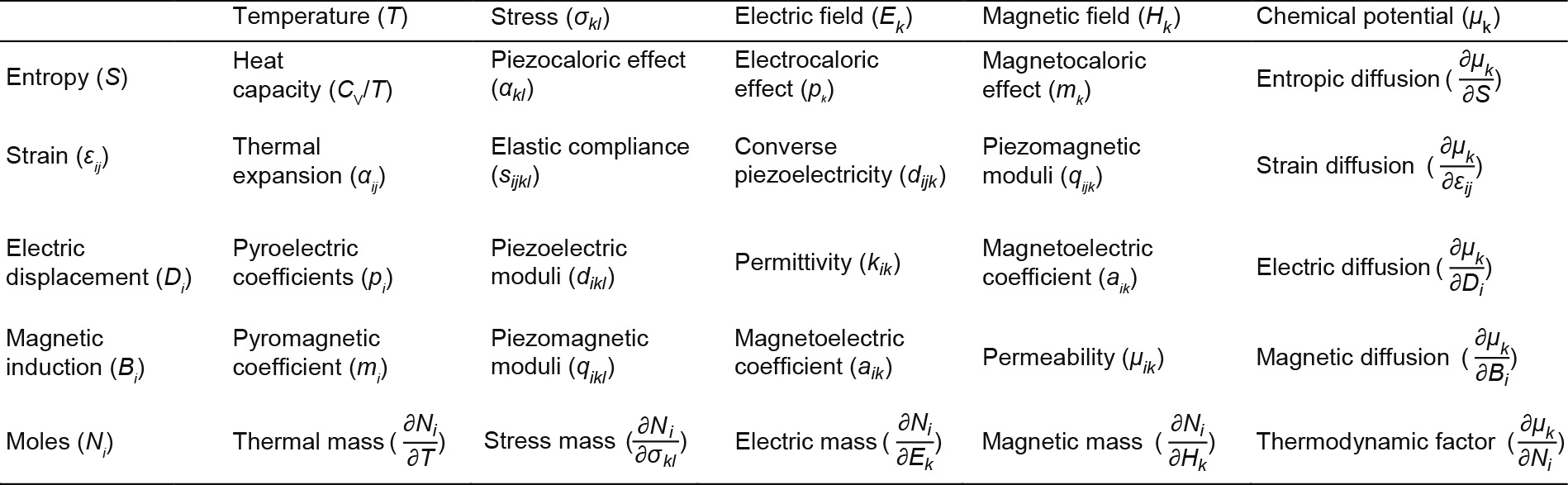

Materials design based on CALPHAD databases, thermodynamic calculations, and kinetic simulations has been systematized by Olson for developing new materials and improving existing materials [37,38]. This materials design approach connects controllable parameters in processing with measurable quantities in properties using microstructure attributes. The key foundational variable among a plethora of microstructure attributes is the phases that are formed; this is in accordance with the modeling of individual phases in the CALPHAD approach, which has been extended to a range of other properties. Some examples by my research group are shown in Table 1 [28–32,39–69]. It may also be mentioned that the second derivatives of energy with respect to its natural variables represent many physical properties, as shown in Figs. 2 and 3 [6,13,70], in which some temporary terms are assigned to the derivatives in the last column and last row of Fig. 2, and to those in the last column of Fig. 3 plus the compress heat.
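As a brief illustration of how second derivatives of energy map onto physical properties, a few standard textbook identities are collected below. They are not a reproduction of Figs. 2 and 3, and the symbols $C_V$, $C_P$ (heat capacities of the system), $B_S$ (adiabatic bulk modulus), and $\kappa_T$ (isothermal compressibility) are conventional choices assumed here rather than taken from the figures.

$$
\left(\frac{\partial^2 U}{\partial S^2}\right)_{V,N} = \left(\frac{\partial T}{\partial S}\right)_{V,N} = \frac{T}{C_V},
\qquad
\left(\frac{\partial^2 U}{\partial V^2}\right)_{S,N} = -\left(\frac{\partial P}{\partial V}\right)_{S,N} = \frac{B_S}{V}
$$

$$
\left(\frac{\partial^2 G}{\partial T^2}\right)_{P,N} = -\left(\frac{\partial S}{\partial T}\right)_{P,N} = -\frac{C_P}{T},
\qquad
\left(\frac{\partial^2 G}{\partial P^2}\right)_{T,N} = \left(\frac{\partial V}{\partial P}\right)_{T,N} = -V\kappa_T
$$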

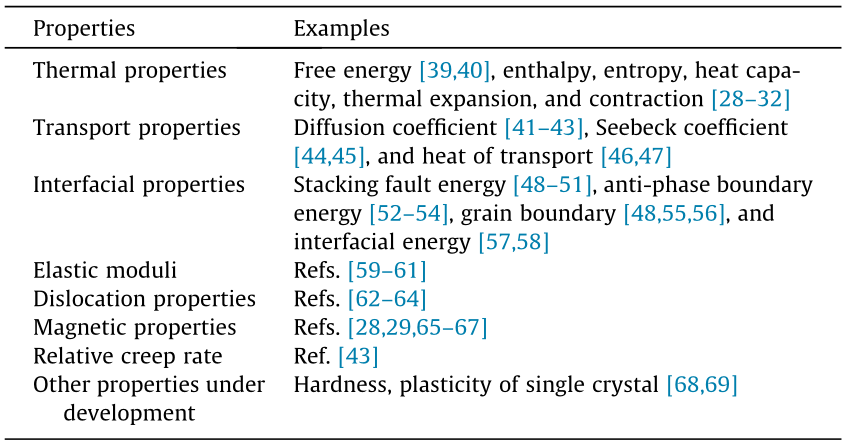

《Table 1》

Table 1 Examples of computed and modeled properties.

《Fig. 2》

Fig. 2. Physical quantities related to the second derivatives of internal energy with respect to its natural variables [6,13].

《Fig. 3》

Fig. 3. Physical quantities related to the second derivatives of Gibbs energy with respect to its natural variables [70].

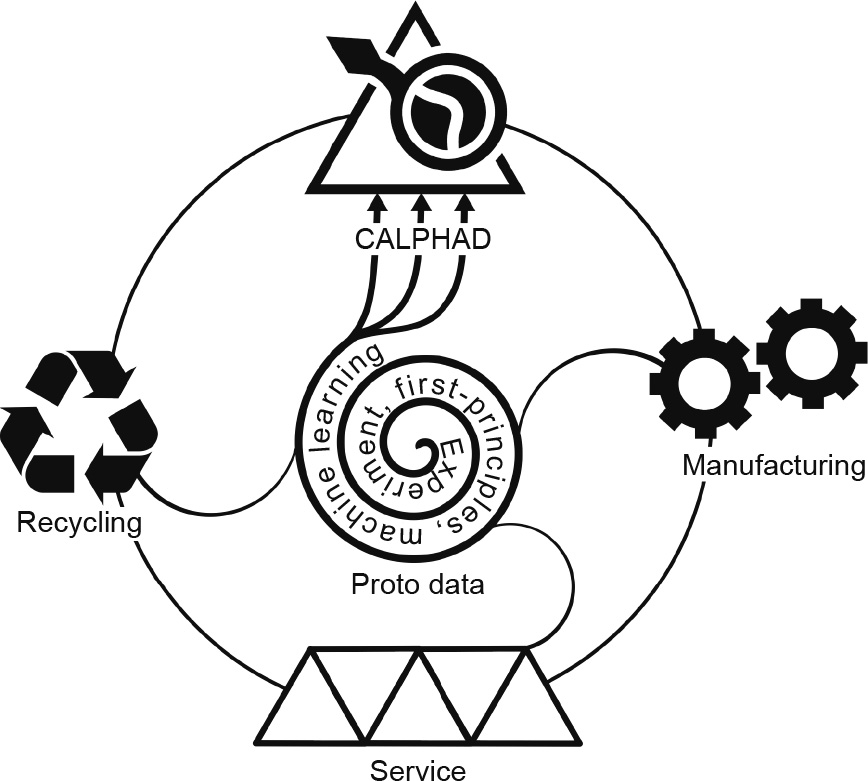

《Fig. 4》

Fig. 4. Schematic chart of the data ecosystem including proto data (experiment, first-principles calculations, and machine learning), processed data (modeling: CALPHAD with pycalphad [72,73] and ESPEI [25–27,74]), materials manufacturing, materials service, and materials recycling.

Materials design is the first step of the life cycle of materials. After design, materials are manufactured and put into service, both of which generate new proto data that enrich or contrast with the existing proto and processed data. Furthermore, materials recycling is becoming critical because of both environmental concerns and materials expenses. As recycling often involves several materials, the chemistry of recycled materials can become more complex than the chemistry covered by the proto and processed data that were used to design each of the materials. These new proto data may thus necessitate additional first-principles calculations and the revision and expansion of processed data [71]. This is a critical connection for a sustainable data ecosystem, as shown in Fig. 4 [25–27,72–74], and it is not a trivial task, as current thermodynamic databases often contain more than 20 elements, albeit with limited proto data in the multidimensional space of external and internal variables [75–77]. It is my hope that our efforts in developing the open-source software packages DFTTK [78], pycalphad [72,73], and ESPEI [25–27,74] can inspire the community to develop new tools to further promote the materials research paradigm driven by science and computation [79].

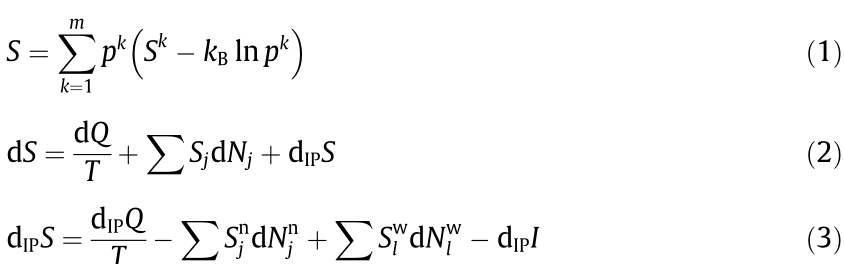

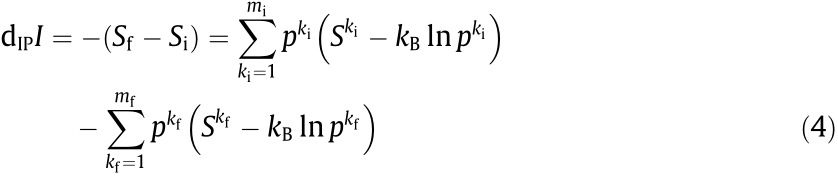

Additional challenges are related to multiscale complexity in materials in terms of both length and time scales, and how information passes between scales to produce microscopic and macroscopic behaviors [19]. We have recently shown that the following entropy equations hold promise for multiscale integrations of materials’ properties and information through entropy [80]:

Eq. (1) represents the total entropy of a system, $S$, calculated from the configurations at the scale $k$, with $p_k$ being the probability of configuration $k$ of the system and $\sum_k p_k = 1$, $S_k$ being the entropy of each configuration in the scale $k$, and $k_B$ representing the Boltzmann constant. It is important to note that the entropy of the system consists of the configurational entropy at the scale of consideration plus the entropy of each individual configuration, and the probability of each configuration is related to the free energies of all configurations. Each individual configuration itself consists of another set of configurations, and $S_k$ can thus be expressed in the same form as Eq. (1) with its own configurations. This division can continue until all important scales are considered. In the domain of materials science and engineering, where the focus is on the formation of phases, the atomic configurations represent the dominant scale with subscales of electronic and phonon density of states [39].
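Read from the description above, Eq. (1) combines a probability-weighted sum of the configuration entropies with the configurational (mixing) term, which may be written as

$$
S = \sum_k p_k S_k - k_B \sum_k p_k \ln p_k .
$$

The following minimal Python sketch evaluates this expression for a hypothetical two-configuration system. The text states only that the probabilities are related to the free energies of all configurations; the Boltzmann form $p_k \propto \exp(-F_k / k_B T)$ used here is an assumption, as are the function names and all numerical values.

```python
import math

K_B = 8.617333262e-5  # Boltzmann constant in eV/K


def configuration_probabilities(free_energies, temperature):
    """Assumed Boltzmann form: p_k = exp(-F_k / (k_B T)) / Z."""
    f_min = min(free_energies)  # shift energies for numerical stability
    weights = [math.exp(-(f - f_min) / (K_B * temperature)) for f in free_energies]
    partition = sum(weights)
    return [w / partition for w in weights]


def total_entropy(probabilities, config_entropies):
    """Eq. (1): S = sum_k p_k S_k - k_B sum_k p_k ln p_k."""
    within = sum(p * s for p, s in zip(probabilities, config_entropies))
    between = -K_B * sum(p * math.log(p) for p in probabilities if p > 0.0)
    return within + between


# Hypothetical configurations: energies in eV, entropies in eV/K.
energies = [0.00, 0.05]
entropies = [1.0e-4, 3.0e-4]
temperature = 500.0  # K

free_energies = [e - temperature * s for e, s in zip(energies, entropies)]
probabilities = configuration_probabilities(free_energies, temperature)
system_entropy = total_entropy(probabilities, entropies)
```

Because the mixing term is non-negative, the system entropy in this sketch is never smaller than the probability-weighted average of the configuration entropies. The same function can be applied recursively by computing each $S_k$ from its own subscale configurations.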

In Eq. (2), $dS$ is the entropy change of the system, $dQ$ is the amount of heat that the system receives from the surroundings, $S_j$ is the partial molar entropy of component $j$ in the surroundings or the system, $dN_j$ is the amount of component $j$ that the system receives from ($dN_j > 0$) or releases to ($dN_j < 0$) the surroundings, $T$ is the temperature, and $d_{\mathrm{IP}}S$ is the entropy production due to independent internal processes (IP). The first term on the right-hand side of the equation is often how the concept of entropy is introduced in the thermodynamics of materials, while the second term is seldom discussed and is often buried in the direct introduction of chemical potential into the combined first and second laws of thermodynamics. The details of the third term, entropy production, are typically considered part of kinetics and are not addressed in thermodynamics because usually only equilibrium states are considered. It is worth noting that the entropy in the combined first and second laws of thermodynamics contains all three terms in Eq. (2), though this is often not explicitly stated [80].
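From the term-by-term description above, Eq. (2) can be written out as follows. This is a reconstruction from the verbal definitions, so the exact typography and the ordering of the terms are assumptions:

$$
dS = \frac{dQ}{T} + \sum_j S_j \, dN_j + d_{\mathrm{IP}}S .
$$

For a closed system ($dN_j = 0$) undergoing a reversible process ($d_{\mathrm{IP}}S = 0$), this reduces to the familiar $dS = dQ/T$, which is the first term alone.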

Eq. (3) shows that the entropy production due to an internal process can be written in a form similar to Eq. (2) by defining the internal process as an IP-system [80]. This IP-system may consume some nutrients and generate some waste, each with its own partial entropy, produce heat ($d_{\mathrm{IP}}Q$), and reorganize its configurations to produce a certain amount of information ($d_{\mathrm{IP}}I$), which can be written as follows using Eq. (1):

where the subscripts $f$ and $i$ represent the final and initial configurations of the IP-system. For a spontaneous/irreversible internal process, the entropy production represented by Eq. (3) must be positive, based on the second law of thermodynamics; however, the sign of $d_{\mathrm{IP}}I$ can be either positive (generate information) or negative (erase information). Various thought experiments are discussed in Ref. [80]. It should be noted that the sign conventions in Eqs. (2) and (3) are opposite: a positive sign indicates that the system receives heat and mass from the surroundings in Eq. (2), but that the IP-system gives out heat and mass in Eq. (3), as these increase the entropy.

CALPHAD modeling based on the properties of individual phases has proven to be foundational for computational materials science and engineering. To further enhance the predictive power of the CALPHAD method, I suggest including configurations at relevant scales as shown by Eq. (1), so that properties at various scales can be predicted, including the emergent behaviors that individual configurations do not possess. Extreme emergent behavior is observed in relation to the limit of stability of a system, when the derivative of temperature with respect to entropy approaches zero from a positive value in its stable region [6]. Consequently, the derivative of entropy with respect to temperature becomes positively infinite because temperature and entropy are conjugate variables in the combined law of thermodynamics; that is, the entropy change diverges, resulting from competition among the stable and metastable configurations shown by Eq. (1). It should be noted that not only is this divergence of the entropy of the system not a behavior that each individual configuration possesses, but also all molar extensive quantities of the system diverge at the limit of stability. Furthermore, they may diverge negatively, such as thermal expansion represented by the derivative of volume with respect to temperature, because volume and temperature are not conjugate variables in the combined law of thermodynamics [6]. We have demonstrated this for cerium, with a positive divergence, and for Fe$_3$Pt, with a negative divergence, in terms of thermal expansion [28–30]. It should also be noted that, due to the Maxwell relations [6], the derivative of volume with respect to temperature is equal to the negative of the derivative of entropy with respect to pressure, which is named “compress heat,” so the quantities in Figs. 2 and 3 in the present article are symmetrical.
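The two relations invoked in this paragraph can be made explicit with a short derivation. It is a sketch under the assumption of fixed composition and no internal processes, so that the combined law in terms of Gibbs energy reads $dG = -S\,dT + V\,dP$.

$$
\left(\frac{\partial V}{\partial T}\right)_P
= \frac{\partial^2 G}{\partial T \, \partial P}
= \frac{\partial^2 G}{\partial P \, \partial T}
= -\left(\frac{\partial S}{\partial P}\right)_T
$$

$$
\left(\frac{\partial S}{\partial T}\right) = \left(\frac{\partial T}{\partial S}\right)^{-1} \to +\infty
\quad \text{as} \quad
\left(\frac{\partial T}{\partial S}\right) \to 0^{+}
$$

The first line is the Maxwell relation behind the symmetry of the mixed second derivatives. The second shows why the sign of the divergence is fixed for a conjugate pair such as $T$ and $S$, whereas a non-conjugate derivative such as $(\partial V / \partial T)_P$ carries no such constraint and may diverge with either sign.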
